You’ve launched your Agentforce experience. Congrats! But if there’s one thing we’ve learned at Salesforce, it’s this: success doesn’t start at go-live. It starts with what comes next.

As someone helping run Agentforce on our own Help site, I’ve seen firsthand what it takes to keep an AI agent smart, safe, and helpful long after go-live. Here’s what works for us when it comes to measuring answer quality, sustaining improvements, and building feedback loops that matter through ongoing agent evaluation.

Treat Agentforce like a teammate, not a ticket

The biggest trap? Thinking your work is done post-launch. Agentforce isn’t a “set it and forget it” tool. It’s a dynamic teammate that requires structure, oversight, and feedback.

We hold:

- Weekly reviews focused on performance: usage patterns, resolution rates, content gaps.

- Near-real-time monitoring, with checks every 15 minutes, to catch errors early.

- Monthly checkpoints to zoom out and recalibrate.

These regular processes form the backbone of our agent evaluation framework. It’s a rhythm that mirrors how we manage dynamic products, because that’s what Agentforce is. We give it a roadmap. We ship updates. We evolve with our users.

If you don’t maintain it, quality slips. Fast.
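To make the 15-minute monitoring check more concrete, here is a minimal Python sketch. It computes the error rate over the most recent 15-minute window and flags it when it crosses a threshold. The `Interaction` record, its `errored` flag, and the 5% threshold are hypothetical assumptions for illustration. They are not Agentforce APIs or our actual alerting rules.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical interaction record; real field names depend on your own logging.
@dataclass
class Interaction:
    timestamp: datetime
    errored: bool  # True if the agent turn ended in an error

WINDOW = timedelta(minutes=15)  # matches the 15-minute monitoring cadence
ERROR_RATE_THRESHOLD = 0.05     # assumed threshold; tune it to your own baseline

def error_rate(interactions: list[Interaction], now: datetime) -> float:
    """Share of interactions in the last 15 minutes that ended in an error."""
    recent = [i for i in interactions if now - WINDOW <= i.timestamp <= now]
    if not recent:
        return 0.0
    return sum(i.errored for i in recent) / len(recent)

def should_alert(interactions: list[Interaction], now: datetime) -> bool:
    """Flag the window when its error rate exceeds the assumed threshold."""
    return error_rate(interactions, now) > ERROR_RATE_THRESHOLD
```

Run on a schedule every 15 minutes, a check like this surfaces spikes early, while the weekly and monthly reviews handle slower trends.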


Define what “good” looks like, then build the system to support it

Before we could measure answer quality, we had to agree on what “quality” meant. For our Help agent, we landed on three core criteria:

- Completeness: Does the answer fully resolve the user’s question?

- Relevance: Is it actually addressing the question that was asked?

- Correctness: Is the answer factually accurate, and does it contain all the information required to solve the problem?

These are simple, but they hold up under pressure. Your criteria might differ depending on what your agent does (product recommendations, next-best actions, escalation rules), but the key is alignment. Define the standard. Stick to it.

Use AI to test AI – yes, really

A core part of maintaining a smart and helpful AI agent is continuous agent evaluation, using a mix of automated testing, human review, and real-time monitoring to ensure quality stays high.

Here’s where it gets fun. We use AI to evaluate Agentforce’s own answers. There are a few ways we do this:

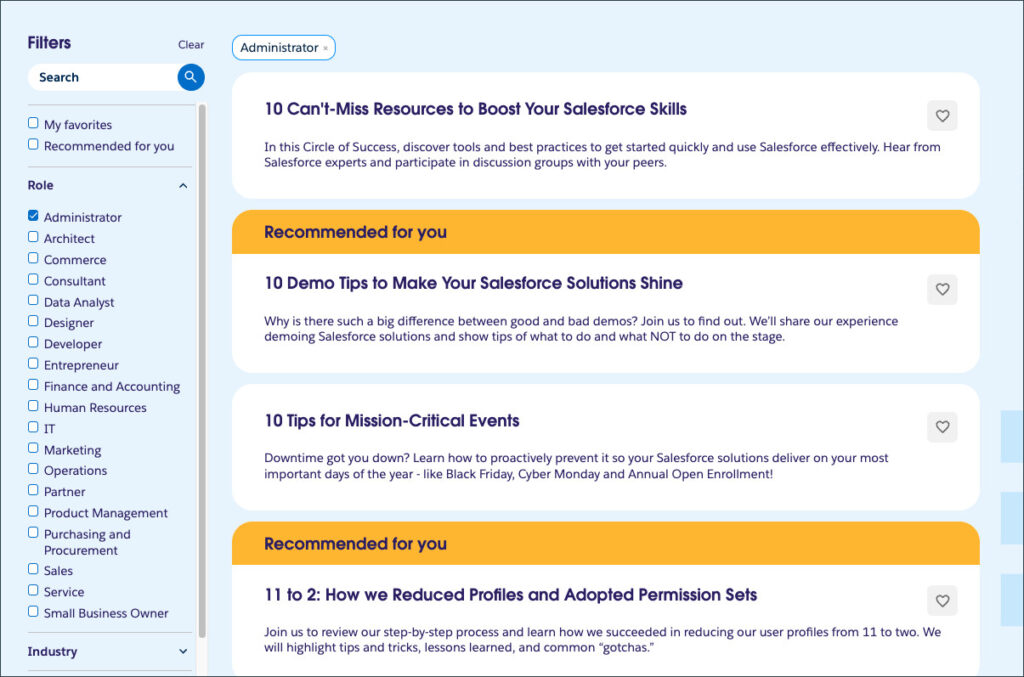

- Synthetic utterance testing: We pull thousands of historical customer questions from chat logs and case titles, then group them by intent using AI. From there, we generate test questions that represent real-world asks.

- Agents testing agents: One AI model generates answers. Another scores those answers using structured “judge prompts” that describe what a high-quality response looks like. It’s like peer review – but faster.

- Golden pairs: These are question-and-answer sets we’ve vetted as “ideal” responses. They serve as benchmarks to measure quality against. They’re useful for calibration and consistency, though they need upkeep as your knowledge base changes. We’ve even built pass/fail rules into our judge prompts so we can automate quality checks at scale.
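The judge-prompt and golden-pair ideas can be sketched in Python as follows. This is a hypothetical illustration, not our production prompt or a Salesforce API. `GoldenPair`, `build_judge_prompt`, `parse_verdict`, the prompt wording, and the JSON reply format are all assumptions. The call to the judge model is deliberately left out, since it depends on whichever model and client you use. The pass/fail rule assumed here is strict: an answer passes only if the judge marks every criterion PASS, and any malformed judge output counts as a failure.

```python
import json
from dataclasses import dataclass

# Hypothetical golden pair: a question plus a vetted "ideal" answer.
@dataclass(frozen=True)
class GoldenPair:
    question: str
    ideal_answer: str

# The three quality criteria, phrased as questions for the judge model.
CRITERIA = {
    "completeness": "Does the answer fully resolve the user's question?",
    "relevance": "Is it actually addressing the question that was asked?",
    "correctness": "Is it factually accurate, with all the information needed?",
}

def build_judge_prompt(pair: GoldenPair, candidate: str) -> str:
    """Assemble a judge prompt comparing a candidate answer to the golden answer."""
    rubric = "\n".join(f"- {name}: {q}" for name, q in CRITERIA.items())
    return (
        "You are grading an AI agent's answer against a vetted reference answer.\n"
        f"Criteria:\n{rubric}\n"
        "For each criterion, output PASS or FAIL.\n"
        'Respond only with JSON, e.g. {"completeness": "PASS", ...}.\n\n'
        f"Question: {pair.question}\n"
        f"Reference answer: {pair.ideal_answer}\n"
        f"Candidate answer: {candidate}\n"
    )

def parse_verdict(judge_output: str) -> bool:
    """Assumed pass/fail rule: pass only if every criterion is PASS.
    Unparseable or incomplete judge output is treated as a failure."""
    try:
        verdicts = json.loads(judge_output)
    except json.JSONDecodeError:
        return False
    if not isinstance(verdicts, dict):
        return False
    return all(str(verdicts.get(name, "")).upper() == "PASS" for name in CRITERIA)
```

Treating malformed output as a failure keeps the automated check conservative. A judge that drifts off-format shows up as a drop in pass rate rather than being silently ignored.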

And soon, we’ll take this further with our new Testing Center and Interaction Explorer, tools designed to help teams evaluate Agentforce performance with less manual effort.


Customers know what’s broken, ask them

Customer feedback plays a crucial role in our agent evaluation, providing real-world signals that complement internal testing. All the internal testing in the world won’t replace hearing directly from your customers. That’s why we run two feedback mechanisms on Help today:

- Customer Confirmed Resolution: After a user interacts with Agentforce, we ask, “Did this solve your problem?” A “yes” counts as a confirmed resolution.

- Experience Rating: For resolved cases, users can rate the interaction 1–5 stars. It’s a light signal, but a useful one.
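Here is a minimal Python sketch of how these two signals could be rolled up into metrics. The `Session` record and its field names are hypothetical. The denominator is also an assumption: the confirmed resolution rate below counts only an explicit “yes” and treats sessions where the user never answered as unconfirmed. You could reasonably choose to exclude unanswered sessions instead.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional

# Hypothetical per-session feedback record; field names are illustrative only.
@dataclass
class Session:
    said_yes: bool               # user answered "yes" to "Did this solve your problem?"
    stars: Optional[int] = None  # 1-5 experience rating, offered only for resolved cases

    def __post_init__(self) -> None:
        if self.stars is not None and not 1 <= self.stars <= 5:
            raise ValueError("stars must be between 1 and 5")

def confirmed_resolution_rate(sessions: list[Session]) -> float:
    """Share of all sessions with an explicit 'yes' (unanswered = unconfirmed)."""
    if not sessions:
        return 0.0
    return sum(s.said_yes for s in sessions) / len(sessions)

def average_experience_rating(sessions: list[Session]) -> Optional[float]:
    """Mean star rating across resolved sessions that left one, or None if none did."""
    ratings = [s.stars for s in sessions if s.said_yes and s.stars is not None]
    return mean(ratings) if ratings else None
```

For example, four sessions where three users said “yes” and two of those left 5 and 4 stars give a confirmed resolution rate of 0.75 and an average rating of 4.5.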

Soon, we’re rolling out a simple like/dislike feature for individual answers, along with sentiment analysis to help us understand tone even without direct feedback.

My rule of thumb: If you’re not hearing from users, you’re probably not asking the right way. Feedback needs to be designed into the experience, not left to chance.


Don’t aim for perfect, aim for better

Agentforce isn’t something you set and forget. It’s an evolving part of your customer experience, one that improves through iteration and with the right structure.

We’ve seen steady gains by staying close to the data, setting clear expectations, and treating quality as a continuous process. That’s how resolution rates improve. That’s how trust builds.

Want to skip straight to hands-on learning? Join an Agentforce NOW virtual workshop, led by product experts, and start building, testing, and tuning your agent live. No experience needed.