Not All Agents Are the Same, Especially When It Comes To Enterprise Tasks.

Building AI agents for CRM takes much more than deploying a Large Language Model (LLM). An enterprise agentic system needs to account for the appropriate workflows, access to data, and privacy and security protocols. Our latest research shows that simply connecting an LLM to an agentic framework doesn’t address many of the challenges in a complex enterprise environment.

To understand the extent of this gap, a new paper from our AI Research team, CRMArena-Pro, evaluated top-performing LLMs using a generic agentic framework on complex CRM tasks in a realistic environment, but without context from the enterprise data and metadata. Let’s call these ‘generic LLM agents’. The results show that these generic LLM agents achieve only around a 58% success rate in single-turn scenarios (giving a direct answer without clarification steps), with performance degrading to approximately 35% in multi-turn settings (where agents follow up with clarification questions).
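To put those two figures side by side, the short calculation below works out the absolute and relative drop. It is only illustrative arithmetic on the approximate rates quoted above (58% and 35%); the variable names are ours and do not come from the paper.

```python
# Approximate success rates quoted above (rounded figures, not exact paper values).
single_turn = 0.58
multi_turn = 0.35

# Absolute drop, expressed in percentage points.
absolute_drop_pts = (single_turn - multi_turn) * 100

# Relative drop: the share of single-turn successes lost in multi-turn settings.
relative_drop = (single_turn - multi_turn) / single_turn

print(f"Absolute drop: {absolute_drop_pts:.0f} percentage points")
print(f"Relative drop: {relative_drop:.0%}")
```

In other words, the gap is roughly 23 percentage points, or about 40% of the single-turn success rate.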

Why is this important? Because enterprise-grade agents – agents that are both capable and consistent in complex business settings – require a fundamentally different approach from what generic LLM agents or a DIY (do-it-yourself) build can provide. Without a robust agentic platform or architecture, generic LLM agents are simply not enterprise-ready.

Understanding Limitations in Generic LLM Agents.

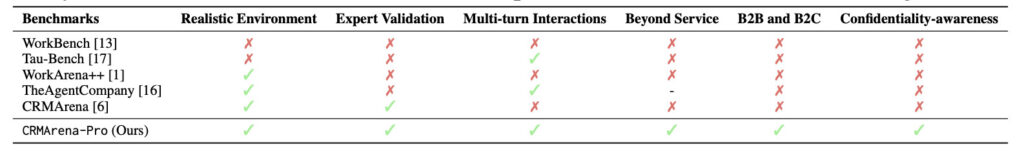

As enterprises increasingly deploy AI agents for business-critical tasks, existing benchmarks such as WorkBench and Tau-Bench fail to capture the complexity of real enterprise environments. Our CRMArena-Pro benchmark addresses this gap by providing a comprehensive evaluation framework that tests generic LLM capabilities across realistic business scenarios, validated by domain experts in both B2B and B2C contexts.

We evaluated leading frontier LLMs—including OpenAI, Gemini, and Llama models—across four critical enterprise capabilities:

- Database: Interacting with structured CRM data by formulating precise queries to retrieve specific customer, account, or transaction information

- Text: Searching through large volumes of unstructured content like knowledge bases, email transcripts, and call logs to extract relevant insights

- Workflow: Following established business processes and executing actions based on predefined rules and conditions

- Policy: Adhering to company policies, compliance requirements, and business rules

The results reveal significant gaps in enterprise readiness for generic LLM agents. While these generic LLM agents showed reasonable performance in workflow execution—with Gemini-2.5-pro achieving over 83% success in single-turn scenarios—their limitations become stark in more complex situations.

Multi-turn conversations exposed the most critical weakness. When generic LLM agents needed to gather additional information through follow-up questions, performance plummeted across all models. In nearly half of our test cases (9 out of 20), generic LLM agents failed to acquire all necessary information to complete their tasks, leaving business processes incomplete.
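One way to picture this failure mode is as a missing-information check: before acting, an agent should confirm that every field the task requires has been collected, and ask a follow-up question otherwise. The sketch below is a hypothetical illustration, not the CRMArena-Pro harness or any agent's actual logic; `REQUIRED_FIELDS`, `missing_fields`, and `next_step` are names invented for this example.

```python
# Hypothetical example: fields a "process refund" task might need.
REQUIRED_FIELDS = ("order_id", "customer_email", "refund_reason")


def missing_fields(collected: dict) -> list:
    """Return the required fields that are absent or empty in `collected`."""
    return [f for f in REQUIRED_FIELDS if not collected.get(f)]


def next_step(collected: dict) -> str:
    """Ask a clarifying question if anything is missing, otherwise proceed."""
    missing = missing_fields(collected)
    if missing:
        return f"ask_user:{missing[0]}"
    return "execute_task"


# After one turn the agent only knows the order ID, so it should keep asking.
state = {"order_id": "A-1001"}
print(next_step(state))

# Once every required field is filled in, the task can run.
state.update(customer_email="pat@example.com", refund_reason="damaged item")
print(next_step(state))
```

An agent that skips this kind of check and acts on partial information is the multi-turn failure described above: the task runs, but the business process is left incomplete.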

Most concerning for enterprise deployment: policy adherence failures. All generic LLM agents exhibited poor confidentiality awareness, meaning they struggled to recognize when information should be restricted based on user roles, data sensitivity, or compliance requirements. This represents a real risk for organizations handling sensitive customer data or operating under regulatory constraints.
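To make "confidentiality awareness" concrete, the sketch below shows a simple role-based filter applied to a record before an agent is allowed to surface it. Everything here is an assumption for illustration: the `FIELD_ACCESS` map, the role names, and the `redact` helper are hypothetical and are not taken from the benchmark or from any Salesforce product.

```python
# Hypothetical sensitivity map: field name -> roles allowed to see it.
FIELD_ACCESS = {
    "account_name": {"support_agent", "account_manager"},
    "contract_value": {"account_manager"},
    "ssn_last4": set(),  # never exposed through the agent
}


def redact(record: dict, role: str) -> dict:
    """Keep only fields the given role may see; unknown fields are denied."""
    return {
        field: value
        for field, value in record.items()
        if role in FIELD_ACCESS.get(field, set())
    }


record = {"account_name": "Acme", "contract_value": 120000, "ssn_last4": "1234"}
print(redact(record, "support_agent"))
print(redact(record, "account_manager"))
```

The design choice worth noting is the default: a field that is missing from the map is withheld rather than shown, so a gap in the policy fails closed instead of leaking data.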

The Agentforce Platform is More Than LLMs.

Enterprise-grade agents are only as strong as the data, intelligence, observability, and safeguards that power them—and that’s exactly what sets hyperscale digital labor platforms like Agentforce apart:

- Contextual data and metadata from Data Cloud ground agents in real-time, company-specific information, enabling hyper-personalized and accurate responses. And with zero-copy architecture, we can connect to any data source, providing flexible and trusted AI without duplicating or moving data.

- The Atlas Reasoning Engine acts as the brain, providing the intelligence needed to make smarter, faster decisions.

- The Command Center delivers full observability into agent performance—what they’re doing, how well they’re doing it, and where to improve.

- Salesforce’s evolving Trust Layer, embedded across the platform, ensures every action is governed by enterprise-grade standards for reliability, safety, and control.

- Agentforce delivers reliable, predictable automation by tapping into your existing business logic, workflows, and integrations — because it’s built on Salesforce’s deeply unified platform. It combines deterministic logic with agent-based reasoning, giving you both precision and dynamic responses.

Unlike generic LLM agents, Agentforce is an enterprise-grade agentic platform, where customers are seeing real, tangible value. This includes autonomously resolving 70% of 1-800Accountant’s administrative chat engagements during critical tax weeks in 2025, and increasing Grupo Globo’s subscriber retention by 22%. Agentforce equips leaders to monitor, improve, and scale their AI workforce with confidence.

Built for Enterprise. Designed to Unlock Human Potential.

Generic LLM agents — even with top performing models — fall short in enterprise environments. They lack the structured data, workflows, and safeguards needed to operate in high-stakes, real-world scenarios. Built on Salesforce’s deeply unified platform, Agentforce combines precision, adaptability, and trust — giving enterprises AI they can rely on.

And as powerful as AI agents become, one thing remains constant: humans must stay at the helm. At Salesforce, trust is our #1 value. We believe in building AI that’s safe, accountable, and accurate for everyone — but we also know technology alone isn’t enough.

Trust is a shared responsibility. It’s not just about what models can do — it’s about the choices people make with them. We can build guardrails, define ethical frameworks, and offer simulation and benchmarking tools like CRMArena-Pro — but the impact depends on how humans put them to work.

In this new era of enterprise AI, governance isn’t a feature — it’s a mindset. Agentforce puts control in human hands — not just prompts in model hands. Because the future of AI won’t be defined by models alone. It will be shaped by the platforms we build — and the principles we uphold together.

Acknowledgements

We would like to thank Jacob Lehrbaum, Kathy Baxter, Jason Wu, and Divyansh Agarwal for their insights and contributions to this article.