Over the past few months, we’ve conducted a sprawling investigation into Google’s inner workings.

It has led to major discoveries – some of which we’re disclosing here.

While we can’t reveal everything, the insights below offer a clearer view of how Google generates and ranks its results.

What ~1,200 experiments reveal about Google’s inner workings

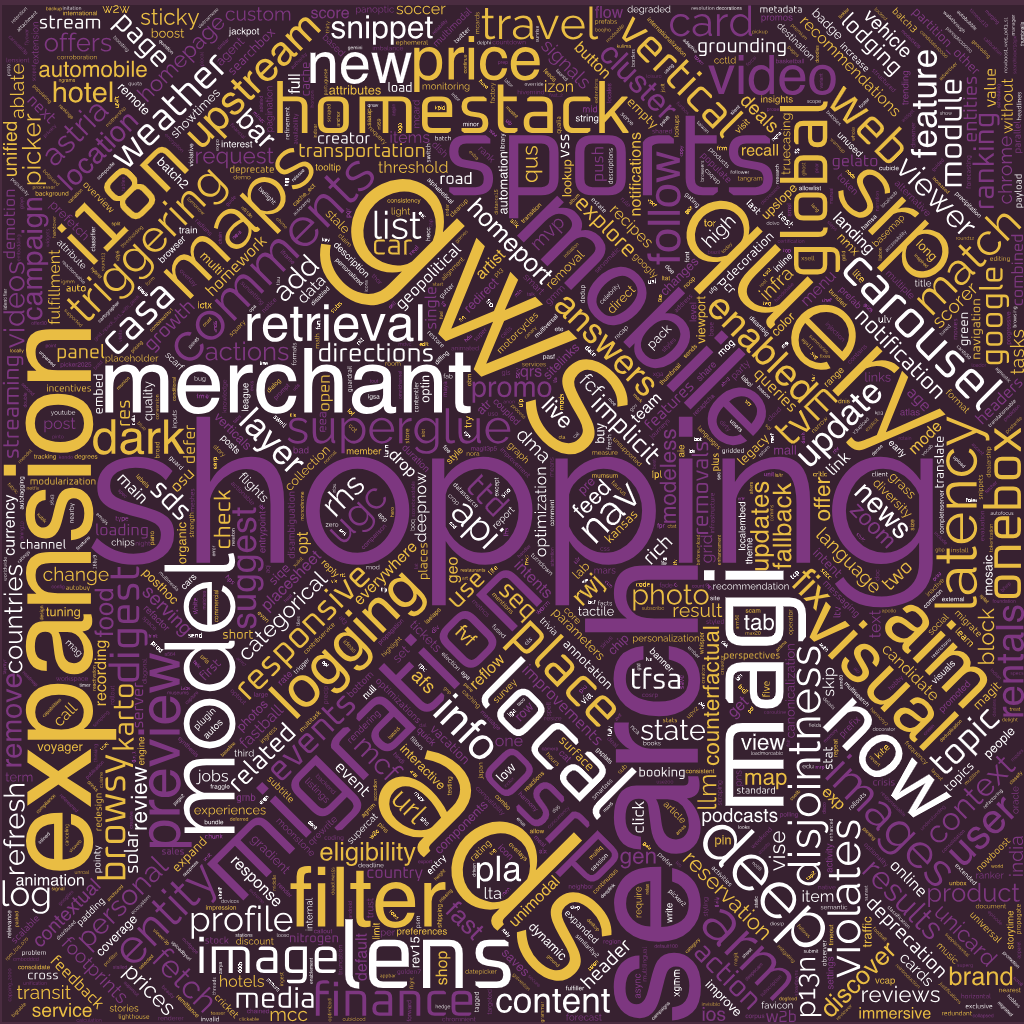

We obtained a list of nearly 1,200 Google experiments, over 800 of which were active as of June 2025.

This dataset confirms that many components revealed in the 2024 leaks – Mustang, Twiddlers, QRewrite, Tangram, QUS, and others – remain central to the system.

At the same time, it surfaces a wave of new and intriguing codenames, from Harmony and Thor to more evocative labels like Whisper, Moonstone, and Solar.

Among the most notable are DeepNow, a successor to Google Now with its counterpart NowBoost, and SuperGlue, which may replace Glue – NavBoost’s equivalent for universal search.

Unlike most websites, which undergo major overhauls every three to five years, Google evolves continuously.

There’s no big-bang “new version” – just a steady flow of micro‑changes that move from experiment to launch to full integration.

This explains the experiment list’s layered nature: months-old tests appear alongside brand-new trials, some already in their 15th iteration (e.g., MagiCotRev15Launch).

This incremental approach reduces risk – failed experiments impact only a small number of users – while enabling an innovation pace traditional redesigns can’t match.

The range of domains covered is striking:

- AI (including multiple Magi and AIM – or AI Mode – variants).

- Shopping (with over 50 dedicated experiments).

- Verticals like sports, finance, weather, travel, and more.

A clear pattern emerges – each vertical is assigned its own “domain,” such as ShoppingOverlappingDomain, TravelOverlappingDomain, or SportsOverlappingDomain.

These overlapping domains point to a sophisticated architecture where each product team operates within its own experimental space, enabling parallel testing without conflict.
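
To picture how parallel testing without conflict could work, here is a minimal sketch. It assumes, purely for illustration, that each domain hashes the user ID with its own salt, so a user's bucket in one domain is independent of their bucket in another. The function names, the 1,000-bucket granularity, and the traffic shares are hypothetical and do not come from the experiment list.

```python
import hashlib
from typing import Dict, Optional

BUCKETS = 1000  # hypothetical granularity, not from the source


def bucket(user_id: str, domain: str) -> int:
    """Deterministically map a user to a bucket inside one domain."""
    digest = hashlib.sha256(f"{domain}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % BUCKETS


def assign(user_id: str, domain: str, experiments: Dict[str, float]) -> Optional[str]:
    """Return the experiment a user falls into within a domain, if any.

    `experiments` maps an experiment name to its share of traffic (0..1).
    Shares are laid out back to back over the bucket range.
    """
    b = bucket(user_id, domain)
    start = 0
    for name, share in experiments.items():
        end = start + round(share * BUCKETS)
        if start <= b < end:
            return name
        start = end
    return None  # the user gets the default experience in this domain


# Made-up experiment names; only the domain names appear in the list.
shopping = {"ShoppingTestA": 0.01, "ShoppingTestB": 0.01}
travel = {"TravelTestA": 0.02}
print(assign("user-42", "ShoppingOverlappingDomain", shopping))
print(assign("user-42", "TravelOverlappingDomain", travel))
```

Because the domain name is part of the hash input, the same user can sit in a shopping test and a travel test at once without either team coordinating bucket ranges.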

See the full list of experiments here.

Entities everywhere

Entities play a pivotal role across Google’s entire ecosystem – a point explored in depth during a talk titled “Entities Everywhere,” delivered earlier this year in Marseille by Damien Andell and Sylvain Deauré of 1492.vision.

The presentation examined how the Knowledge Graph underpins services from Search to Discover, YouTube, Maps, and beyond.

The Knowledge Graph: Google’s central nervous system

Their research shows that the Knowledge Graph is far more than the side-panel assistant most users see.

It functions as the central nervous system of Google’s ecosystem – powering Search, Discover, YouTube, Maps, Assistant, Gemini, and AI Overviews.

Google treats data reliability as a core priority.

At the heart of the Knowledge Graph is Livegraph, which assigns a confidence weight to every triple it encounters before determining whether to admit it.

This obsessive focus on verification is reflected in a layered namespace hierarchy:

- kc: Data from highly validated corpora (e.g., official ages, government records).

- ss: Web-extracted “webfacts,” along with a few ok: shortfacts (less reliable but richer in coverage).

- hw: Information manually curated by humans.

This classification is far from cosmetic – it directly influences the confidence assigned to each fact and governs how that fact is used across Google’s services.
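
A rough sketch of how a namespace could feed a confidence weight is shown below. Everything numeric here is an assumption: the per-namespace priors, the admission threshold, and the noisy-OR rule for combining corroborating sources are illustrative choices, not Livegraph's actual logic, which is not public.

```python
from dataclasses import dataclass

# Hypothetical priors per namespace; the real weights are unknown.
NAMESPACE_PRIOR = {"kc": 0.95, "hw": 0.90, "ss": 0.60, "ok": 0.50}
ADMIT_THRESHOLD = 0.70  # hypothetical cut-off


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str
    namespace: str
    corroborating_sources: int = 1


def confidence(t: Triple) -> float:
    """Combine the namespace prior with corroboration (noisy-OR).

    Each source is treated as an independent chance that the fact is right.
    Unknown namespaces get a prior of 0.
    """
    prior = NAMESPACE_PRIOR.get(t.namespace, 0.0)
    return 1.0 - (1.0 - prior) ** max(t.corroborating_sources, 1)


def admit(t: Triple) -> bool:
    """Admit the triple only if its confidence clears the threshold."""
    return confidence(t) >= ADMIT_THRESHOLD


official = Triple("Q1", "birth_date", "1970-01-01", "kc")
webfact = Triple("Q1", "birth_date", "1970-01-01", "ss")
print(admit(official), admit(webfact))
```

Under these assumed numbers, a single web-extracted fact is held back, while the same fact seen in two independent sources would pass.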

Ghost entities and real‑time adaptation

Among the most fascinating discoveries are so-called ghost entities – unanchored items that float in a buffer zone of the Knowledge Graph.

Unlike fully validated entities with stable MIDs, these temporary structures allow Google to react in near real-time to emerging events.

While conventional LLMs remain fixed to their training snapshots, Google can:

- Dynamically generate new entities.

- Validate them progressively.

- Surface them in results as needed.

Supporting this system are SAFT and WebRef, which – as revealed in the 2024 leaks – operate continuously to extract, classify, and link entities, helping Google build a comprehensive semantic representation of the web.

SEO implications: Become a validated entity

For SEO professionals, the takeaway is clear: your brand needs to exist as a validated entity within Google’s ever-expanding Knowledge Graph.

The 2024 leak revealed that Google vectors entire sites, calculating thematic-coherence signals – such as siteFocusScore and NSR – that penalize scattered or unfocused content.

Chrome data continually feeds into the Knowledge Graph, identifying visited entities, updating trust signals, and tracking emerging trends.

In this new reality, visibility depends less on content volume and more on whether your site represents an entity that is triangulated by multiple sources and deeply embedded in a coherent thematic graph.

For a deeper dive, read the full article and view the accompanying infographic in “Google Knowledge Graph Mining Pipeline.”

Inside Google’s AI Mode: 90 projects and a constellation of agents

A recent discovery provided access to what appears to be an internal Google debug menu – visible only on-corp or via VPN.

While Tom Critchlow had surfaced an earlier version in March, this newer build, dated May 28, 2025, reveals nearly 90 projects in development – an increase of over 40 from the previous list.

A constellation of ultra‑specialized agents

What stands out immediately is Google’s multi-agent strategy. Instead of building a single all-purpose assistant, the company is developing a constellation of ultra-specialized agents:

- MedExplainer for health.

- Travel Agent and Flight Deals for trips.

- Neural Chef, Food Analyzer, and Smart Recipe for cooking.

- News Digest and Daily Brief for news.

- Shopping AI Studio for commerce.

- And so on.

Project Magi: The backbone of AI Mode

Many of these experiments fall under Project Magi, Google’s internal name for AI Mode, with more than 50 active tests. The rollout appears highly structured:

- MagiModelLayerDomain: The core infrastructure.

- MagitV2p5Launch: Aligns with Gemini 2.5.

- SuperglueMagiAlignment: Mirrors the Glue system that tracks user interactions.

Perhaps most striking is MagitCotRev15Launch, already on its 15th iteration.

It implements a Chain-of-Thought technique, in which the AI reasons through five stages:

- Reflect → Research → Read → Synthesize → Polish.

AIM (AI Mode) and the new UI

The AIM project focuses on user interfaces with multiple entry points:

- AimLhsOverlay: An AI sidebar.

- SbnAimEntrypoints: Repurposing the “I’m feeling lucky” button as an AI gateway.

- Even the Google logo itself becomes interactive.

Meanwhile, Stateful Journey and Context Bridge confirm the LLM revolution – Google is moving from isolated queries to full conversational sessions.

Link to the full list: https://i-l-i.com/google-ai-mode-debug-menu.html

SEO takeaways

- Hyper‑specialization is crucial: content must match expert‑level agents.

- Multi‑modality is no longer optional; text, images, video, and structured data all feed these agents.

- Personalization reaches unprecedented depth, driven by session‑level context rather than single queries.

The profiling engine: Smile, you’re being embedded!

Our investigation reveals a hidden layer of Google’s infrastructure – one that transforms every digital interaction into a mathematical embedding: a vector that encodes the essence of your online identity.

At the center of this profiling system is Nephesh, Google’s universal user-embedding foundation.

Nephesh generates vector representations of your preferences and behaviors across all Google products.

As the 2024 leaks showed, these embeddings:

- Feed signals that assess whether you fit a “typical” or “atypical” profile.

- Estimate how likely you are to engage with specific content – based on the alignment between your interests and the vectorized features of that content.
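
Alignment between a user vector and a content vector is commonly measured with cosine similarity. The sketch below uses that measure as a stand-in; the source does not say which metric Nephesh uses, and the three-dimensional vectors are invented for illustration.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # no direction to compare
    return dot / (norm_a * norm_b)


# Invented vectors: dimensions could stand for cycling, cooking, finance.
user = [0.9, 0.1, 0.4]
articles = {
    "tour-de-france-preview": [0.8, 0.0, 0.1],
    "index-funds-explained": [0.0, 0.1, 0.9],
}
ranked = sorted(articles, key=lambda k: cosine_similarity(user, articles[k]), reverse=True)
print(ranked)
```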

Picasso and VanGogh: Dual embeddings for Google Discover

For Discover, Google deploys a two‑part embedding system named (with pseudonyms) Picasso and VanGogh:

- Picasso: Your long‑term memory, patiently analyzing months of interactions to build a persistent profile. It uses two time windows: STAT (recent interests) and LTAT (long‑term passions).

- VanGogh: Runs on‑device, capturing real‑time signals such as device state, latest queries, and even how far you scroll.

These twin systems coordinate to balance your immediate needs with your deeper interests.
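
One simple way to balance recent and long-term interests is to build a topic profile per time window and blend the two. The sketch below does exactly that. The window lengths (7 and 180 days), the 50/50 blend, and the event format are assumptions made for the example; the source only says that STAT covers recent interests and LTAT covers long-term ones.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

SHORT_WINDOW_DAYS = 7    # hypothetical stand-in for STAT
LONG_WINDOW_DAYS = 180   # hypothetical stand-in for LTAT

Event = Tuple[int, str]  # (day number, topic)


def window_profile(events: Iterable[Event], now_day: int, window_days: int) -> Dict[str, float]:
    """Share of interactions per topic within the last `window_days`."""
    counts = Counter(
        topic for day, topic in events if 0 <= now_day - day < window_days
    )
    total = sum(counts.values())
    return {t: c / total for t, c in counts.items()} if total else {}


def blended_interest(events: Iterable[Event], now_day: int, short_weight: float = 0.5) -> Dict[str, float]:
    """Weighted mix of the short-window and long-window profiles."""
    events = list(events)  # allow two passes over a generator
    short = window_profile(events, now_day, SHORT_WINDOW_DAYS)
    long_ = window_profile(events, now_day, LONG_WINDOW_DAYS)
    return {
        t: short_weight * short.get(t, 0.0) + (1 - short_weight) * long_.get(t, 0.0)
        for t in set(short) | set(long_)
    }


history = [(0, "cycling"), (1, "cycling"), (99, "tennis"), (100, "tennis")]
print(blended_interest(history, now_day=100))
```

In this toy history, tennis dominates the recent window while cycling still keeps a share through the long window, which is the kind of balance described above.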

A constellation of specialized embeddings

Beyond Picasso‑VanGogh, Google maintains a constellation of specialized embeddings:

- Vertical embeddings (e.g., podcasts, video, shopping, travel).

- Temporal embeddings (real‑time, short‑term, permanent).

- Contextual embeddings that adapt to situational cues.

Google’s HULK system takes behavioral analysis to the extreme.

It detects whether you’re IN_VEHICLE, ON_BICYCLE, ON_STAIRS, IN_ELEVATOR, or even SLEEPING – using these signals to interpret and anticipate user context in real time.

It also identifies frequently visited places – such as SEMANTIC_HOME and SEMANTIC_WORK – and uses that data to predict future destinations and personalize results accordingly.

Query understanding: Query expansion and real‑time scoring revealed

Another notable breakthrough concerns Google’s query‑expansion engine and a mysterious real‑time scoring layer.

Through a method we’ll keep confidential, we captured how your queries are transformed:

- For instance, in “cycling tour france,” “cycling tour” instantly becomes the consolidated bigram “cyclingtour” and fans out to “bicycle,” “bike,” and “trips.”

- Special markers, such as iv;p and iv;d, appear: iv;p for in‑verbatim exact matches and iv;d for linguistic derivations.
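
The bigram consolidation and fan-out in the “cycling tour france” example can be mimicked with two lookup tables. This is a toy sketch: the tables are hand-written from that single example, the function name is hypothetical, and the iv;p / iv;d markers are not modeled.

```python
from typing import Dict, List, Tuple

# Hand-written tables based on the one example in the text.
BIGRAMS: Dict[Tuple[str, str], str] = {("cycling", "tour"): "cyclingtour"}
EXPANSIONS: Dict[str, List[str]] = {"cyclingtour": ["bicycle", "bike", "trips"]}


def expand_query(query: str) -> List[dict]:
    """Merge known bigrams, then attach expansion variants to each term."""
    tokens = query.lower().split()
    result = []
    i = 0
    while i < len(tokens):
        pair = tuple(tokens[i:i + 2])
        if pair in BIGRAMS:
            term = BIGRAMS[pair]
            i += 2  # both words were consumed by the bigram
        else:
            term = tokens[i]
            i += 1
        result.append({"term": term, "variants": EXPANSIONS.get(term, [])})
    return result


for item in expand_query("cycling tour france"):
    print(item)
```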

Geographic intelligence

For a query like “nail salon fort lauderdale 17th street,” the system:

- Maps geo‑categories (geo:ypcat:manicuring) and zone codes (geo;88d850000000000).

- Expands address variations.

- Translates certain terms on the fly when your location suggests local intent, even if the local language isn’t your default language.

These findings confirm that:

- The 2024‑leaked architecture, GWS → Superroot → Query Understanding Service (QUS) → QBST, is still live.

- Ongoing experiments such as GwsLensMultimodalUnderstandingInQusUpstream and QusPreFollowM1InQResS run upstream.

Real‑time term scoring

The same leak exposes a scoring grid where each term gets 0–10 points per URL:

- Stop‑words are ignored.

- Title terms earn bonuses.

- Named entities consistently hit maximum scores.

- Crucially, scoring is pairwise. The same term can receive different scores for the same URL depending on query context.
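
A toy version of such a grid is sketched below. It implements only the first three rules (stop-words skipped, title bonus, named entities at the maximum) and leaves out the query-context dependence, since the source gives no detail on how that works. The stop-word list, the frequency cap, and the bonus size are invented for illustration.

```python
from typing import Dict, Iterable, Set

STOP_WORDS = {"the", "a", "of", "in", "for"}  # tiny illustrative list
MAX_SCORE = 10
BODY_CAP = 5      # hypothetical cap on points from body frequency
TITLE_BONUS = 3   # hypothetical bonus for appearing in the title


def score_terms(query_terms: Iterable[str], title: str, body: str,
                entities: Set[str]) -> Dict[str, int]:
    """Give each non-stop-word query term 0-10 points for one URL.

    `entities` is a set of lowercase terms recognized as named entities.
    """
    title_words = set(title.lower().split())
    body_words = body.lower().split()
    scores: Dict[str, int] = {}
    for term in query_terms:
        t = term.lower()
        if t in STOP_WORDS:
            continue  # stop-words are ignored entirely
        if t in entities:
            scores[t] = MAX_SCORE  # named entities hit the maximum
            continue
        base = min(body_words.count(t), BODY_CAP)
        bonus = TITLE_BONUS if t in title_words else 0
        scores[t] = min(base + bonus, MAX_SCORE)
    return scores


print(score_terms(
    ["the", "cycling", "france"],
    title="Cycling holidays",
    body="cycling routes and cycling maps",
    entities={"france"},
))
```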

This aligns with Google’s documented Salient Terms process – context‑weighted metrics such as virtualTf, idf, and salience refine the lexicon.

These lexical scores don’t decide final ranking.

NavBoost, freshness, and other factors dominate, but the scores do illuminate how queries are interpreted and weighted in real time.

The information presented comes exclusively from publicly available sources obtained without bypassing access controls or intrusion. It is published for informational purposes only.