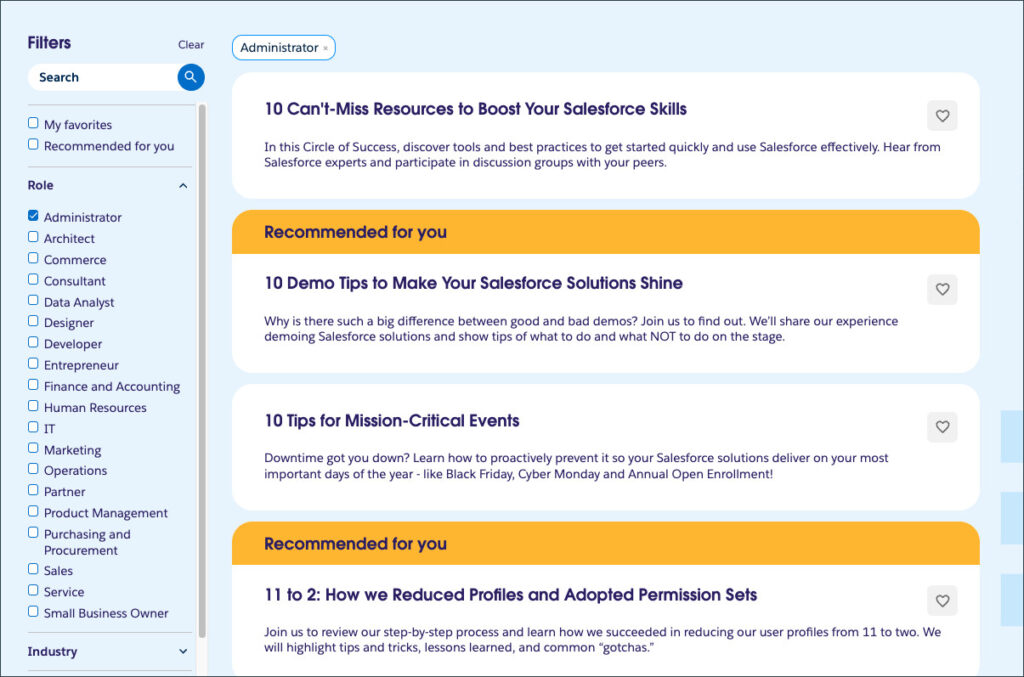

Large language models (LLMs) excel at understanding and acting on written language. When combined with an agentic layer like Agentforce, they can take action on a user’s behalf. Until recently, these agents were limited to text input. With the rise of multimodal LLMs, you can now create agents that consider images and files when answering a question or building a plan.

In our latest Agentforce Decoded video, you’ll learn how to build a general-purpose action that enables your agents to use images and PDFs as context. We’ll walk you through a practical example — parsing receipts to create expense reports — and show you how this capability opens up new possibilities for your agentic workflows. You can use this same approach to allow your agents to troubleshoot issues from screenshots, parse contracts, or analyze documents at runtime.
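To make the receipt example concrete, here is a minimal, platform-neutral sketch of the data flow in Python. It is not the implementation from the video and it does not use any Agentforce or Salesforce API. The names `build_request`, `parse_receipt`, `ExpenseItem`, and `fake_model`, as well as the payload shape and the JSON response format, are all assumptions made for illustration. The idea is simply that a general-purpose action packages a file and an instruction together, hands them to a multimodal model, and turns the structured reply into records.

```python
import base64
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ExpenseItem:
    """One line item extracted from a receipt (hypothetical shape)."""
    description: str
    amount: float


def build_request(file_bytes: bytes, mime_type: str, instruction: str) -> dict:
    """Package a file and an instruction into one generic payload.

    The payload layout is an assumption; a real model API defines its own.
    """
    return {
        "instruction": instruction,
        "attachment": {
            "mime_type": mime_type,
            "data_base64": base64.b64encode(file_bytes).decode("ascii"),
        },
    }


def parse_receipt(
    file_bytes: bytes,
    mime_type: str,
    call_model: Callable[[dict], str],
) -> List[ExpenseItem]:
    """Ask a multimodal model for line items and parse its JSON reply."""
    request = build_request(
        file_bytes,
        mime_type,
        'Return the receipt line items as JSON: '
        '[{"description": string, "amount": number}]',
    )
    raw_reply = call_model(request)
    return [
        ExpenseItem(str(row["description"]), float(row["amount"]))
        for row in json.loads(raw_reply)
    ]


def fake_model(request: dict) -> str:
    """Stand-in for a real multimodal model call; returns canned JSON."""
    return json.dumps(
        [
            {"description": "Coffee", "amount": 4.5},
            {"description": "Taxi", "amount": 18.0},
        ]
    )


# Placeholder bytes stand in for a real uploaded PDF or image.
items = parse_receipt(b"%PDF-1.4 placeholder", "application/pdf", fake_model)
total = sum(item.amount for item in items)
```

Because the file type and the instruction are both parameters, the same shape could in principle cover the other cases mentioned above, such as screenshots or contracts, by changing only the instruction and the record type.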

To learn more about building multimodal workflows and agent actions, check out the resources below.

Resources

About the author

Charles Watkins is a Lead Developer Advocate at Salesforce. You can follow him on LinkedIn and GitHub.

The post How to Build a Multimodal Agent in Salesforce appeared first on Salesforce Developers Blog.