In modern Ops and SRE, the pressure is relentless. Teams grapple with stringent Time to Resolution (TTR) targets for production incidents, navigate a vast and ever-expanding landscape of applications and services, and often face critical knowledge bottlenecks where vital information is siloed or hard to find during high-pressure situations. To tackle these challenges head-on, Salesforce developed OpsAI Agent, an AI-powered agent designed to augment our internal Ops teams.

This post details how we architected and built this solution by leveraging the power of Agentforce as the AI orchestration layer, Salesforce Data Cloud as the central knowledge and data hub, and Slack as the intuitive user interface.

The challenge: Information overload and resolution delays

The core issues that OpsAI Agent aims to solve stem from the pressures of modern IT operations. These include aggressive Time to Resolution (TTR) targets that leave little room for diagnostic or remediation delays, impacting service availability. Teams also grapple with the inherent complexity of vast and ever-evolving systems, making it impractical for every member to possess universal expertise. Furthermore, critical knowledge often remains hidden within extensive documentation or past incident reports, leading to time-consuming manual searches during active incidents. This is compounded by data silos, where valuable operational data from logs, metrics, and traces is not readily transformed into actionable intelligence. Finally, the challenge of scaling human expertise to match the growing number of applications and alerts presents a significant operational bottleneck.

Architectural pillars: Agentforce, Data Cloud, and Slack

OpsAI Agent is built upon three interconnected pillars:

- Agentforce acts as the central nervous system: it hosts the AI reasoning layer (the Atlas Reasoning Engine), orchestrates workflows through Topics and Actions, manages conversational state, and defines the assistant’s reasoning and response mechanisms.

- Supporting this is Data Cloud, which serves as the knowledge foundation by ingesting, processing, and indexing information from diverse enterprise sources.

- Finally, Slack provides a familiar UI where Ops engineers interact with OpsAI Agent, receive alerts from monitoring systems, and get findings, resolution steps, and documentation summaries directly within their existing chat workflows, minimizing context switching.

The OpsAI Agent workflow: From alert to insight

OpsAI Agent integrates natively with Slack. While we’ll focus on the core Salesforce technologies, understanding the high-level interaction flow via Slack is key:

- Alert ingestion: An application alert appears in a monitored Slack channel.

- OpsAI activation: The OpsAI Slack application detects and parses this alert.

- Session with Agentforce: The Slack app initiates a secure session with the Agentforce platform, passing along the extracted alert details.

- Agentforce orchestration: Agentforce takes over, classifying the request into a topic, triggering relevant actions (like knowledge retrieval from Data Cloud), and engaging the Atlas Reasoning Engine to formulate a response based on predefined instructions.

- Response delivery: The generated insights, summaries, or resolution steps are sent back to the Slack app, which then presents them in a structured format within the original Slack thread.

- Feedback loop: Users can provide feedback on the assistant’s responses directly in Slack, which is relayed back to Agentforce for continuous improvement.

This flow ensures that the power of Agentforce and Data Cloud is accessible directly where the operations teams collaborate.
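To make the alert-parsing step (step 2 in the list above) more concrete, here is a minimal Python sketch of what a Slack app might do with an incoming channel message. It assumes a hypothetical alert format, `[SEVERITY] app=<name> error=<message>`; real monitoring tools post alerts in their own formats, and the names `Alert`, `parse_alert`, and `build_agent_message` are illustrative rather than part of OpsAI Agent. Python is used only to keep the sketch self-contained.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical alert format posted by a monitoring tool, for example:
#   "[CRITICAL] app=billing-api error=Database connection error"
ALERT_PATTERN = re.compile(
    r"^\[(?P<severity>[A-Z]+)\]\s+app=(?P<app>\S+)\s+error=(?P<error>.+)$"
)


@dataclass
class Alert:
    severity: str
    application: str
    error: str


def parse_alert(message_text: str) -> Optional[Alert]:
    """Return an Alert if the Slack message matches the alert format, else None."""
    match = ALERT_PATTERN.match(message_text.strip())
    if match is None:
        return None  # Not an alert (e.g. ordinary chat), so the app ignores it
    return Alert(
        severity=match.group("severity"),
        application=match.group("app"),
        error=match.group("error").strip(),
    )


def build_agent_message(alert: Alert) -> str:
    """Compose the text forwarded to the Agentforce session (wording is an assumption)."""
    return (
        f"Find error resolution for application {alert.application}. "
        f"Severity: {alert.severity}. Error: {alert.error}"
    )


if __name__ == "__main__":
    alert = parse_alert("[CRITICAL] app=billing-api error=Database connection error")
    if alert is not None:
        print(build_agent_message(alert))
```

Messages that don’t match the pattern return `None`, so ordinary conversation in the monitored channel never opens an agent session.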

Inside OpsAI Agent: Agentforce actions and the Atlas Reasoning Engine

The real intelligence of OpsAI Agent resides within the Agentforce platform and its interaction with Data Cloud. Here’s how it works:

- Building the knowledge foundation in Data Cloud: OpsAI Agent’s ability to provide relevant information hinges on a comprehensive and well-structured knowledge base.

  - Diverse data sources: We ingest data from Confluence, historical incident records, and relevant Google Drive documents.

  - Data ingestion and vectorization: This information is ingested into Data Cloud as an unstructured data lake object (UDLO). From the UDLO, a search index is created that generates vector embeddings for the textual content, enabling semantic search. The result is a rich, queryable set of “knowledge documents” ready for Agentforce.

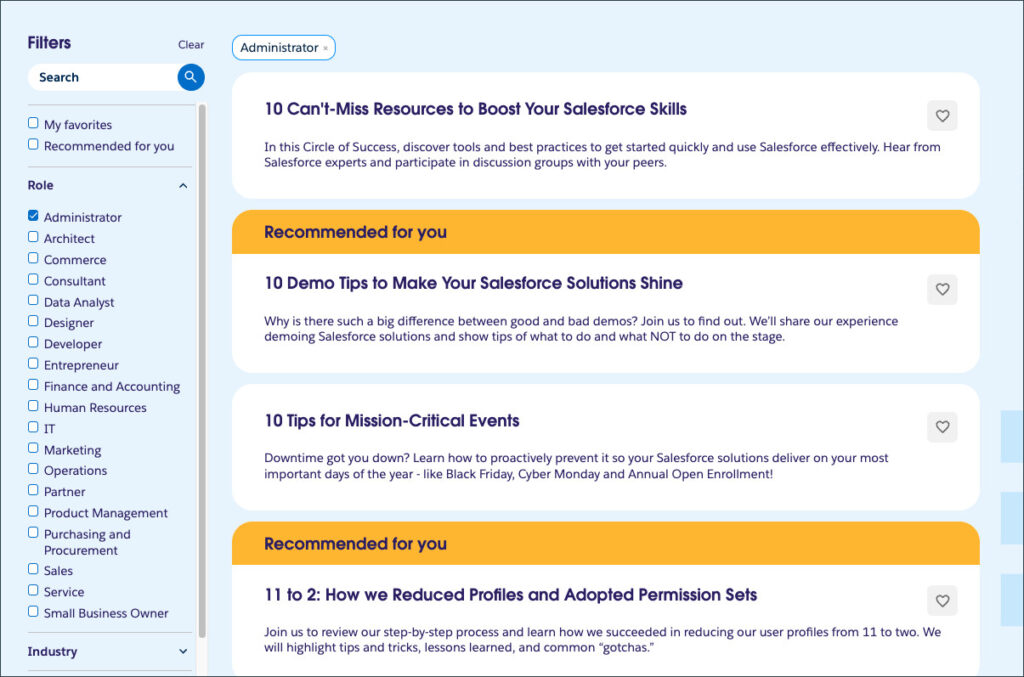
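Data Cloud performs the chunking, embedding, and indexing itself, so there is no code to write for this step. Still, a small Python sketch helps show what a search index does conceptually: documents are split into overlapping chunks, each chunk is turned into a vector, and a query is answered by comparing its vector against the stored ones. The `embed` function below is a toy stand-in (a hashed bag of words) for a real embedding model, so it captures word overlap rather than meaning; the chunk size, overlap, and vector size are arbitrary assumptions.

```python
import math
import re
import zlib
from collections import Counter
from typing import List, Tuple

DIM = 256  # Toy vector size; production embedding models use learned, much richer vectors


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> List[str]:
    """Split a document into overlapping windows of words."""
    words = text.split()
    step = max(1, max_words - overlap)
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # The last window already reaches the end of the document
    return chunks


def embed(text: str) -> List[float]:
    """Toy stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vector = [0.0] * DIM
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vector[zlib.crc32(token.encode("utf-8")) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; vectors from embed() are unit length, so a dot product suffices."""
    return sum(x * y for x, y in zip(a, b))


def build_index(documents: List[str]) -> List[Tuple[str, List[float]]]:
    """Ingestion side: chunk every document and store (chunk, vector) pairs."""
    return [(chunk, embed(chunk)) for doc in documents for chunk in chunk_text(doc)]


def search(index: List[Tuple[str, List[float]]], query: str, k: int = 3) -> List[Tuple[float, str]]:
    """Query side: embed the query and return the k most similar chunks."""
    query_vector = embed(query)
    scored = [(cosine(query_vector, vector), chunk) for chunk, vector in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

The overlap between chunks keeps a sentence that straddles a chunk boundary retrievable from at least one chunk.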

- Agentforce topic classification and action orchestration: When a request (an alert or a direct query) arrives from Slack, Agentforce first performs NLP-driven topic classification, which routes the request to the appropriate set of instructions and actions. For example, a user asking “Summarize the design of application X” would trigger the “Search Confluence documentation” topic, while an alert for “Database connection error in application Y” would trigger the “Find error resolution for the application alert” topic.
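The routing idea can be sketched in Python. The two topic names come from the examples above, but the keyword lists, the `General` fallback topic, and the `classify` function are hypothetical: Agentforce performs NLP-driven classification, so keyword overlap here is only a simplified stand-in that shows how each request ends up mapped to exactly one topic and its instructions.

```python
import re
from typing import Dict, List

# Illustrative topic catalog. Topic names mirror the examples above; the keyword
# lists are assumptions standing in for Agentforce's NLP-driven classification.
TOPICS: Dict[str, List[str]] = {
    "Search Confluence documentation": ["summarize", "design", "documentation", "architecture", "docs"],
    "Find error resolution for the application alert": ["error", "alert", "exception", "failed", "timeout"],
}
FALLBACK_TOPIC = "General"  # Hypothetical catch-all when nothing matches


def classify(request: str) -> str:
    """Route a request to the topic whose keywords it overlaps most."""
    words = set(re.findall(r"[a-z]+", request.lower()))
    scores = {topic: len(words & set(keywords)) for topic, keywords in TOPICS.items()}
    best_topic = max(scores, key=lambda topic: scores[topic])
    return best_topic if scores[best_topic] > 0 else FALLBACK_TOPIC
```

With this sketch, “Summarize the design of application X” routes to the documentation topic and “Database connection error in application Y” routes to the error-resolution topic, matching the behavior described above.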

- Retrieval Augmented Generation (RAG) via Hybrid Search in Data Cloud:

A cornerstone of OpsAI Agent’s intelligence is its RAG capability, powered by hybrid search in Data Cloud. This is primarily handled by Apex code invoked by Agentforce actions, and the `DataCloudHybridSearch.cls` Apex class is a key component.

- The `performHybridSearch` invocable method: This method is designed to be called from Agentforce actions and takes a search query as input.

The `hybrid_search` function in Data Cloud combines traditional keyword search with semantic vector search, returning results ranked by a blended `hybrid_score__c`. The `DataCloudSearch.cls` class simply wraps the `ConnectApi.CdpQuery.queryAnsiSqlV2` call to execute this ANSI SQL against Data Cloud.

```apex
public with sharing class DataCloudHybridSearch {

    @TestVisible
    private static DataCloudSearchHelper helper = new DataCloudSearchHelper(); // Helper (not shown) for query execution

    public class FlowInput {
        @InvocableVariable(required=true)
        public String searchQuery;
    }

    @InvocableMethod(label='Get Hybrid Search Results'
                     description='Performs Hybrid search and returns the results for documentation from data cloud'
                     category='Data Cloud')
    public static List<List<String>> performHybridSearch(List<FlowInput> inputs) {
        List<List<String>> allResults = new List<List<String>>();

        for (FlowInput input : inputs) {
            List<String> searchResults = new List<String>();
            try {
                // Construct the SQL for hybrid search (single quotes inside the Apex string are escaped as \')
                String sql = 'select d.PageId__c as PageID, d.Text__c as Body, d.Title__c as Title, d.Url__c as URL, ' +
                    'c.Chunk__c, h.hybrid_score__c, h.keyword_score__c, h.vector_score__c ' +
                    'from hybrid_search(table(Int_EIP_index__dlm), \'' +            // Target Data Lake Object
                    String.escapeSingleQuotes(input.searchQuery) +                // User's query
                    '\', \'\', 100, \'{ "min_should_match": "-100%"}\') as h ' +   // hybrid_search parameters
                    'join Int_EIP_chunk__dlm as c on h.SourceRecordId__c = c.RecordId__c ' +
                    'join Int_EIP_dlm d ON c.SourceRecordId__c = d.PageId__c ' +
                    'ORDER BY h.hybrid_score__c desc limit 15';

                // Execute the query using the helper class ('default' is the data space)
                ConnectApi.CdpQueryOutputV2 output = helper.executeQuery(sql, 'default');

                for (ConnectApi.CdpQueryV2Row row : output.data) {
                    // rowData follows the SELECT order: 0 PageID, 1 Body, 2 Title, 3 URL, 4 Chunk__c, 5 hybrid_score__c
                    String resultString = 'Title: ' + (String) row.rowData[2] + '\n' +
                        'URL: ' + (String) row.rowData[3] + '\n' +
                        'Content: ' + (String) row.rowData[1] + '\n' + // Or c.Chunk__c for the specific chunk
                        'Score: ' + (Decimal) row.rowData[5];          // hybrid_score__c
                    searchResults.add(resultString);
                }
            } catch (Exception e) {
                System.debug('Error in hybrid search: ' + e.getMessage());
                searchResults.add('Error: ' + e.getMessage());
            }
            allResults.add(searchResults);
        }
        return allResults;
    }
}
```
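The query above asks `hybrid_search` for `keyword_score__c`, `vector_score__c`, and a blended `hybrid_score__c`. The article doesn’t say how Data Cloud computes that blend, so the Python sketch below uses one common approach purely as an assumption: min-max normalize each score list to [0, 1], then take a weighted sum controlled by a hypothetical weight `alpha`. It illustrates why a hybrid ranking can surface documents that either retriever alone would rank low; it does not reproduce Data Cloud’s formula.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class HybridResult:
    doc_id: str
    keyword_score: float  # Normalized to [0, 1]
    vector_score: float   # Normalized to [0, 1]
    hybrid_score: float


def min_max(values: List[float]) -> List[float]:
    """Rescale scores to [0, 1] so keyword and vector scores become comparable."""
    low, high = min(values), max(values)
    if high == low:
        return [1.0] * len(values)  # No spread: treat every candidate as equally relevant
    return [(v - low) / (high - low) for v in values]


def hybrid_rank(
    keyword_scores: Dict[str, float],
    vector_scores: Dict[str, float],
    alpha: float = 0.5,
    limit: int = 15,
) -> List[HybridResult]:
    """Blend scores as alpha * vector + (1 - alpha) * keyword, best first."""
    doc_ids = sorted(set(keyword_scores) | set(vector_scores))
    if not doc_ids:
        return []
    # A document found by only one retriever gets 0.0 from the other before normalization
    keyword = min_max([keyword_scores.get(d, 0.0) for d in doc_ids])
    vector = min_max([vector_scores.get(d, 0.0) for d in doc_ids])
    results = [
        HybridResult(d, k, v, alpha * v + (1 - alpha) * k)
        for d, k, v in zip(doc_ids, keyword, vector)
    ]
    results.sort(key=lambda r: r.hybrid_score, reverse=True)
    return results[:limit]  # Mirrors "ORDER BY hybrid_score__c desc limit 15"
```

Raising `alpha` favors semantic matches (helpful for paraphrased error descriptions), while lowering it favors exact keyword hits such as error codes or service names.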

- Applying instructions to context with the Atlas Reasoning Engine: The search results (relevant document chunks) retrieved by the hybrid search are then fed as context to the Atlas Reasoning Engine within Agentforce. This engine uses these retrieved chunks along with the specific instructions defined for the active topic.

For the Search Confluence Documentation topic, the instructions guide the engine to identify the application name and use the hybrid search results to summarize technical design, examples, important links, notable technical details, and related systems, formatted with headers and bullet points.
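Agentforce assembles the context for the Atlas Reasoning Engine internally, but the grounding pattern itself is simple. The Python sketch below is an assumption about the general shape of RAG prompt assembly, not Agentforce’s actual prompt: it combines paraphrased topic instructions, the ranked result strings returned by the Get Hybrid Search Results action (in the `Title`/`URL`/`Content`/`Score` format built by the Apex class), and the user’s request, stopping once a hypothetical character budget (`max_context_chars`) is reached. `build_grounded_prompt` and `CONFLUENCE_INSTRUCTIONS` are illustrative names.

```python
from typing import List

# Paraphrase of the documentation topic's instructions described above
CONFLUENCE_INSTRUCTIONS = (
    "Identify the application name. Using the search results, summarize the technical "
    "design, examples, important links, notable technical details, and related systems. "
    "Format the answer with headers and bullet points."
)


def build_grounded_prompt(
    instructions: str,
    user_request: str,
    results: List[str],
    max_context_chars: int = 4000,
) -> str:
    """Combine topic instructions, ranked search results, and the request into one prompt."""
    context_parts: List[str] = []
    used = 0
    for i, result in enumerate(results, start=1):
        block = f"[{i}] {result}"
        if used + len(block) > max_context_chars:
            break  # Results arrive best-first, so the lowest-ranked overflow is dropped
        context_parts.append(block)
        used += len(block)
    context = "\n\n".join(context_parts) if context_parts else "(no search results)"
    return (
        f"Instructions:\n{instructions}\n\n"
        f"Search results:\n{context}\n\n"
        f"Request:\n{user_request}"
    )
```

Numbering each result lets the model cite which chunk a statement came from, which makes the summary easier to verify against the linked pages.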

For the “Find error resolution for the application alert” topic, the instructions are more elaborate:

- First, identify the application name and alert details from the user’s input (<Error>).

- Then, explicitly trigger the hybrid search (the Get Hybrid Search Results action) to fetch related documentation and information from previous incident resolutions for that application.

- Finally, using the original error, the retrieved documentation, and the retrieved past incident resolutions as context, generate a structured response covering:

  - Root cause analysis

  - Resolution steps

  - Prevention measures

  - Related documentation (links)

  - Point of contact

These detailed, topic-specific instructions ensure that the Atlas Reasoning Engine doesn’t just perform a generic search, but instead synthesizes information in a way that is directly applicable to the operational task at hand, be it understanding documentation or resolving an active alert.
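The three-step flow for the error-resolution topic can be sketched in Python as a small pipeline that returns a typed report with the five required sections. In Agentforce, the search is the Get Hybrid Search Results action and the synthesis is done by the Atlas Reasoning Engine; in this sketch both are passed in as callables (`search`, `generate`) so they can be stubbed, and `ResolutionReport`, `resolve_alert`, and the Slack rendering are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ResolutionReport:
    """The five sections the error-resolution topic asks the engine to produce."""
    root_cause: str
    resolution_steps: List[str]
    prevention: List[str]
    related_docs: List[str] = field(default_factory=list)
    point_of_contact: str = "unknown"

    def to_slack_text(self) -> str:
        """Render as Slack mrkdwn (*bold* headers) for posting in the alert thread."""
        lines = ["*Root cause analysis*", self.root_cause, "", "*Resolution steps*"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.resolution_steps, start=1)]
        lines += ["", "*Prevention measures*"] + [f"- {item}" for item in self.prevention]
        lines += ["", "*Related documentation*"]
        lines += [f"- {url}" for url in self.related_docs] or ["- none found"]
        lines += ["", "*Point of contact*", self.point_of_contact]
        return "\n".join(lines)


def resolve_alert(
    application: str,
    error: str,
    search: Callable[[str], List[str]],
    generate: Callable[[str, str, List[str]], ResolutionReport],
) -> ResolutionReport:
    """Steps 2 and 3 of the topic; step 1 (extracting application and error) happens upstream."""
    # Step 2: retrieve documentation and past incident resolutions for this application
    context = search(f"{application} {error}")
    # Step 3: the reasoning step (an LLM call in practice, a stub in tests) writes the report
    return generate(application, error, context)
```

Keeping the five sections in a fixed structure makes the Slack reply predictable for on-call engineers and makes it obvious when a section, such as related documentation, could not be filled.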

Outcomes and learnings

The introduction of OpsAI Agent has yielded significant positive outcomes for Salesforce’s Ops teams. We’ve observed a measurable reduction in the median Time to Resolution for certain classes of incidents, and the assistant has also proven invaluable for onboarding new team members, providing them with an interactive way to query our knowledge bases and understand complex application designs. Knowledge discovery has improved, as previously hard-to-find information in Confluence or Google Drive is now surfaced contextually.

Building OpsAI Agent with Agentforce and Data Cloud has been a transformative initiative. By combining Agentforce’s AI orchestration and reasoning capabilities with Data Cloud’s robust data ingestion and hybrid search for RAG, all surfaced through a familiar Slack interface, we’ve created a powerful agent for our Ops teams. This solution not only helps resolve incidents faster, but it also empowers our engineers by making collective knowledge more accessible and actionable. As we continue to refine OpsAI Agent and expand its knowledge base and capabilities, we’re excited about the future of AI-augmented operations in enhancing efficiency, resilience, and engineering productivity.

Resources

- Documentation: Agent API Developer Guide

- Documentation: Data Cloud Connect API documentation

- Postman: Agent API Postman Collection

- Slack: Set up and manage Agentforce in Slack

About the authors

Sudhanshu Joshi is a Lead Software Engineer at Salesforce, focusing on building robust AI applications and scalable APIs that enable seamless integration and connectivity for Salesforce. He is a full-stack developer and enjoys working with LLMs, AI Agents, APIs, DevOps, and open source projects. Follow him on LinkedIn.

Sravan Kumar Vazrapu is a Senior Engineering Manager at Salesforce, spearheading innovation at the intersection of scalable enterprise APIs, data integrations and AI-driven solutions. With a proven track record of leading high-impact engineering teams, Sravan drives complex digital transformation solutions by empowering his engineering teams to deliver consistent, streamlined solutions that simplify integration challenges and accelerate business impact. Follow him on LinkedIn.

Shoban Kandala is a Senior Software Engineer at Salesforce, specializing in designing intelligent, scalable APIs and integration solutions leveraging MuleSoft and AI technologies. He operates at the forefront of enterprise connectivity and automation, enabling seamless data flow across complex systems. Passionate about harnessing AI agents and LLMs, Shoban drives innovation to simplify integration challenges and accelerate digital transformation. Follow him on LinkedIn.

The post How Salesforce Built a DevOps AI Agent with Agentforce and Data Cloud appeared first on Salesforce Developers Blog.