Traditional user experience (UX) work assumes you’re designing screens that will be built exactly as drawn. But generative development is changing that assumption entirely. With AI systems now able to assemble interfaces and create dynamic user experiences on demand, designers must move beyond familiar practices to embrace this fundamental change.

Instead of crafting single, finished designs, designers now have to understand how to build and guide systems that continuously generate new outcomes based on user needs and context. For an ontologist like me, taming complexity with structure and organization is the answer – and designers ought to lean in. Let’s unpack what that means.

Here’s what we’ll cover:

- What is generative development?
- Evolve from old UX practices
- Upskill to semantic thinking
- Immerse yourself in ontology
- Design for modular systems, not screens
- Embrace being a curator

What is generative development?

At its core, generative development means designing experiences that adapt, respond, and even co-create with the user. These aren’t static or scripted experiences. Instead, they’re powered by models that generate new content, responses, or pathways based on user input, behavior, or context. It’s a shift from designing for every state to designing for a range of possibilities.

In this rules-over-pixels world, a design system supplies the visual library, and an ontology (structured knowledge) supplies the logic model that teaches machines how to combine those visuals dynamically.

Here’s how it works: AI draws from design tokens (or styling hooks), components, and knowledge-base content to create surfaces that adapt to any context in real time. Design tokens provide the visual building blocks – shape, color, spacing – while ontology gives those elements meaning and purpose. When the system understands that a “PrimaryButton” signals importance and a “QuietLink” offers subtle navigation, it can make intelligent decisions about which element fits each moment.
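To make the split between tokens and meaning concrete, here is a minimal Python sketch. Everything in it is hypothetical: `TOKENS`, `COMPONENTS`, the `role` and `emphasis` fields, and `pick_component` are illustrative names, not any real design-system API. The token table holds raw visual values, and the component registry records what each element is for, so the selection step asks "what does this moment need?" rather than "what looks right?"

```python
from dataclasses import dataclass

# Hypothetical design tokens: raw visual values with no meaning attached.
TOKENS = {
    "color.brand.primary": "#0B5CAB",
    "color.text.subtle": "#5C5C5C",
    "spacing.medium": "16px",
}

@dataclass(frozen=True)
class Component:
    name: str
    role: str        # what the element is for (its semantics)
    emphasis: str    # "high" or "low" importance
    tokens: tuple    # which design tokens the component may use

# Hypothetical semantic registry: the layer that gives tokens meaning.
COMPONENTS = [
    Component("PrimaryButton", role="action", emphasis="high",
              tokens=("color.brand.primary", "spacing.medium")),
    Component("QuietLink", role="navigation", emphasis="low",
              tokens=("color.text.subtle",)),
]

def pick_component(role: str, emphasis: str) -> Component:
    """Return the first registered component whose meaning fits the moment."""
    for component in COMPONENTS:
        if component.role == role and component.emphasis == emphasis:
            return component
    raise LookupError(f"no component for role={role!r}, emphasis={emphasis!r}")

def resolve_styles(component: Component) -> dict:
    """Look up the concrete token values the chosen component is allowed to use."""
    return {token: TOKENS[token] for token in component.tokens}
```

Calling `pick_component("navigation", "low")` selects `QuietLink`, and `resolve_styles` then returns only the subtle text color it is permitted to use. The generator never has to guess from appearance; the meaning is stored alongside the visuals.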

Designing for adaptability is new territory that includes shaping system behavior, writing effective prompts, defining tone and personality, and building in ethical guardrails that ensure AI-generated content is helpful, relevant, and safe.

Evolve from old UX practices

Designing systems that understand both what something is and how it should behave radically changes how UX teams work. So, what does it mean to navigate generative development practices as a designer?

Here are some ways your work is transforming:

| Then | Now | Example |
|---|---|---|
| Design a fixed artifact. | Design the rules, constraints, and semantics that let the system generate many artifacts. | Instead of drawing a single onboarding screen, define an OnboardingFlow class with rules such as “show WelcomeStep only once” and “skip AddressStep if ShippingCountry is blank.” The generator then assembles the right sequence for each user. |
| Hand off as pixels. | Hand off as ontology additions, design tokens, and test assertions. | Deliver a Turtle snippet that declares Checkout.TotalPriceCard, its required children (hasPrice, hasCTA), and the color-scale tokens it must use, plus a constraint test that fails if any child is missing. |
| Focus on page-level polish. | Focus on component semantics, adaptability, and governance. | Rather than tweaking hero spacing for a marketing page, model a PromoBanner container that knows it can swap images or text based on Locale and CampaignPriority, guaranteeing consistent behavior across every surface. |
| Accessibility check at the end. | Accessibility rules are encoded in the ontology, so every generated view is compliant by construction. | The ontology asserts PrimaryButton hasAriaLabel exactly 1. During continuous integration, a reasoning test blocks any layout where a button lacks its aria-label, catching the issue in advance. |
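The first row of the table can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `UserContext` fields (`seen_welcome`, `shipping_country`) and the extra `ProfileStep` and `DoneStep` steps are hypothetical. The point is that the designer's deliverable is the ordered list of steps plus their rules, and a small generator applies them per user.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class UserContext:
    seen_welcome: bool      # hypothetical: has this user already seen WelcomeStep?
    shipping_country: str   # hypothetical: an empty string counts as "blank"

@dataclass
class Step:
    name: str
    include_if: Callable[[UserContext], bool]   # rule deciding whether the step applies

# The designer's deliverable: ordered steps plus their rules (ProfileStep/DoneStep are illustrative).
ONBOARDING_FLOW: List[Step] = [
    Step("WelcomeStep", lambda u: not u.seen_welcome),                 # show only once
    Step("ProfileStep", lambda u: True),
    Step("AddressStep", lambda u: u.shipping_country.strip() != ""),   # skip if blank
    Step("DoneStep", lambda u: True),
]

def assemble(flow: List[Step], user: UserContext) -> List[str]:
    """Generate one user's step sequence by applying each rule in declared order."""
    return [step.name for step in flow if step.include_if(user)]
```

For a first-time user with no shipping country, `assemble` yields WelcomeStep, ProfileStep, and DoneStep; a returning user with a country set gets ProfileStep, AddressStep, and DoneStep. No one drew either sequence by hand.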

Upskill to semantic thinking

Growing into these new responsibilities starts with thinking semantically. Instead of just asking whether a button looks good, you’ll need to ask whether the system knows what the button is for, where it belongs, and how it should behave across contexts. That shift lets you plan for scalable, generative design in which AI systems assemble and adapt experiences in real time with accuracy, consistency, and intent.

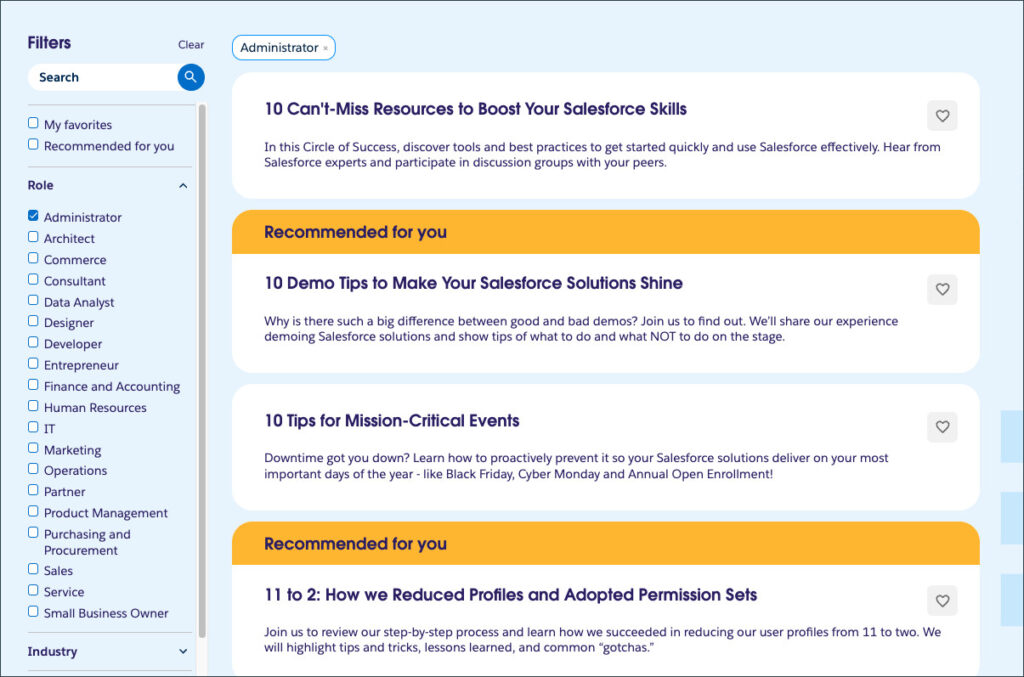

In your review cycles, shift the focus from static mockups to dynamic scenarios. For example, teams can run component designs through a validation suite that surfaces any ontology rule violations, which shows how the system performs across different scenarios and contexts.
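A validation suite of this kind can be sketched as cardinality rules checked against every generated view. This minimal Python version is a stand-in for a real constraint engine, not a description of any specific tool: the view format (a list of dicts with a `type` key and lists of property values) and the rule tables are assumptions. It encodes rules mentioned in this article: a PrimaryButton has exactly one aria-label, a Toast has exactly one message, and a view has at most one PrimaryButton.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical view format: each element has a "type" and a list of values per property.
Element = Dict[str, object]

# Property cardinality rules: (element type, property) -> (min, max)
PROPERTY_RULES = {
    ("PrimaryButton", "hasAriaLabel"): (1, 1),
    ("Toast", "hasMessage"): (1, 1),
}
# View-level rules: element type -> maximum count in a single view
VIEW_RULES = {"PrimaryButton": 1}

def validate(view: List[Element]) -> List[str]:
    """Return human-readable violations; an empty list means the view conforms."""
    violations = []
    for index, element in enumerate(view):
        for (etype, prop), (low, high) in PROPERTY_RULES.items():
            if element["type"] != etype:
                continue
            count = len(element.get(prop, []))
            if not low <= count <= high:
                violations.append(
                    f"element {index} ({etype}): {prop} has {count} value(s), expected {low}..{high}"
                )
    counts = Counter(element["type"] for element in view)
    for etype, limit in VIEW_RULES.items():
        if counts[etype] > limit:
            violations.append(f"view has {counts[etype]} {etype}s, max is {limit}")
    return violations
```

In a production pipeline, property rules like these would more likely be written as SHACL shapes using `sh:minCount` and `sh:maxCount` and run in continuous integration; the sketch only shows the shape of the check.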

Moving forward, it’s essential to equip designers with semantic tools and ontological frameworks that help teams move from creating style guides to developing system guidance. It’s not just about making things look right. It’s about making sure they mean the right thing, every time.

Immerse yourself in ontology

Generative AI needs structure to create meaningful design experiences. That’s why I advocate for ontology.

An ontology structures concepts and information by describing classes, properties, attributes, relationships, and logical axioms. Ontologies serve as the data models for knowledge graphs, keeping terms consistent and interpretable across systems. Put simply, an ontology organizes information so machines can understand it.
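To show what classes, relationships, and an axiom look like as data, here is a toy knowledge graph in Python. It is a simplified stand-in for a real RDF store. The `rdf:type` and `rdfs:subClassOf` IRIs are the standard W3C ones, while everything under the `https://example.com/ui#` namespace is hypothetical. Facts are stored as subject-predicate-object triples, and one axiom (subclass membership is transitive) lets the system infer facts no one wrote down.

```python
from typing import Set, Tuple

UI = "https://example.com/ui#"   # hypothetical namespace for illustration
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
SUBCLASS_OF = "http://www.w3.org/2000/01/rdf-schema#subClassOf"

Triple = Tuple[str, str, str]

# Hypothetical facts about a small UI vocabulary and where one module is used.
graph: Set[Triple] = {
    (UI + "PrimaryButton", SUBCLASS_OF, UI + "Button"),
    (UI + "Button", SUBCLASS_OF, UI + "InteractiveElement"),
    (UI + "checkoutPayButton", RDF_TYPE, UI + "PrimaryButton"),
    (UI + "checkoutPage", UI + "contains", UI + "checkoutPayButton"),
    (UI + "cartPage", UI + "contains", UI + "checkoutPayButton"),
}

def superclasses(cls: str) -> Set[str]:
    """All classes reachable from cls through subClassOf (transitive closure)."""
    found, frontier = set(), [cls]
    while frontier:
        current = frontier.pop()
        for s, p, o in graph:
            if s == current and p == SUBCLASS_OF and o not in found:
                found.add(o)
                frontier.append(o)
    return found

def types_of(instance: str) -> Set[str]:
    """Asserted types plus every type inferred through the subclass axiom."""
    asserted = {o for s, p, o in graph if s == instance and p == RDF_TYPE}
    inferred = set()
    for cls in asserted:
        inferred |= superclasses(cls)
    return asserted | inferred

def used_in(module: str) -> Set[str]:
    """Reverse lookup answering 'where else is this module used?'"""
    return {s for s, p, o in graph if p == UI + "contains" and o == module}
```

Only `PrimaryButton` is asserted for `checkoutPayButton`, yet `types_of` also reports `Button` and `InteractiveElement`. Because every module has a stable IRI, `used_in` can trace reuse across pages.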

Here’s how ontology supports generative development:

- Common language: It creates a common language or vocabulary. When every service, agent, and tool refers to “Button,” “Toast,” or “CheckoutIntent” the same way, systems can communicate effectively across your product ecosystem.

- Logical constraints: Constraints bring structure to what the system can generate. You can declare, for example, that a “Toast” must reference exactly one message, or that a “PrimaryButton” should appear only once per view.

- Constraint language: In practice, we encode constraints like these in SHACL (Shapes Constraint Language), which flags any generated toast missing its text before it reaches production. These constraints make it easier to generate interfaces that are valid, usable, and aligned with real-world needs.

- Traceability and explainability: Every module carries an IRI (Internationalized Resource Identifier), giving it a stable, searchable identity across software builds and repositories. By assigning “a fingerprint” to design modules, an ontology makes it easy to track reuse (“Where else is this pricing table used?”) and impact (“What other flows inherited this change?”).

- Clarity: Because every layout is built from explicit, structured relationships, the system can tell you why it made a decision. “DeviceType equals mobile, so we inferred a CardStack layout.” That level of clarity matters when you’re collaborating with AI.
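The kind of explanation in the Clarity point comes almost for free if every inference records the rule that produced it. Here is a minimal Python sketch. `DeviceType`, `mobile`, and `CardStack` come from the example above; the `LayoutRule` type, the `Grid` and `SingleColumn` layouts, and the first-match-wins policy are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class LayoutRule:
    fact: str     # context property the rule inspects, e.g. "DeviceType"
    value: str    # value that triggers the rule
    layout: str   # layout class the rule infers

# Hypothetical rules; the first match wins, so list order expresses priority.
RULES: List[LayoutRule] = [
    LayoutRule("DeviceType", "mobile", "CardStack"),
    LayoutRule("DeviceType", "desktop", "Grid"),
]
DEFAULT_LAYOUT = "SingleColumn"

def infer_layout(context: Dict[str, str]) -> Tuple[str, str]:
    """Return (layout, explanation) so every decision carries its reason."""
    for rule in RULES:
        if context.get(rule.fact) == rule.value:
            reason = f"{rule.fact} equals {rule.value}, so we inferred a {rule.layout} layout."
            return rule.layout, reason
    return DEFAULT_LAYOUT, f"No rule matched, so we fell back to {DEFAULT_LAYOUT}."
```

Calling `infer_layout({"DeviceType": "mobile"})` returns `CardStack` together with the sentence "DeviceType equals mobile, so we inferred a CardStack layout." That sentence is the kind of trace a reviewer can check.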

Design for modular systems, not screens

It’s not news that generative AI needs building blocks that can adapt to any given context. That means designing around modular content containers. Every user interface (UI) element becomes a container with a defined structure and logical constraints. A pricing card, for example, gets a class like Checkout.TotalPriceCard, with required children such as hasPrice and hasCTA, plus rules governing their order and limits.

Codified in Turtle-style notation, it looks like this:

`Checkout.TotalPriceCard a UIContainer; hasPrice PriceField; hasCTA CheckoutCTA.`

These containers stay flexible enough to recombine across contexts and are structured enough for systems to understand their purpose.
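The required-children, ordering, and limit rules for a container like Checkout.TotalPriceCard can be sketched in Python as follows. This is a hypothetical illustration rather than an ontology engine. `ContainerSpec` and `check_container` are invented names, the child limit of 3 is an assumption, and generated children are represented simply as property names in render order.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class ContainerSpec:
    name: str
    required: Tuple[str, ...]   # child properties that must be present, in this order
    max_children: int           # upper limit on the number of children

# Hypothetical spec for Checkout.TotalPriceCard; the limit of 3 is invented for illustration.
TOTAL_PRICE_CARD = ContainerSpec(
    name="Checkout.TotalPriceCard",
    required=("hasPrice", "hasCTA"),
    max_children=3,
)

def check_container(spec: ContainerSpec, children: List[str]) -> List[str]:
    """Validate a generated instance's children, given as property names in render order."""
    problems = []
    # Rule 1: every required child must be present.
    for prop in spec.required:
        if prop not in children:
            problems.append(f"{spec.name} is missing required child {prop}")
    # Rule 2: required children that are present must appear in the declared order.
    positions = [children.index(prop) for prop in spec.required if prop in children]
    if positions != sorted(positions):
        problems.append(f"{spec.name} children out of order, expected {list(spec.required)}")
    # Rule 3: the container may not exceed its child limit.
    if len(children) > spec.max_children:
        problems.append(f"{spec.name} has {len(children)} children, max is {spec.max_children}")
    return problems
```

Optional extras can still appear between the required children, which keeps the container flexible. A missing CTA, a CTA placed before the price, or an overstuffed card is reported before the layout ships.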

Take Duolingo’s Max subscription. Instead of predetermined lessons, the AI adapts to what learners say and do, responding with contextual guidance and dynamic conversations. That adaptation relies on classes like LessonIntent, ErrorFeedback, and ProficiencyLevel, which the ontology uses to pick the next prompt in real time. The experience becomes a fluid exchange, not a fixed path.

In the ontology, design tokens live as data properties that travel with their components, which keeps styling consistent wherever a component appears. This approach also lets the system flag conflicts, warning you when two components claim the same token name but define different values.
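Here is a minimal Python sketch of that conflict check, assuming each component carries its tokens as a simple name-to-value mapping. The component definitions and hex values are hypothetical. The function groups every token name by value and reports the names that resolve to more than one value, along with the components responsible.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical component definitions, each carrying its tokens as data properties.
components: Dict[str, Dict[str, str]] = {
    "PrimaryButton": {"color.brand.primary": "#0B5CAB", "radius.button": "4px"},
    "PromoBanner": {"color.brand.primary": "#0B5CAB"},
    "QuietLink": {"color.brand.primary": "#1B96FF"},   # same name, different value
}

def find_token_conflicts(defs: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, List[str]]]:
    """Map each token name defined with more than one value to {value: [components]}."""
    by_token: Dict[str, Dict[str, List[str]]] = defaultdict(lambda: defaultdict(list))
    for component, tokens in defs.items():
        for name, value in tokens.items():
            by_token[name][value].append(component)
    return {name: dict(values) for name, values in by_token.items() if len(values) > 1}
```

Here only `color.brand.primary` is reported: PrimaryButton and PromoBanner agree on one value, while QuietLink defines another, so a designer can decide which value is canonical.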

Design for systems instead of screens, and you enable experiences that are responsive to user intent and built for change.

Embrace being a curator

To thrive with generative development, designers, ontologists, and engineers need to collaborate. Design workshops become working sessions where you capture the rules, constraints, and examples that teach machines how to think about design. This creates a shared language between humans and AI, so the system understands not just what to show users, but why and when to show it.

Designers become curators, checking AI-generated screens for edge cases, tone problems, and accessibility issues. Create a review process where every new component gets added to the system’s knowledge base first, checked for conflicts, and only then made available for the AI to use.
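That review process (add to the knowledge base, check for conflicts, then release) can be sketched as a two-stage registry. The `KnowledgeBase` class and its methods below are hypothetical and stand in for whatever store a team actually uses. The only check modeled here is token-value conflicts against already-published components.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class KnowledgeBase:
    # Hypothetical two-stage registry: candidates are recorded first, published later.
    pending: Dict[str, Dict[str, str]] = field(default_factory=dict)
    published: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def propose(self, name: str, tokens: Dict[str, str]) -> None:
        """Step 1: add the component to the knowledge base, not yet usable by the AI."""
        self.pending[name] = tokens

    def conflicts(self, name: str) -> List[str]:
        """Step 2: compare the candidate's tokens with every published component."""
        issues = []
        for token, value in self.pending[name].items():
            for other, other_tokens in self.published.items():
                if token in other_tokens and other_tokens[token] != value:
                    issues.append(
                        f"{name}.{token}={value} conflicts with {other}.{token}={other_tokens[token]}"
                    )
        return issues

    def publish(self, name: str) -> List[str]:
        """Step 3: release to the generator only if no conflicts were found."""
        issues = self.conflicts(name)
        if not issues:
            self.published[name] = self.pending.pop(name)
        return issues

    def available_to_generator(self) -> List[str]:
        """Only published components are visible to the generator."""
        return sorted(self.published)
```

A component that fails the check stays in `pending`, where a curator can review it. The generator keeps working from the approved set in the meantime.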

Most importantly, measure success by what the system understands, not just what it produces. Run tests to make sure that in any situation, the system can create layouts that actually work for users. When you focus on teaching systems the rules of good design instead of just making things look good, you enable design that gets smarter and scales effortlessly.
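One way to run tests "in any situation" is to sweep every combination of context values and assert that each generated layout passes the same acceptance checks. The Python sketch below is hypothetical throughout. `generate_layout` is a stand-in for a real generator, and the device and locale lists and the acceptance criteria (exactly one labeled PrimaryButton, plus a price) are assumptions chosen to echo rules discussed earlier.

```python
from itertools import product
from typing import Dict, List

# Hypothetical context dimensions to sweep.
DEVICES = ["mobile", "tablet", "desktop"]
LOCALES = ["en-US", "de-DE", "ja-JP"]

def generate_layout(device: str, locale: str) -> Dict[str, object]:
    """Stand-in for the real generator: returns a simple layout description."""
    layout = "CardStack" if device == "mobile" else "Grid"
    return {
        "layout": layout,
        "elements": [
            {"type": "PriceField", "locale": locale},
            {"type": "PrimaryButton", "ariaLabel": "Checkout"},
        ],
    }

def is_usable(layout: Dict[str, object]) -> bool:
    """Example acceptance checks: exactly one labeled PrimaryButton and a price."""
    elements = layout["elements"]
    buttons = [e for e in elements if e["type"] == "PrimaryButton"]
    has_price = any(e["type"] == "PriceField" for e in elements)
    return len(buttons) == 1 and bool(buttons[0].get("ariaLabel")) and has_price

def sweep() -> List[str]:
    """Try every context combination and report the ones that fail."""
    failures = []
    for device, locale in product(DEVICES, LOCALES):
        if not is_usable(generate_layout(device, locale)):
            failures.append(f"{device}/{locale}")
    return failures
```

An empty result from `sweep()` means every device and locale pairing produced a layout that passed. Adding a new context dimension only extends the product, not the test code.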