Boosting LLM Performance with Tiered KV Cache on Google Kubernetes Engine

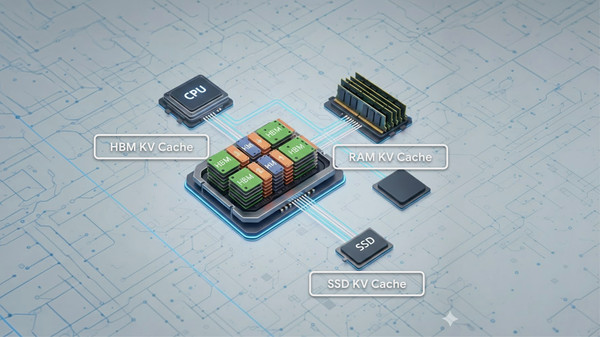

Large Language Models (LLMs) are powerful, but their performance can be bottlenecked by the large NVIDIA GPU memory footprint of the Key-Value (KV) Cache. This cache speeds up LLM inference by storing the attention Key (K) and Value (V) matrices of previously processed tokens so they are not recomputed at every decoding step. Because it grows with every token held, its size directly limits context length, concurrency, and overall system throughput. Our primary goal is to maximize […]
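To see why the KV cache limits context length and concurrency, it helps to estimate its size. A standard back-of-the-envelope estimate stores one K and one V vector per layer, per KV head, per token. The sketch below is illustrative only: the model dimensions (32 layers, 8 KV heads, head dimension 128, 16-bit precision) and the 40 GiB of free GPU memory are hypothetical values, not figures from this post, and the helper names are invented for this example.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size, bytes_per_elem=2):
    """Estimate bytes needed to hold K and V for every layer, KV head, and token."""
    # Factor of 2: one tensor for Keys and one for Values.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem
    return per_token * seq_len * batch_size


def max_concurrent_sequences(free_gpu_bytes, num_layers, num_kv_heads, head_dim,
                             seq_len, bytes_per_elem=2):
    """How many full-length sequences fit in the given GPU memory budget."""
    per_seq = kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, 1, bytes_per_elem)
    return free_gpu_bytes // per_seq


if __name__ == "__main__":
    GiB = 2**30
    # Hypothetical model shape, 16-bit (2-byte) cache entries.
    per_seq = kv_cache_bytes(num_layers=32, num_kv_heads=8, head_dim=128,
                             seq_len=8192, batch_size=1)
    print(per_seq / GiB)  # 1.0 GiB per 8,192-token sequence
    print(max_concurrent_sequences(40 * GiB, 32, 8, 128, 8192))  # 40
```

Under these assumptions each 8,192-token sequence needs 1 GiB of cache, so doubling the context length halves the number of sequences that fit. This is the pressure a tiered KV cache aims to relieve by keeping part of the cache in a larger, slower memory tier instead of GPU memory.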
