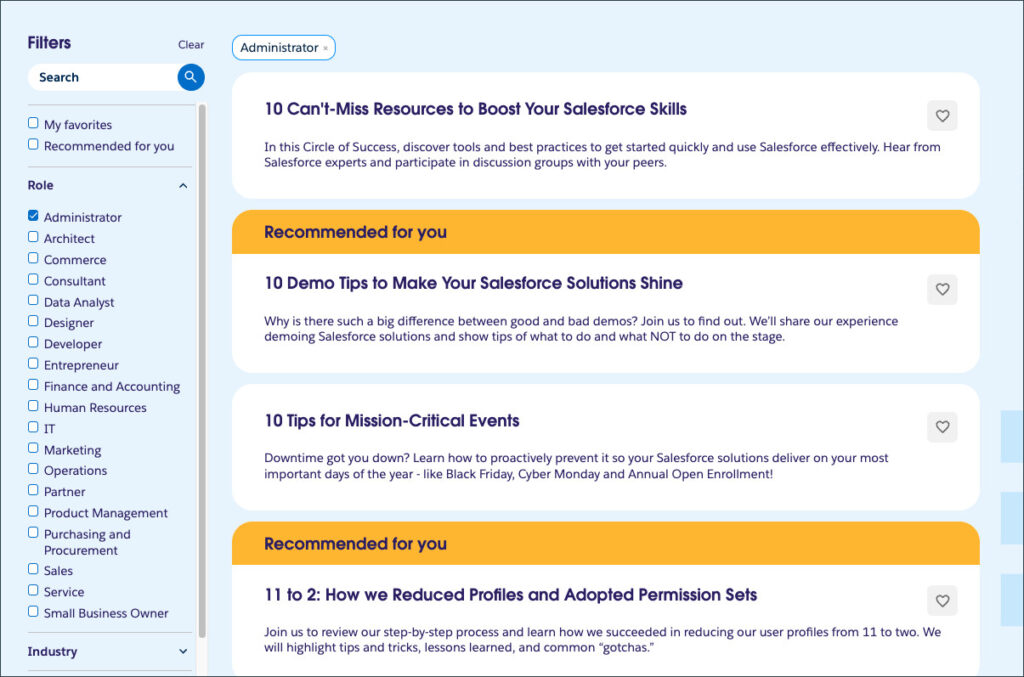

The Customer Success Scorecard (CSS) helps our customers better understand their use of Salesforce products. Whether it’s implementation details or license activations, you can track progress over time and drill down into specific areas of your organization’s performance.

As you can imagine, this takes a lot of data: telemetry, business metrics, CRM data, and more. Data Cloud lets us bring all of this data into one place, where we can run machine learning models to give meaning to this data.

One question we get all the time from customers is, “How do we build our own Customer Success Scorecard?” While we’ve been happy to share our journey in past posts, this is the first time we are showing everything you need in step-by-step detail to set up a miniature version of CSS from scratch!

Let’s start with a manifest of everything we will present here:

A simplified version of our scoring algorithm built in Python

Infrastructure code to spin up all necessary AWS services

A simulation of a simple AWS use case with data in S3 and models in Amazon SageMaker

A tutorial on streaming our data to Data Cloud and connecting our model to Einstein Studio (below)

Some of this falls outside the purview of a Salesforce Developer. But don’t worry! All of these pieces have been automated such that you don’t need any specific background to follow along. That said, we’ll give you an overview of the components, so you can see the workflow of a data science project and bring this knowledge to your own development.

Architecture

Before diving into the technical details, let’s go over the big picture. This is a greatly simplified version of the real CSS behind the scenes.

We store a lot of information in Salesforce itself, which is synced to Data Cloud, while telemetry data and product data flow in from S3.

From there, we can trigger prediction jobs in Einstein Studio when the data changes.

These jobs take the new data and send it to AWS, where we have our custom model that responds with a prediction.

The predictions are stored again in Data Cloud. On the AWS side, we have an API gateway that acts as an authentication proxy for our model hosted in SageMaker endpoints.

How does this architecture serve us? There are three key elements that drive value for us. Firstly, our source of truth is defined in a single place: Data Cloud. Secondly, we bring our model to the data. This means that we aren’t moving large amounts of data back and forth between different environments just for predictions; we only predict on records that have changed. Lastly, our mechanism for delivering values exists within our CRM. Putting these predictions back into Salesforce lets us use them in the flow of work shortly after they are emitted. This drives consistency and allows us to act quickly on our insights.

Step 1: Build the ML back end in AWS

Salesforce offers some awesome tools to build no-code machine learning models, but sometimes we need to define bespoke models that we still want to use in Salesforce — such as the one outlined above. Fortunately, Einstein Studio lets you bring your own model into Data Cloud. You can connect data from Salesforce to other ML platforms, such as Amazon SageMaker, and Einstein Studio handles the passing of data to and from the low-level APIs.

Let’s get started by walking through a Jupyter notebook, the most common data science interface, that we provide in our codebase. There are detailed instructions in the README to set up your environment and run the code. Return to this blog post after the code has been executed!

Step 2: Connect data to Data Cloud

We’ll start with a custom object in Sales Cloud called AccountMini that will store some sample account information: age and size. These values are randomly generated, and each record has a name that aligns with our data in S3. In our case, we manually loaded the first 5,000 records from peer_dims.csv in the repository as sample data. If you are following along exactly, we recommend that you do the same.

Now, we’ll load this data into Data Cloud. We’ll create a new data stream and select Salesforce CRM.

Set the category to Profile and make sure that the types for age and size are both set to Number.

After clicking through the wizard, you will have a data stream object and a data lake object (DLO). Create a data model object by mapping the values in the DLO to a custom data model object (DMO).

We’ll now set up two data streams corresponding to the two S3 objects that we set up in the AWS portion of the tutorial. First, we need to configure the S3 connection in the Data Cloud Setup.

Navigate to Other Connectors and New, where you will find the option for a new S3 connection.

Next, we’ll walk through the data stream for product_data (the process for usage_data is identical). Create a new data stream and, this time, select Amazon S3 as the source.

Enter the following values to configure the stream:

Connection: CSSMiniBucketConnection

Bucket name: css-mini-123456789 (replace with the bucket from the AWS demo)

File details: CSV

Directory: product_metrics/

File name: *.csv

Source: AwsS3_CSSMiniBucketConnection

Data lake object label: product_metrics

Category: Profile

Primary key: acct_id

Record modified field: last_updated

Refresh mode: Upsert

Frequency: 5 Minutes

You can map your fields to a new DMO as you did for the CRM object. No special configuration is required there. You can then repeat the process for the second S3 source: usage_data.csv.
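To make the Upsert refresh mode more concrete, here is a minimal Python sketch of how an upsert keyed on acct_id, with last_updated as the record modified field, might behave. It illustrates the general idea only and is not Data Cloud’s implementation; the `upsert` function, the sample rows, and the assumption that last_updated holds ISO-8601 strings are all hypothetical.

```python
from typing import Dict, Iterable

Row = Dict[str, str]


def upsert(store: Dict[str, Row], incoming: Iterable[Row],
           key: str = "acct_id", modified: str = "last_updated") -> Dict[str, Row]:
    """Insert new rows and overwrite existing rows that carry a newer timestamp.

    Assumes timestamps are ISO-8601 strings, which compare correctly as text.
    """
    for row in incoming:
        current = store.get(row[key])
        if current is None or row[modified] > current[modified]:
            store[row[key]] = row
    return store


# Hypothetical sample rows, not taken from the repository.
store = {
    "A1": {"acct_id": "A1", "last_updated": "2024-01-01T00:00:00", "activation_rate": "0.4"},
}
batch = [
    {"acct_id": "A1", "last_updated": "2024-01-02T00:00:00", "activation_rate": "0.5"},
    {"acct_id": "A2", "last_updated": "2024-01-02T00:00:00", "activation_rate": "0.9"},
]
upsert(store, batch)
```

After the call, the existing A1 row holds the newer values and A2 has been added, so the store never contains two rows for the same acct_id.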

With our data sources streaming in, we will create relationships between the DMOs such that we can relate each object to the other. Navigate to the Data Model tab and select our AccountMini DMO. Within Relationships, define two new relationships to our S3 streams. These should be 1:1.
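A 1:1 relationship only holds if each key appears at most once on both sides. As a quick sanity check before loading data, a sketch like the following could flag duplicate keys in a CSV export. It assumes acct_id is the join key (it is the primary key configured for the S3 stream above); the `duplicate_keys` helper and the sample rows are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List


def duplicate_keys(rows: Iterable[Dict[str, str]], key: str = "acct_id") -> List[str]:
    """Return key values that occur more than once, which would break a 1:1 relationship."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


# Hypothetical rows standing in for one of the S3-backed objects.
product_rows = [{"acct_id": "A1"}, {"acct_id": "A2"}, {"acct_id": "A2"}]
print(duplicate_keys(product_rows))  # prints ['A2']
```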

And now, our data is fully configured! Feel free to use the Data Explorer to query your data and get a feel for the information we are pulling in.

Bring your model to Einstein Studio

This section shows how we can receive our CSS Scores within Salesforce. We will configure the model and create a prediction job. Let’s get started!

Start by selecting the Einstein Studio tab and creating a new object. For the type of model, select Connect an Amazon SageMaker model.

Add CSS Mini as the name of the endpoint. For the URL and secret key, copy your API URL and API Secret Token from the Jupyter notebook. We’re choosing this pattern for simplicity; in production, we recommend more advanced security measures like OAuth or JWT. We’ll send JSON to the endpoint and receive JSON back.

On the next page, we’ll map our inputs and outputs. This can be a little time-consuming, so we’ll list these as a table where you can copy and paste data into fields. First is inputs (remember that order matters here!).

| Name | API Name (Autofilled) | Type |
| --- | --- | --- |
| acct_age | acct_age | Number |
| acct_size | acct_size | Number |
| activation_rate | activation_rate | Number |
| bots | bots | Number |
| email2case | email2case | Number |
| engagement_rate | engagement_rate | Number |
| lightning_utilization | lightning_utilization | Number |
| ltnflows | ltnflows | Number |
| macro | macro | Number |
| penetration_rate | penetration_rate | Number |
| quicktext | quicktext | Number |
| utilization_rate | utilization_rate | Number |
| web2case | web2case | Number |
| workflowrules | workflowrules | Number |
| workflows | workflows | Number |
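Because the order of the inputs matters, it can help to keep the list in one place and build every row from it. The sketch below is an illustration under that assumption: `INPUT_ORDER` copies the names from the input table above, while the `to_ordered_row` helper and the sample record are hypothetical and not part of the repository.

```python
from typing import Dict, List

# Input names in the order listed in the input table above.
INPUT_ORDER: List[str] = [
    "acct_age", "acct_size", "activation_rate", "bots", "email2case",
    "engagement_rate", "lightning_utilization", "ltnflows", "macro",
    "penetration_rate", "quicktext", "utilization_rate", "web2case",
    "workflowrules", "workflows",
]


def to_ordered_row(record: Dict[str, float]) -> List[float]:
    """Arrange a record's values in the model's expected input order."""
    missing = [name for name in INPUT_ORDER if name not in record]
    if missing:
        raise KeyError(f"missing inputs: {missing}")
    return [record[name] for name in INPUT_ORDER]


# Hypothetical record whose keys arrive in reverse order.
record = {name: float(i) for i, name in enumerate(reversed(INPUT_ORDER))}
row = to_ordered_row(record)
```

Whatever order the keys arrive in, the resulting row always starts with acct_age and ends with workflows.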

For outputs, you should have the following. The order does not matter here because of how the response JSON is formatted. For brevity, we exclude API Name, which is auto-filled.

| Name | Type | JSON Key |
| --- | --- | --- |
| consumption_engagement_rate_score | Number | $.predictions[*].consumption_engagement_rate_metric_score |
| consumption_activation_rate_score | Number | $.predictions[*].consumption_activation_rate_metric_score |
| consumption_penetration_rate_score | Number | $.predictions[*].consumption_penetration_rate_metric_score |
| consumption_utilization_rate_score | Number | $.predictions[*].consumption_utilization_rate_metric_score |
| auto_bots_score | Number | $.predictions[*].auto_bots_metric_score |
| auto_email2case_score | Number | $.predictions[*].auto_email2case_metric_score |
| auto_ltnflows_score | Number | $.predictions[*].auto_ltnflows_metric_score |
| auto_macro_score | Number | $.predictions[*].auto_macro_metric_score |
| auto_quicktext_score | Number | $.predictions[*].auto_quicktext_metric_score |
| auto_web2case_score | Number | $.predictions[*].auto_web2case_metric_score |
| auto_workflowrules_score | Number | $.predictions[*].auto_workflowrules_metric_score |
| auto_workflows_score | Number | $.predictions[*].auto_workflows_metric_score |
| lightning_utilization_score | Number | $.predictions[*].lightning_lightning_utilization_metric_score |
| global_score | Number | $.predictions[*].global_score |
| auto_component_score | Number | $.predictions[*].auto_component_score |
| consumption_component_score | Number | $.predictions[*].consumption_component_score |
| lightning_component_score | Number | $.predictions[*].lightning_component_score |
| last_modified | String/Text | $.predictions[*].last_modified |
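The JSON keys above all follow the pattern `$.predictions[*].<key>`, which selects one value per prediction by name. That is why output order does not matter. Here is a minimal sketch of the same lookup in plain Python; the `extract` helper and the response body are hypothetical, and the values are made up for illustration.

```python
import json
from typing import Any, Dict, List


def extract(response: Dict[str, Any], key: str) -> List[Any]:
    """Mimic the JSON key `$.predictions[*].<key>`: one value per prediction."""
    return [prediction[key] for prediction in response["predictions"]]


# Hypothetical response body; only two of the output keys are shown.
body = (
    '{"predictions": ['
    '{"global_score": 71.5, "last_modified": "2024-01-02"}, '
    '{"global_score": 64.0, "last_modified": "2024-01-03"}]}'
)
response = json.loads(body)
print(extract(response, "global_score"))  # prints [71.5, 64.0]
```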

After saving, your model is ready to use in production! You can even access it in other Salesforce automation tooling like Flows. To mimic CSS, we want our source of truth to exist in Data Cloud, so we’ll show you how to create a prediction job that streams inputs to the model as they change.

Automating prediction

In the final step of this tutorial, let’s connect the model to data. Start by making sure that your model in Einstein Studio is in the Activated state.

Then, you can click over to Integrations on that same page, where you’ll find a list of all prediction jobs. Click on New and then select our AccountMini DMO.

We’ll now map entities in the DMO to the expected input to the model. However, our account-like object does not have all the data we need. We’ll need to add the additional objects that leverage the relationships we set up previously.

Under Confirm Selection, choose Add an Object. Then, you can add your usage_metrics and product_metrics DMOs.

It’s straightforward to map these objects, but just to be sure, see the explicit mapping below.

| Model Schema | Input Object | Input Field |
| --- | --- | --- |
| acct_age | AccountMini | acct_age |
| acct_size | AccountMini | acct_size |
| activation_rate | product_metrics | activation_rate |
| bots | usage_metrics | bots |
| email2case | usage_metrics | email2case |
| engagement_rate | product_metrics | engagement_rate |
| lightning_utilization | usage_metrics | lightning_utilization |
| ltnflows | usage_metrics | ltnflows |
| macro | usage_metrics | macro |
| penetration_rate | product_metrics | penetration_rate |
| quicktext | usage_metrics | quicktext |
| utilization_rate | product_metrics | utilization_rate |
| web2case | usage_metrics | web2case |
| workflowrules | usage_metrics | workflowrules |
| workflows | usage_metrics | workflows |
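If you want to double-check the mapping outside the UI, the same table can be expressed as a small lookup. This is a sketch for illustration only: `FIELD_SOURCES` copies the mapping above, while `build_model_input` and the sample objects are hypothetical stand-ins for records that have already been joined through the relationships we defined.

```python
from typing import Dict

# Model input field -> object that supplies it, copied from the mapping above.
FIELD_SOURCES: Dict[str, str] = {
    "acct_age": "AccountMini",
    "acct_size": "AccountMini",
    "activation_rate": "product_metrics",
    "bots": "usage_metrics",
    "email2case": "usage_metrics",
    "engagement_rate": "product_metrics",
    "lightning_utilization": "usage_metrics",
    "ltnflows": "usage_metrics",
    "macro": "usage_metrics",
    "penetration_rate": "product_metrics",
    "quicktext": "usage_metrics",
    "utilization_rate": "product_metrics",
    "web2case": "usage_metrics",
    "workflowrules": "usage_metrics",
    "workflows": "usage_metrics",
}


def build_model_input(objects: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Pull each model field from the object that the mapping assigns to it."""
    return {field: objects[source][field] for field, source in FIELD_SOURCES.items()}


# Hypothetical joined records for a single account; every value is a placeholder.
objects = {
    source: {f: 1.0 for f, s in FIELD_SOURCES.items() if s == source}
    for source in set(FIELD_SOURCES.values())
}
model_input = build_model_input(objects)
```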

Select Streaming for the Update type, and leave the default setting that any field will trigger an update.
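Conceptually, a streaming job with the “any field” trigger only needs to score records that are new or whose values differ from the last snapshot. The following sketch shows that idea in plain Python; it is not how Einstein Studio detects changes internally, and the `changed_ids` helper and sample snapshots are hypothetical.

```python
from typing import Dict, List

Snapshot = Dict[str, Dict[str, float]]


def changed_ids(previous: Snapshot, current: Snapshot) -> List[str]:
    """Return IDs of records that are new or have at least one changed field."""
    return sorted(
        acct_id for acct_id, fields in current.items()
        if previous.get(acct_id) != fields
    )


# Hypothetical snapshots keyed by account ID.
previous = {"A1": {"bots": 3.0}, "A2": {"bots": 5.0}}
current = {"A1": {"bots": 3.0}, "A2": {"bots": 6.0}, "A3": {"bots": 1.0}}
print(changed_ids(previous, current))  # prints ['A2', 'A3']
```

Only A2 (changed) and A3 (new) would be sent for prediction, which is what keeps data movement between environments small.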

By finalizing that menu, you have created a job. Select Activate to deploy it to production. This will trigger a job that will run after a few minutes, and load data into a DMO of the same name. You can visit Data Explorer to see your data streaming in once the job status reaches the Success state.

Conclusion

Congratulations! You built and deployed your own mini Customer Success Scorecard! This comprises a simple end-to-end architecture delivering machine learning insights at scale. But it doesn’t end there. You can use this output and data to create dashboards in Tableau and leverage these insights in CRM by passing the data back to Salesforce objects.

We hope that you found this valuable and are able to find the same success we have as you take these learnings and apply them to your own systems.

Resources

Salesforce Developers Blog: Connect Salesforce Data Cloud to Amazon SageMaker

Salesforce Developers Blog: Work with Data Cloud Data using Amazon SageMaker ML Capabilities

Salesforce Developers Blog: Bring Your Own AI Models to Data Cloud

AWS Blog: Use the Amazon SageMaker and Salesforce Data Cloud integration to power your Salesforce apps with AI/ML

About the Authors

Collin Cunningham is the Senior Director of Internal Machine Learning Engineering at Salesforce. He has over ten years of experience with data science and machine learning systems. Connect with him on LinkedIn.

Nathan Calandra is a Lead Machine Learning Engineer at Salesforce, building infrastructure and platforms for data scientists. He has ten years of experience in software engineering with a focus on all things data. Connect with him on LinkedIn.

The post Build Your Own Customer Success Scorecard with Amazon SageMaker and Data Cloud appeared first on Salesforce Developers Blog.