How can AI enhance productivity across our 10,000-person global professional services organization? Our Global Delivery Architecture team has spent the last 18 months answering that question. We began by embedding generative AI into our flow of work and later became one of the first Salesforce teams to build a collaborative, multi-org Agentforce experience internally. Throughout this journey, from initial concept to a live system, the principles of the Salesforce Well-Architected Framework served as our north star, ensuring that our solutions were trusted, easy, and adaptable. Now, we want to share what we’ve learned, using the framework to structure our key takeaways.

Project overview

Agentforce, part of the Salesforce Platform, provides powerful tools to build autonomous agents quickly, allowing architects to focus on the key to enterprise success: ensuring that agents are trusted, easy to use, and adaptable. This requires a solid architectural approach to address unique considerations, such as non-deterministic behavior, new data access patterns, and natural language interfaces.

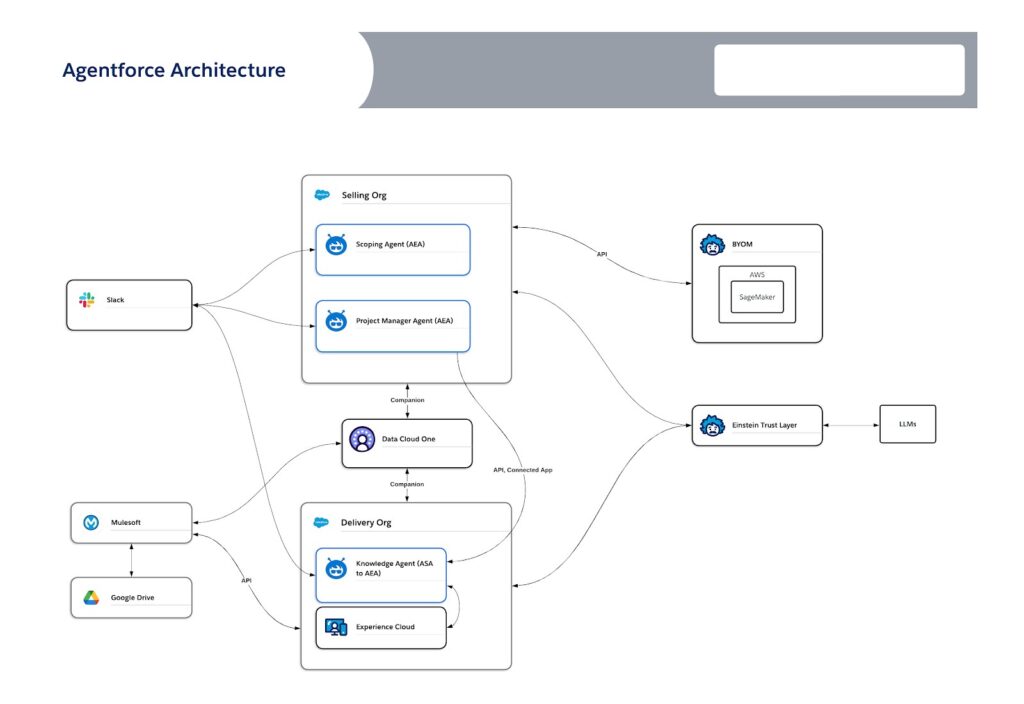

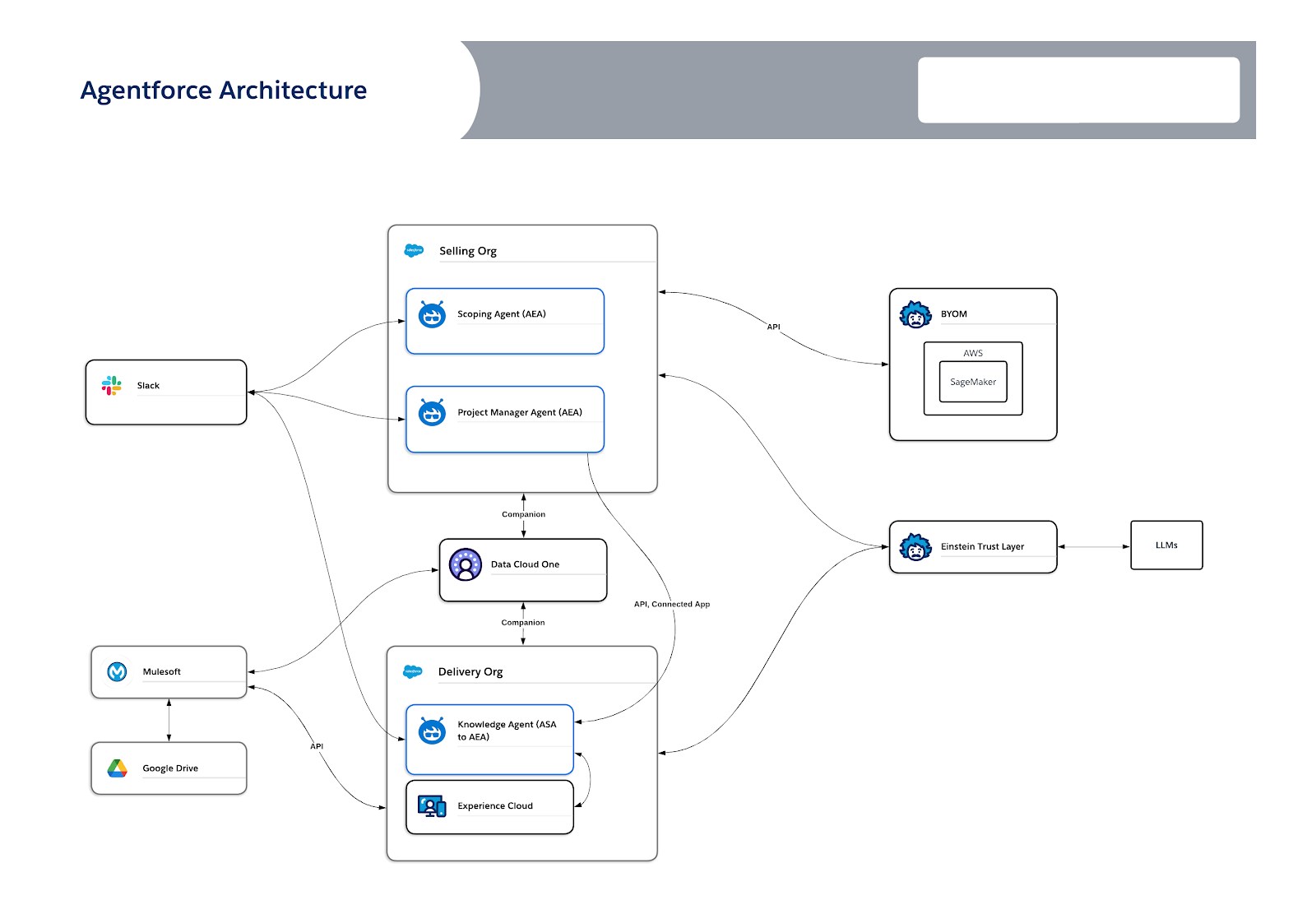

In this article, we’ll examine the Well-Architected Framework’s three pillars through the lens of the Agentforce implementation that we built in-house for the Salesforce Professional Services organization. Our implementation serves as a real-world example and consists of three specialized agents: a Knowledge Management Agent, a Scoping Agent, and a Project Management Agent. They are deployed across two Salesforce orgs, supported by Data 360 and MuleSoft, and exposed through multiple channels, including our internal Salesforce orgs, an Experience Cloud site, and Slack. The diagrams above illustrate this architecture.

Well-Architected Pillar 1: Trusted

The Trusted pillar of Well-Architected demands that solutions be secure, compliant, and reliable. Architects make this principle a reality through design choices in areas like data classification and failover, which directly protect customer data and guarantee business dependability. When building our Agentforce implementation for Professional Services, trust was our top priority. We therefore engineered the system specifically to meet the security, compliance, and reliability demands of an enterprise-scale, autonomous Agentforce platform.

Secure

A secure agent is built on a three-tiered “defense-in-depth” strategy: first, by classifying data for automatic protection; second, by selecting a secure processing boundary; and third, by ensuring that the agent’s permissions are fit for purpose.

- Data classification: The first step is to implement field-level data classification in the Salesforce metadata. Setting the Compliance Categorization on sensitive fields provides explicit instructions to the Einstein Trust Layer, the secure gateway for all LLM interactions on the platform. This classification is the primary control for Automatic Data Masking, where the Trust Layer intercepts and redacts sensitive data before it is sent to an LLM. Our Knowledge Management Agent needed to answer questions about employees and their skills, which required us to identify which fields we considered PII, for example Name and Email Address, and to update the data classification on those fields accordingly.

- Trust boundary patterns: With data classification in place, the selection of an appropriate trust boundary is a critical architectural choice. Each pattern represents a distinct design:

- Shared trust boundary: Best for lower-risk use cases where access to external models is a priority. The security of this pattern relies entirely on data classification and the Trust Layer’s masking capabilities. We selected this pattern for the Knowledge Management, Scoping, and Project Management agents after making sure that our data classifications were in place. We also made sure that any sensitive data in the unstructured content we sent to the LLM was removed from the files themselves before they were sent. For example, we used a MuleSoft-based process to clean sensitive information out of any contributed files.

- Salesforce-hosted boundary: The recommended, secure-by-design option for agents that handle sensitive data or have strict data residency requirements, as all data and processing remain within the Salesforce ecosystem.

- Bring Your Own Model (BYOM): Provides maximum control for highly regulated industries, but shifts the responsibility for securing and managing the model’s infrastructure to the implementing organization. We plan to add this pattern for the predictive use cases that we expose through our agents, which will allow us to train our own predictive models on our own data.

- Agent context: Depending on whether the agent is made available in an authenticated or an unauthenticated context, different agent types apply, each with its own data access considerations. For example:

- Agentforce Employee Agent (AEA): A key architectural advantage of the unified Salesforce Platform is that an employee agent inherits the user’s permissions because it runs in the user’s context, automatically respecting all object permissions, field-level security, and sharing rules. Because our Scoping and Project Management agents run in an authenticated Salesforce org, we chose this agent type for both of them.

- Agentforce Service Agent (ASA): These agents run in the context of their own designated user, which is the same for all end users of the agent. This means that the actions available to the agent must scope data access appropriately themselves. We initially chose this agent type for our Knowledge Management Agent because we expose our knowledge base on an Experience Cloud site, and at the time it was the only agent type supported in a Messaging for In-App and Web (MIAW) setup. However, we are moving to an AEA so that we can more easily expose the agent in Slack.
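To illustrate how field classification can drive masking, here is a minimal Python sketch that assumes a simplified in-memory model of field metadata. It is not the Einstein Trust Layer’s implementation or API: `FieldMeta`, `SENSITIVE_CATEGORIES`, and `mask_record` are hypothetical names, and the category labels are illustrative. It shows only the principle that masking decisions come from metadata rather than from logic embedded in each prompt.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Tuple

# Illustrative category labels that should trigger masking (hypothetical).
SENSITIVE_CATEGORIES = {"PII", "PCI", "HIPAA"}


@dataclass(frozen=True)
class FieldMeta:
    name: str
    # Hypothetical stand-in for a field's Compliance Categorization metadata.
    compliance_categories: FrozenSet[str]


def mask_record(
    record: Dict[str, object], schema: Dict[str, FieldMeta]
) -> Tuple[Dict[str, object], Dict[str, object]]:
    """Replace values of sensitive fields with placeholders before an LLM call.

    Returns the masked record and a placeholder-to-value map that a later step
    could use to restore values in the response (an assumed flow).
    Fields without a sensitive classification pass through unchanged.
    """
    masked: Dict[str, object] = {}
    lookup: Dict[str, object] = {}
    for key, value in record.items():
        meta = schema.get(key)
        if meta is not None and meta.compliance_categories & SENSITIVE_CATEGORIES:
            placeholder = f"<{key.upper()}_{len(lookup)}>"
            lookup[placeholder] = value
            masked[key] = placeholder
        else:
            masked[key] = value
    return masked, lookup
```

Because unclassified fields pass through unchanged, the sketch also shows why identifying fields such as Name and Email Address as PII has to come first: masking can only protect what the metadata labels as sensitive.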

Compliant

Beyond being secure, a trusted agent must also operate in a compliant manner, adhering to legal and regulatory boundaries. This requires verifiable controls that prove adherence to regulations like GDPR or HIPAA.

- Data residency: The agent’s trust boundary pattern is the primary control for meeting data residency requirements. For the most stringent needs, architecting the solution on the Salesforce-hosted boundary within a geo-specific Hyperforce instance is the verifiable choice. For our Agentforce instance, a key architectural decision was to build in our existing Salesforce orgs, which reside in the U.S. We based this decision on a risk assessment of our initial use cases, which we classified as lower risk. For future use cases subject to stricter data residency regulations, we may adopt the Salesforce-hosted boundary within a geo-specific Hyperforce instance.

- Observability: The Einstein Trust Layer is central to compliance enforcement and provides two functions that are critical for auditors:

- It enforces a contractual Zero-Retention policy with external LLM providers, ensuring that client data is not stored or used for training.

- It creates an immutable audit trail of every prompt, response, and data interaction, which is then stored in Data 360. This log provides the necessary evidence to answer any auditor’s questions about who accessed what data and when.

When we first launched our Knowledge Management Agent, restrictions in Data 360 prevented us from using its observability data. We initially solved this by logging all questions and answers in our Salesforce org to maintain the necessary traceability. Now that observability is fully supported for companion orgs, we are switching to the data that is logged in Data 360 and exposed through Agentforce Studio.
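The interim approach of logging every question and answer can be sketched as an append-only log. This is a minimal, hypothetical Python model, not our actual Salesforce implementation and not how Data 360 stores observability data: `InteractionEntry`, `InteractionLog`, and `who_accessed` are illustrative names. The query answers the auditor’s core question of who accessed which data and when.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class InteractionEntry:
    user_id: str
    agent: str
    question: str
    answer: str
    records_accessed: Tuple[str, ...]  # IDs of records used to ground the answer
    timestamp: datetime


@dataclass
class InteractionLog:
    # Append-only by interface: entries are frozen, and there is no update or delete method.
    _entries: List[InteractionEntry] = field(default_factory=list)

    def append(
        self,
        user_id: str,
        agent: str,
        question: str,
        answer: str,
        records_accessed: Iterable[str],
        timestamp: Optional[datetime] = None,
    ) -> InteractionEntry:
        entry = InteractionEntry(
            user_id, agent, question, answer, tuple(records_accessed),
            timestamp if timestamp is not None else datetime.now(timezone.utc),
        )
        self._entries.append(entry)
        return entry

    def who_accessed(
        self, record_id: str, since: Optional[datetime] = None
    ) -> List[Tuple[str, datetime]]:
        """Return (user_id, timestamp) pairs for interactions that used record_id."""
        return [
            (e.user_id, e.timestamp)
            for e in self._entries
            if record_id in e.records_accessed and (since is None or e.timestamp >= since)
        ]
```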

Ethical

In terms of ethical standards, users should never be misled into thinking they are interacting with a human. They should also be made aware that content is AI-generated and might not be accurate. When we planned to let our Knowledge Management Agent help find experts inside our Professional Services organization, we asked the HR team for permission to query and display employee skills before building and releasing this functionality.

Reliable

For an agent to be reliable, its architecture must account for the non-deterministic behavior of LLMs and for inevitable technical failures. In addition, one of the key predictors of perceived reliability is the accuracy and value of the data that the agent can access to produce its output.

When designing for graceful failure of our Agentforce implementation, we considered the following aspects:

- Automated recovery: The use of intelligent LLM failover handles provider outages, providing a first line of defense that reroutes requests to ensure business continuity. We are not currently leveraging this functionality for our Agentforce instance based on our risk assessment of the current use cases.

- Graceful degradation: When a generative task fails, the agent could fall back on a deterministic skill. For instance, if our Knowledge Management Agent is unable to provide a summarized answer or interpret the question, it can resort to a keyword-based search and return a set of results.

- Intelligent escalation: For intents beyond an agent’s capability, the design must include a seamless handoff to a human that transfers the full conversation context. We initially experimented with a handoff to a specialized agent for certain types of queries; however, we could not yet pass the full conversation context. In our use cases, we do not have human agents to hand off to, so instead we suggest relevant Slack channels where the conversation can continue.

- Transparent failure: As a last resort, the agent should fail honestly with a clear error message that acknowledges the problem and suggests an alternative path.
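Taken together, graceful degradation, escalation, and transparent failure form an ordered fallback chain. The Python sketch below shows that chain under simplifying assumptions: `generate_answer`, `keyword_search`, and `suggest_slack_channels` are hypothetical callables standing in for the generative step, the deterministic search, and the Slack channel suggestion, not Agentforce APIs. It omits LLM failover, which we don’t currently use.

```python
from typing import Callable, List, Optional


def answer_with_fallbacks(
    question: str,
    generate_answer: Callable[[str], Optional[str]],
    keyword_search: Callable[[str], List[str]],
    suggest_slack_channels: Callable[[str], List[str]],
) -> str:
    # 1. Preferred path: a generated, summarized answer.
    try:
        answer = generate_answer(question)
    except Exception:  # e.g. a timeout or provider error counts as a failed generation
        answer = None
    if answer:  # None or an empty string means the generative step failed
        return answer

    # 2. Graceful degradation: a deterministic keyword search.
    results = keyword_search(question)
    if results:
        listing = "\n".join(f"- {r}" for r in results)
        return "I couldn't summarize an answer, but these results may help:\n" + listing

    # 3. Escalation: point the user to people in relevant Slack channels.
    channels = suggest_slack_channels(question)
    if channels:
        return "I couldn't find an answer. You could ask in " + ", ".join(channels) + "."

    # 4. Transparent failure: acknowledge the problem and suggest a next step.
    return ("Sorry, I couldn't answer that and found no related resources. "
            "Please try rephrasing your question.")
```

Ordering the steps from most to least capable keeps each layer simple: every branch either returns something useful or hands off to the next, more conservative option.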

Data quality

Of course, technical resilience is only one piece of the reliability equation. An agent is ultimately a reflection of the data it uses, and no amount of failover logic can compensate for flawed information. Recognizing this, we made data quality a foundational step in our agent design. For our Knowledge Management Agent, this meant starting with the most highly rated content in our knowledge base and excluding content whose accuracy we were unsure of. For our Scoping Agent, we used AI-based preprocessing to prepare our data for predictive tasks, and for the Project Management Agent, we invested in building new data points that enable it to assess project health. Each agent’s performance is directly tied to this data investment, which shows that robust data governance processes are a foundational step in building reliable agents.
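Starting with the most highly rated knowledge can be as simple as a filter applied before content is made available for grounding. The sketch below assumes a hypothetical `Article` shape with an average rating and a `verified` flag marking content we are confident in; the 4.0 threshold is an illustrative value, not the one we used.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Article:
    article_id: str
    title: str
    avg_rating: float  # e.g. average of user ratings on a 1-5 scale (assumed)
    verified: bool     # False when the content's accuracy is uncertain


def select_grounding_articles(
    articles: Iterable[Article], min_rating: float = 4.0
) -> List[Article]:
    """Keep only verified, highly rated articles, best-rated first."""
    eligible = [a for a in articles if a.verified and a.avg_rating >= min_rating]
    return sorted(eligible, key=lambda a: a.avg_rating, reverse=True)
```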

Well-Architected Pillar 2: Easy

The Easy pillar ensures that solutions are intentional, automated, and engaging, so that they deliver tangible value to the business and end users. For our agents, this meant aligning each one to a specific business context to solve the right problem in the right way. This focus prevents wasted effort, improves adoption among our colleagues, and translates directly to the quality of the conversational experience.

Intentional

An intentional design defines the strategic purpose of the agent before development begins. It’s about making deliberate architectural choices to ensure that the agent solves the right problem effectively.

- Persona-driven agent: We formally defined personas for each of our agents to guide their tone and interaction style. For example, the persona for our Knowledge Management Agent initially started as a “Salesforce Architect” to mirror our early users. As the agent’s skills expanded to handle more complex Professional Services questions, the persona evolved into a “Delivery Professional”: knowledgeable, efficient, and collaborative. Such personas dictate how agents interact, ensuring that they feel like a helpful team member rather than just a tool.

- Conversation design: Leveraging our HCC team’s conversation design approach, we intentionally designed welcome messages for our agents and explored creating a more personalized and contextualized experience based on user roles and page location.

- Design-led approach: We adopted a design-led approach using Elements.cloud. Before writing a single prompt, we mapped the end-to-end business process that the agent needed to support. For example, for our Scoping Agent, we mapped the entire process from initial request to draft statement of work. This process map is then used to generate the agent’s core instructions, ensuring that the conversational flow is directly tied to a proven business workflow. All three of our agents are built on this foundation.
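To show the idea of deriving instructions from a process map, here is a hypothetical Python sketch. It does not reflect Elements.cloud’s data model or its generation logic; `ProcessStep` and `render_instructions` are illustrative names. The point is that the persona and the ordered business steps are the single source, so changing the approved process changes the instructions.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ProcessStep:
    name: str
    instruction: str  # what the agent should do at this step


def render_instructions(persona: str, steps: List[ProcessStep]) -> str:
    """Turn a persona and an ordered process map into numbered agent instructions."""
    lines = [f"You are a {persona}. Follow these steps in order:"]
    for i, step in enumerate(steps, start=1):
        lines.append(f"{i}. {step.name}: {step.instruction}")
    return "\n".join(lines)
```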

Automated

An “easy” solution uses the right automation for the right task. The architect’s role is to place each agent capability at the correct point on the determinism spectrum — from fully predictable to intelligently flexible.

- Designing on the determinism spectrum: Our primary architectural pattern is to use non-deterministic prompts for understanding and interpretation, which then trigger deterministic actions.

- Non-deterministic example: A user asking the Project Management Agent, “What’s the status of project xyz?” requires the agent to interpret the vague request, understand the user’s context, and synthesize information. This is handled by a flexible prompt template.

- Deterministic example: A request like “Add 8 hours to my timesheet for project xyz today” demands a highly deterministic action. The agent confirms the details and then reliably calls a standard flow to create the time entry.

- Guardrails: Non-deterministic automation also requires guardrails. For deterministic flows, the logic itself contains the guardrails and boundaries. However, for the agent’s non-deterministic abilities, the guardrails need to be written directly into its core instructions. An example of one guardrail we applied is: “Only answer questions that you can answer based on context data provided, do not answer if you cannot answer based on the results.”
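The split between interpretation and execution can be sketched as follows, assuming the prompt template has already turned a message like “Add 8 hours to my timesheet for project xyz today” into a structured request. In this hypothetical Python example, `LogTimeRequest` and `log_time` are illustrative stand-ins for the standard flow we call. Validation and the write happen in deterministic code, so the same valid request always produces the same entry.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Set


@dataclass(frozen=True)
class LogTimeRequest:
    # Structured output of the non-deterministic interpretation step (assumed shape).
    project: str
    hours: float
    day: date


class ValidationError(ValueError):
    pass


def log_time(
    req: LogTimeRequest, known_projects: Set[str], timesheet: List[Dict[str, object]]
) -> Dict[str, object]:
    """Deterministic action: validate the request, then append one time entry."""
    if req.project not in known_projects:
        raise ValidationError(f"Unknown project: {req.project}")
    if not 0 < req.hours <= 24:
        raise ValidationError("Hours must be greater than 0 and at most 24")
    entry = {"project": req.project, "hours": req.hours, "date": req.day.isoformat()}
    timesheet.append(entry)
    return entry
```

If the model misreads the request, the deterministic step rejects it instead of writing bad data, which is the same boundary the guardrails draw for the non-deterministic side.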

- Unifying automation for end-to-end workflows: The true power of our agents comes from orchestrating different types of automation through the Atlas Reasoning Engine. Our Knowledge Management Agent exemplifies this pattern. When a new technical document is submitted, the agent orchestrates a multi-step process:

- An AI-powered prompt summarizes the document content for a new knowledge article.

- A deterministic flow populates the summary into the correct fields of the Knowledge Article object.

- A second AI-powered prompt analyzes the summary and suggests relevant topic tags.

- A final deterministic flow applies the confirmed tags to the article record.

This provides the best of both worlds: an intelligent, context-aware interaction backed by predictable and reliable business processes.
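The four-step document workflow alternates generative and deterministic steps. The Python sketch below models only that alternation: `summarize` and `suggest_tags` stand in for the two prompt templates, `confirm` for the user’s confirmation, and the dictionary with hypothetical `Summary` and `Tags` keys for the Knowledge Article record. In our implementation, the Atlas Reasoning Engine orchestrates these steps rather than a hard-coded function.

```python
from typing import Callable, Dict, List


def create_article_from_document(
    document_text: str,
    summarize: Callable[[str], str],            # generative: prompt template
    suggest_tags: Callable[[str], List[str]],   # generative: prompt template
    confirm: Callable[[List[str]], List[str]],  # user confirms or edits the tags
) -> Dict[str, object]:
    # Step 1 (AI): summarize the document content.
    summary = summarize(document_text)
    # Step 2 (deterministic): populate the article fields.
    article: Dict[str, object] = {"Summary": summary.strip(), "Tags": []}
    # Step 3 (AI): suggest topic tags from the summary.
    suggested = suggest_tags(summary)
    # Step 4 (deterministic): apply the tags returned by the confirmation step,
    # normalized, de-duplicated, and sorted so the result is predictable.
    confirmed = confirm(suggested)
    article["Tags"] = sorted({t.strip().lower() for t in confirmed if t.strip()})
    return article
```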

Engaging

An engaging agent is the direct result of an intentional, architected design. It guides users, operates within safe boundaries, and builds trust through clarity.

- Create clear instructions: To prevent user frustration, our agents immediately state their capabilities. For example, the opening message for our Project Management Agent is: “Hello, I’m your Project Management Assistant. I can provide project status updates, flag risks, or help you log your time. What would you like to do?”

- Provide guardrails: An agent must operate within a defined scope in order to be trustworthy and, by extension, engaging. For our agents, guardrails are a critical architectural component for safety and dependability. For example, our Scoping Agent is explicitly prohibited from making financial commitments to prevent business risk. If asked to promise a price, it safely deflects the request by stating its limitations. Similarly, to ensure that it is a safe and equitable tool, our Knowledge Management Agent has guardrails that prevent it from answering questions about employees beyond their skills profile.

- Give explicit confirmation: Before executing an action that modifies data, our agents are designed to get explicit confirmation. The Project Management Agent, for instance, will ask: “To confirm, you want to add a two-week risk to the project timeline for ‘Client UAT’. Is that correct?” The agent only proceeds after receiving an affirmative response.
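The explicit-confirmation rule can be enforced in code rather than left entirely to the model. This minimal Python sketch uses hypothetical names (`PendingAction`, `ConfirmationGate`, `AFFIRMATIVE`): it holds a data-modifying action and executes it only after an affirmative reply, and any other reply cancels it.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Replies treated as an explicit "yes"; anything else cancels the action.
AFFIRMATIVE = {"yes", "y", "correct", "confirm"}


@dataclass
class PendingAction:
    description: str            # shown to the user in the confirmation prompt
    execute: Callable[[], str]  # the data-modifying step; returns a result message


class ConfirmationGate:
    """Holds one data-modifying action until the user explicitly confirms it."""

    def __init__(self) -> None:
        self._pending: Optional[PendingAction] = None

    def propose(self, action: PendingAction) -> str:
        self._pending = action
        return f"To confirm, you want to {action.description}. Is that correct?"

    def reply(self, user_message: str) -> str:
        # Each proposal can be answered only once, whatever the reply.
        action, self._pending = self._pending, None
        if action is None:
            return "There is no pending action to confirm."
        if user_message.strip().lower().rstrip(".!") in AFFIRMATIVE:
            return action.execute()
        return "Okay, I haven't made any changes."
```

Treating anything other than a clear “yes” as a cancellation errs on the side of not modifying data, which matches the intent of asking for confirmation in the first place.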

Well-Architected Pillar 3: Adaptable

The Adaptable pillar is about resilience to change and composability, allowing solutions to gracefully evolve with business needs. Every architectural decision involves trade-offs. Designing for adaptability means those trade-offs are made consciously, so that agents continue to deliver value as requirements and technologies change (which they inevitably will).

Resilient

In the context of adaptability, resilience is about effective agent lifecycle management (ALM). Our agents require a process for managing change that is safe and scalable. We found a design-first lifecycle management process to be the most effective approach. Here are the key stages in this process:

- Design & Document: The agent’s logic is first defined in our process mapping tool, which serves as the official blueprint. When we need to add a new capability, such as enabling the Scoping Agent to access a new data source, the process flow is first updated in the diagram.

- Govern & Approve: Stakeholders review and approve the proposed changes against the new design. This creates an audit trail before any configuration is touched, and once approved, we can generate new instructions directly from the diagram.

- Implement & Test: With an approved blueprint, we configure the agent in a sandbox. We use Agentforce’s Testing Center to validate performance, and as a final quality gate, we deploy a beta version of the agent into production. This allows a select group of users to test the new functionality against live production data, ensuring that it performs as expected before a full rollout.

- Deploy: The final configuration is promoted through our standard, governed CI/CD pipeline.
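The ordering of these stages can be enforced mechanically. Below is a hypothetical Python sketch of a change record that cannot skip ahead, for example straight from design to deployment. The stage names mirror the list above (with the beta check split out from testing), but the `Stage` and `AgentChange` classes are illustrative and not part of Salesforce or any CI/CD tool.

```python
from enum import IntEnum
from typing import List


class Stage(IntEnum):
    DESIGNED = 1
    APPROVED = 2
    TESTED = 3
    BETA_VALIDATED = 4
    DEPLOYED = 5


class AgentChange:
    """Tracks one agent change through a design-first lifecycle."""

    def __init__(self, summary: str) -> None:
        self.summary = summary
        self.stage = Stage.DESIGNED
        self.history: List[Stage] = [Stage.DESIGNED]

    def advance(self, to: Stage) -> None:
        # Stages must be completed strictly in order; any skip raises an error.
        if to != self.stage + 1:
            raise ValueError(f"Cannot move from {self.stage.name} to {to.name}")
        self.stage = to
        self.history.append(to)
```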

A design-first lifecycle needs data to guide it. A feedback loop provides this data, turning deployment into a cycle of continuous improvement.

- Feedback loop: We have implemented a feedback mechanism directly within Slack, allowing end users to give a simple thumbs-up or thumbs-down rating after an interaction with the Project Management Agent. This provides immediate, actionable data on response quality. While this capability is not yet available in the standard Messaging for In-App and Web (MIAW) UI, we plan to adopt it as soon as it is released.

- Formal governance: This feedback is operationalized through a formal governance process. Business and technical stakeholders meet regularly to review performance analytics and feedback patterns. This process allows us to make data-driven decisions, prioritize a backlog of new agent skills and corrections, and feed those priorities directly into our ALM cycle.
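Turning raw thumbs-up and thumbs-down ratings into review material for governance meetings can be sketched like this. The `Feedback` event shape and the minimum-vote rule are hypothetical; the idea is to rank topics by approval rate so that the weakest areas rise to the top of the backlog discussion.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Feedback:
    topic: str       # e.g. the agent topic or action that produced the response
    thumbs_up: bool


def weakest_topics(
    events: Iterable[Feedback], min_votes: int = 5
) -> List[Tuple[str, float, int]]:
    """Return (topic, approval_rate, votes), lowest approval first.

    Topics with fewer than min_votes ratings are skipped to avoid noisy rates;
    ties are broken by putting topics with more votes first.
    """
    ups: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for e in events:
        totals[e.topic] += 1
        ups[e.topic] += int(e.thumbs_up)
    rows = [(t, ups[t] / n, n) for t, n in totals.items() if n >= min_votes]
    return sorted(rows, key=lambda r: (r[1], -r[2]))
```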

Composable

A composable architecture, built from independent and interchangeable components, is central to our adaptable agentic ecosystem. Our entire architecture is decoupled, leveraging MuleSoft with Data 360 and Agentforce.

- Reusable actions and logic: The foundation of our composable design is a library of discrete actions. However, we’ve taken this a step further by designing an entire agent as a reusable component. Our Knowledge Management Agent, which lives in its own dedicated org, is engineered to be a modular, invocable source of truth.

- Interoperability and multi-agent orchestration: Our composable architecture allows our agents to interoperate. Our Scoping Agent and Project Management Agent, which live in separate Salesforce orgs, can call the Knowledge Management Agent as an action. When the Scoping Agent needs the latest best practices for a specific technology, it doesn’t need its own knowledge base; it simply calls the Knowledge Management Agent to benefit from its specialized skills and data. This pattern allows us to build specialized agents that do one thing well and share their capabilities across the enterprise.

- Decoupled design: This agent-to-agent communication is possible because of a decoupled design that separates logic from the presentation channel. This “build once, deploy everywhere” strategy is key to our architecture. The core logic of our Knowledge Management Agent can be surfaced in an Experience Cloud site for self-service, exposed in Slack for end users, or called by another agent, all without refactoring its fundamental design.
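The “agent as an action” pattern can be sketched with a narrow interface. In this hypothetical Python example, a knowledge agent is anything with an `ask` method; the Scoping Agent depends only on that interface, and two thin channel adapters format the same answer differently. All names are illustrative; in our implementation, the cross-org call goes through Agentforce, not through Python objects.

```python
from dataclasses import dataclass
from typing import Dict, Protocol, Tuple


@dataclass(frozen=True)
class KnowledgeAnswer:
    text: str
    sources: Tuple[str, ...]  # e.g. IDs of the knowledge articles used


class KnowledgeAgent(Protocol):
    def ask(self, question: str) -> KnowledgeAnswer: ...


class ScopingAgent:
    """Depends on the KnowledgeAgent interface, not on where that agent lives."""

    def __init__(self, knowledge: KnowledgeAgent) -> None:
        self.knowledge = knowledge

    def best_practices(self, technology: str) -> KnowledgeAnswer:
        return self.knowledge.ask(f"What are the latest best practices for {technology}?")


# Channel adapters: presentation only, no business logic.
def to_slack(answer: KnowledgeAnswer) -> str:
    return answer.text + "\n" + "\n".join(f"• {s}" for s in answer.sources)


def to_web(answer: KnowledgeAnswer) -> Dict[str, object]:
    return {"body": answer.text, "links": list(answer.sources)}
```

Because the adapters never touch the agent’s logic, adding a new channel means adding one small formatting function rather than changing the agent.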

Conclusion

Building effective autonomous agents requires deliberate architectural planning. The unique challenges they present, around data security, reliability, and adaptability, cannot be an afterthought. Applying the principles of the Well-Architected Framework gives architects the rigor they need to design and build Agentforce solutions that are trusted, effective, and designed to evolve.