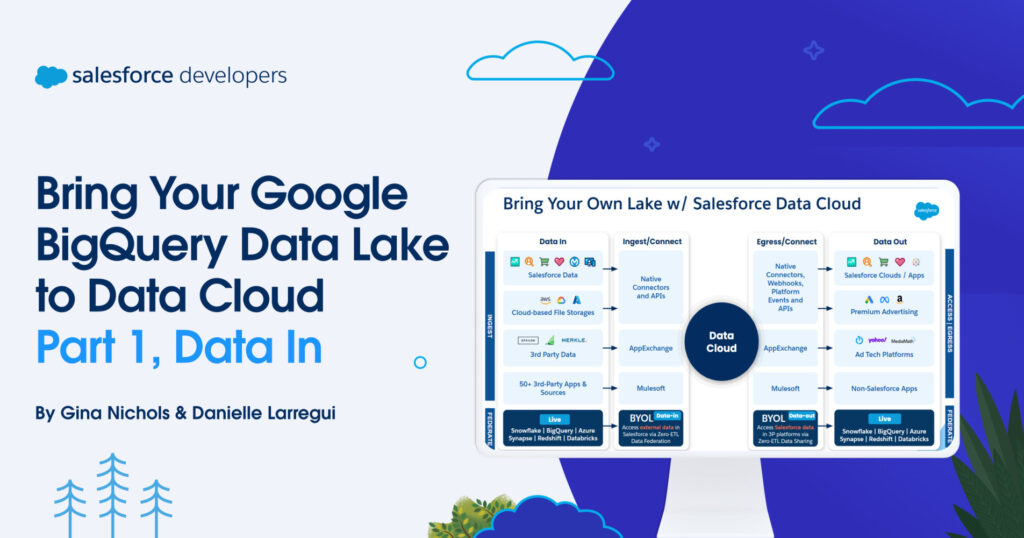

Thanks to our partnership with Google, we are happy to announce that developers can now connect their Google BigQuery enterprise data warehouse to Salesforce Data Cloud without having to rely on an exact, transform, and load (ETL) process. This seamless integration is bi-directional and allows for data-sharing with Zero Copy approach.

This post is the first of a two-part series on using Google BigQuery with Data Cloud. In this post, we’ll show you how to share data from Google BigQuery with Salesforce using Data Cloud’s Zero Copy Integration data shares capabilities.

What is Google BigQuery?

Google BigQuery is a fully managed, serverless data warehouse that enables scalable analysis across petabytes of data. It is a part of the Google Cloud Platform, providing a powerful SQL engine to run fast queries against large datasets. Developers use BigQuery to perform big data analytics, harnessing Google’s infrastructure to analyze data in real time. They can also integrate BigQuery with other Google Cloud services or third-party tools to ingest, store, and visualize data.

Zero Copy (data-in) Data Federation

Data Cloud users can now directly connect to tables in Google BigQuery using a new Salesforce Data Cloud connector, and this connectivity is facilitated using query federation. This capability ensures that Google BigQuery external tables are accessible and operable within Data Cloud as external data lake objects. These external data lake objects contain only the metadata of the Google BigQuery object that it’s trying to read data from.

Once the external data lake object is set up, it can be mapped to the data model object (DMO). This mapped DMO can then be used in identity resolution, segmentation, calculated insights, queries, etc. during which Data Cloud seamlessly federates over JDBC to query live data from Google BigQuery.

This integration also allows an “acceleration” feature which provides users with the option to cache their data in Data Cloud, facilitating the storage of queried data. This enhances performance in instances where real-time access to the data is not necessary. Users could select this feature in scenarios where they have high data volumes and low data change rates.

Create Your Service Account User and Generate Private Key

Now that you have an understanding of Google BigQuery, Salesforce Data Cloud, and Bring Your Own Lake functionality, let’s take a look at how to set up your connection between Google BigQuery and Data Cloud. In Google Cloud, you will first need to navigate to “IAM & Admin” and select “Service Accounts”.

Then click “+Create Service Account”.

Enter in a service account name and click “Create and Continue”.

Grant the newly created service account permissions to big BigQuery. In our example we are granting the “BigQuery Admin” and “BigQuery Connection Admin” roles. As a best practice follow the principle of least privilege access.

Click the actions icon next to the service account name and then “Manage Keys”.

Click “Add Key” then “Create new key”.

Choose “JSON” as the key type.

Take a note of where the key file gets stored. In my example, mine went to my downloads folder.

How to set up Google BigQuery Connector in Data Cloud

In Data Cloud Setup click “Other Connectors”.

Click “New”.

Select “Google BigQuery.”

Enter a “Connection Name”, and a “Connection API Name” will automatically populate. Upload the private key that you downloaded into “SSH Private Key”. Enter the email address from your private key file in the “Service Account Email” field and the project Id from the file in the “Project ID” field. After you have entered all of the values click the “Test Connection” button to test your connection between Salesforce Data Cloud and Google BigQuery.

When you have fully set up the Google BigQuery connection your connection will show under More Connectors as pictured below.

Create a Data Stream

The final thing to do is to create a Data Stream to ingest data via our Google BigQuery connection. Once the connection to Google BigQuery has been set up, navigate to Data Cloud > Data Streams > New. Then select Google BigQuery as your source and choose Next.

Next, select the BigQuery Connection you set up in Step 1, then select the database within Google BigQuery. Once selected, the available schemas in that database are automatically populated on the left.

Select the schema that contains the data that you want to use in Data Cloud. Once selected, all the objects in that schema are populated on the right, ready for selection. Select the objects that you would like to use in Data Cloud. Then click Next.

Note: You can select multiple objects to map to Data Cloud in this step.

Once the objects have been selected specify the name, object category, the primary key, the record modified field, and the organization unit identifier. The record modified field has to be a date-time field, and it is used to indicate when the row was last changed in Google BigQuery. The organization unit identifier is used to specify business units for lineage purposes. Select the fields that you would like to use in Data Cloud, and then click Next.

Select which data space you would like this object to belong to. You can also specify any filters that you would like to apply to this data. For example, if you were bringing in product data and only wanted to bring in data from a specific set of departments, you can set the filter here. Optionally you can choose to enable “acceleration.” Enabling acceleration allows Data Cloud to cache the data from the source BigQuery object to improve performance. Click Deploy once done.

Note: Acceleration makes this data lake object available to some features that can’t use external data lake objects as source data. Using acceleration impacts the consumption of credits used for billing. The Acceleration cache can be refreshed based on the schedule shown in the screenshot below.

Map your data

Once the data stream has been successfully deployed, the external data lake object is now available for mapping to the Data Cloud data model.

Next, map the external data lake object to the standard data model, or create a custom data model object if needed.

Once mapped, this object is available for identity resolution, segmentation, and activation — similar to how existing data model functionality works.

Conclusion

The seamless integration of Google BigQuery with Data Cloud using the Zero Copy strategy represents a monumental shift in how developers approach data management. By effectively breaking down data silos, this approach not only streamlines the aggregation and analysis of diverse data types, but it also significantly enhances the ability to deliver personalized customer experiences.

Part 2 of our series on Google BigQuery and Data Cloud will focus on sharing data from Salesforce to BigQuery (data out).

Explore our new partnership page to discover more about the collaboration between Salesforce and Google.

Further resources

Salesforce and Google announcement

Spring 24 Release Notes on BYOL data share

Trailhead module: Get to Know Data Cloud: Quick Look

Trailhead module: BYOL Data Shares in Data Cloud: Quick Look

About the authors

Gina Nichols is a Director on the Salesforce Data Cloud product team. She has a background as an architect working with some of Salesforce’s enterprise customers in the MarTech space. She is also a co-author of the award-winning, Transform the Consumer Experience Customer 360 Guide, which won an award of Merit at the STC Chicago competition. You can follow her on LinkedIn.

Danielle Larregui is a Senior Developer Advocate at Salesforce focusing on the Data Cloud platform. She enjoys learning about cloud technologies, speaking at and attending tech conferences, and engaging with technical communities. You can follow her on X.

The post Bring Your Google BigQuery Data Lake to Data Cloud: Part 1, Data In appeared first on Salesforce Developers Blog.