As autonomous AI systems gain traction, businesses increasingly depend on networks of interacting agents to drive complex processes and decisions. But with the rise of these AI “teams” come questions about how best to ensure they operate responsibly, ethically, and effectively.

Just as companies govern human teams, autonomous systems also require frameworks to manage risk, compliance, and performance. But networks of interdependent, self-directed agents need more than traditional governance models. We’ll explore three strategies for governing multi-agent systems (MAS) and the challenges and opportunities they bring.

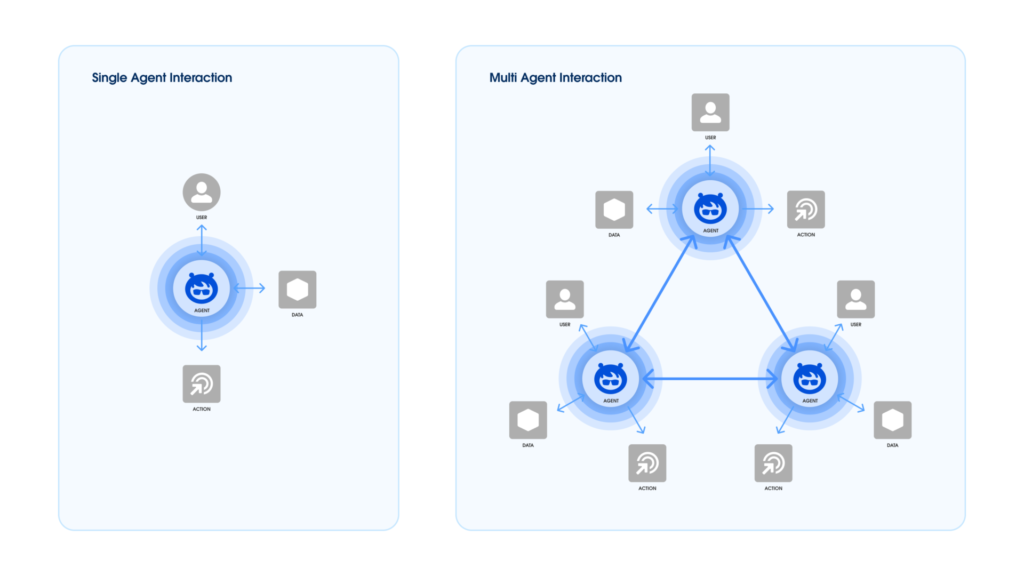

Single agent vs. multi-agent interactions

1. Extending single-agent governance principles to multi-agent systems

When governing a single autonomous agent, businesses typically implement several guardrails, including:

- Filtering unsafe inputs to minimize harmful interactions

- Human feedback loops, such as reinforcement learning from human feedback (RLHF), to align agent behavior with organizational values

- Adversarial testing, like red-teaming, to make agents resilient to real-world challenges

- Output controls that apply post-processing safeguards before results reach end users

These measures work well for individual agents but can quickly become strained in a multi-agent setting. In MAS, agents coordinate to achieve shared goals, and that coordination often produces new, unpredictable behaviors that single-agent governance methods were not designed to catch.

It isn’t enough to oversee individual agents in a multi-agent system. We need to pay attention to how they interact with each other. This calls for frameworks that can manage the collective behavior of diverse AI teams.
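
To make the interaction problem concrete, here is a minimal Python sketch built on invented assumptions: two hypothetical agents each grant a discount on the same order, a per-agent output control caps any single discount at 15%, and a hypothetical company policy caps the total discount per order at 20%. Each agent passes its own check, yet together they breach the policy, which only a check over the combined interaction catches. The agent names, caps, and `Action` type are illustrative placeholders, not part of any product.

```python
from dataclasses import dataclass

# Hypothetical limits, chosen for illustration only.
PER_AGENT_CAP = 0.15  # maximum discount a single agent may grant
POLICY_CAP = 0.20     # maximum total discount allowed on one order


@dataclass
class Action:
    agent: str
    order_id: str
    discount: float


def single_agent_check(action: Action) -> bool:
    """Output control applied to one agent's action in isolation."""
    return action.discount <= PER_AGENT_CAP


def system_level_check(actions: list[Action]) -> dict[str, float]:
    """Sum discounts per order across all agents; return orders over the policy cap."""
    totals: dict[str, float] = {}
    for a in actions:
        totals[a.order_id] = totals.get(a.order_id, 0.0) + a.discount
    # Round to avoid floating-point noise in the reported totals.
    return {oid: round(total, 4) for oid, total in totals.items() if total > POLICY_CAP}


actions = [
    Action("service_agent", "order-42", 0.10),
    Action("loyalty_agent", "order-42", 0.12),
]

print(all(single_agent_check(a) for a in actions))  # True: each agent looks compliant
print(system_level_check(actions))                  # {'order-42': 0.22}: the team is not
```

The per-agent check is the single-agent guardrail; the system-level check is the kind of collective oversight the paragraph above calls for.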

2. Designing governance for multi-agent complexity

In MAS, complexity grows as agents communicate, collaborate, and make decisions together. This coordination can produce unforeseen effects, or “emergent behaviors,” making it difficult to predict or fully control the system’s outputs. Businesses need governance models that can adapt dynamically to evolving agent interactions.

Here are some methods for managing multi-agent complexity:

- Layered governance approaches: A “sandwich” model of pre-filters, real-time monitoring, and post-process checks can provide multiple safety nets, with adjustments for multi-agent settings, such as monitoring the messages agents pass to one another rather than only each agent's inputs and outputs.

- Constitutional frameworks: Creating a constitution for MAS can set clear rules and guiding principles for interactions, much like guidelines governing ethical AI. These might include limits on agent autonomy in high-stakes scenarios or rules around collaboration and decision-sharing.

- Automated watchdog agents: Deploying secondary agents adds another layer of oversight. These agents monitor interactions between other agents for unusual patterns or harmful content and, when risks arise, escalate them to a human agent. This helps address risks while freeing the human agent to focus on more critical tasks.
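
The three methods above can work together. The Python sketch below shows one possible arrangement, not a reference design: a pre-filter screens the user request, a watchdog checks every inter-agent message against a small “constitution” of rules, and a post-process check runs on the final output. The rule names, `Message` fields, blocked phrase, and the `escalations` list (standing in for a human review queue) are all hypothetical; a real deployment would replace the keyword checks with proper classifiers.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    sender: str
    recipient: str
    content: str
    high_stakes: bool = False
    human_approved: bool = False


# Hypothetical constitution: each named rule is a predicate every message must satisfy.
Rule = Callable[[Message], bool]
CONSTITUTION: dict[str, Rule] = {
    "no_credentials_shared": lambda m: "password" not in m.content.lower(),
    "high_stakes_needs_human": lambda m: not m.high_stakes or m.human_approved,
}

BLOCKED_INPUT_TERMS = ("ignore previous instructions",)  # toy pre-filter list


def pre_filter(user_request: str) -> bool:
    """Layer 1: reject obviously unsafe inputs before any agent sees them."""
    text = user_request.lower()
    return not any(term in text for term in BLOCKED_INPUT_TERMS)


@dataclass
class Watchdog:
    """Layer 2: checks inter-agent messages and records violations for human review."""
    escalations: list[tuple[str, Message]] = field(default_factory=list)

    def inspect(self, msg: Message) -> bool:
        violated = [name for name, rule in CONSTITUTION.items() if not rule(msg)]
        for name in violated:
            self.escalations.append((name, msg))  # escalate instead of silently dropping
        return not violated


def post_check(final_output: str) -> bool:
    """Layer 3: last check before the result reaches end users."""
    return bool(final_output) and "password" not in final_output.lower()


watchdog = Watchdog()
request = "Refund order 42 and update the invoice"
messages = [
    Message("service_agent", "finance_agent", "Refund order 42 for $80"),
    Message("finance_agent", "service_agent", "Approved refund of $5,000", high_stakes=True),
]

if pre_filter(request):
    delivered = [m for m in messages if watchdog.inspect(m)]
    final = delivered[-1].content if delivered else ""
    print(post_check(final), [name for name, _ in watchdog.escalations])
    # True ['high_stakes_needs_human']
```

Keeping the constitution as data, separate from the agents, means its rules can be reviewed and changed without touching agent logic.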

With these methods, companies can better navigate the inherent complexity of MAS governance. However, effective governance also requires us to think about agent interactions from a social perspective.

3. Applying social frameworks to govern AI collaboration

In human organizations, governance defines roles and establishes norms; together, these form a social framework. Multi-agent systems can benefit from a similar structure. For example, different agents could act as “specialists” or “team members” in a hierarchy or network.

Some ways to structure MAS governance with social frameworks in mind include:

- Role-based governance: Assign governance roles to agents based on their function. This is similar to how human teams have assigned roles, like managers and contributors. In MAS, one agent might oversee quality control while another manages data security within the team.

- Community-inclusive governance: Build feedback loops from end-users and stakeholders into the design process. This can help ensure that agents’ outputs align with user needs and expectations. Agents with the ability to incorporate user perspectives may even improve overall outcomes and adherence to values.

- Hierarchical oversight models: Organize agents into tiers. For example, a higher-level “governor” agent could manage and track the entire workflow for customer service and finance agents, overseeing their interactions and identifying where further alignment or intervention is necessary to meet company objectives.
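
Role-based governance and hierarchical oversight can be combined in a simple structure: each agent holds a role with an explicit set of permitted actions, and a governor agent one tier above reviews each action, logs the workflow, and flags anything outside a role for intervention. The Python sketch below assumes hypothetical roles and action names; it illustrates the shape of the idea, not any Agentforce feature.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    CUSTOMER_SERVICE = "customer_service"
    FINANCE = "finance"


# Hypothetical permission table: the actions each role may take on its own.
PERMISSIONS: dict[Role, set[str]] = {
    Role.CUSTOMER_SERVICE: {"answer_question", "create_case", "request_refund"},
    Role.FINANCE: {"issue_refund", "update_invoice"},
}


@dataclass
class Agent:
    name: str
    role: Role


@dataclass
class Governor:
    """Higher-tier agent that tracks the whole workflow and flags out-of-role actions."""
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)
    needs_intervention: list[tuple[str, str]] = field(default_factory=list)

    def review(self, agent: Agent, action: str) -> bool:
        allowed = action in PERMISSIONS[agent.role]
        self.audit_log.append((agent.name, action, allowed))  # full workflow trace
        if not allowed:
            self.needs_intervention.append((agent.name, action))
        return allowed


governor = Governor()
service = Agent("service_agent", Role.CUSTOMER_SERVICE)
finance = Agent("finance_agent", Role.FINANCE)

governor.review(service, "request_refund")  # within the service role
governor.review(finance, "issue_refund")    # within the finance role
governor.review(service, "issue_refund")    # outside the service role
print(governor.needs_intervention)          # [('service_agent', 'issue_refund')]
```

Because permissions live in one table, a human owner can audit or adjust a role without modifying the agents themselves.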

Applying social models to MAS governance introduces a human-centered approach, ensuring that multi-agent systems align closely with real-world organizational needs.

Multi-agent platform and governance framing

Building holistic governance for autonomous multi-agent systems

As MAS become increasingly central to business processes, companies must rethink governance from the ground up. Traditional structures that are effective for single agents need to be expanded before they can address the unique challenges of AI systems operating as independent, collaborative networks. Governance must adapt to ensure AI stays aligned with human oversight, values, and organizational goals.

By extending governance principles, designing for complexity, and applying social frameworks, businesses can develop robust governance models for MAS. In this era of agent-driven processes, now is the time to integrate governance as a foundational, holistic system across all levels of AI collaboration. This is an area of active research and implementation focus for both Salesforce AI Research (e.g., with the AgentLite multi-agent platform) and our Global AI Practice (with customer-facing workshops on Guardrails & Risk and Principles to Practice).

Take the next step with multi-agent systems

Evaluate existing frameworks for scalability and fit with MAS.

- Gather architectural and governance documents

- Revisit rationale and see if it remains relevant

- Understand how this fits into your enterprise AI strategy

Run “pre-mortem” risk assessments to identify key risks and mitigation strategies.

- Gather team members to identify risks and ideate on mitigation

Establish principles-to-practice alignment so that governance clearly reflects organizational values.

- Review organizational principles and values

- Ensure they are considered in the guardrail design and overall architecture

Start small and expand thoughtfully to manage complexity at each growth stage.

- Implement Agentforce with a limited use case

- Examine strengths, weaknesses, and nuances through experimentation

- Update the multi-agent governor design accordingly

- Identify the human agent responsible within the process
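
The output of a pre-mortem can be captured as a simple risk register. The Python sketch below scores each risk as likelihood × impact on an assumed 1 to 5 scale (a common convention, not one the steps above prescribe), ranks risks at or above an assumed threshold of 12, and lists any risk without a named human owner, echoing the final step above. The risks, mitigations, and owners are invented placeholders.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    mitigation: str
    owner: str = ""  # accountable human; empty means unassigned

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def review_register(risks: list[Risk], threshold: int = 12) -> tuple[list[Risk], list[Risk]]:
    """Return (risks scoring at or above threshold, highest first; risks with no owner)."""
    for r in risks:
        if not (1 <= r.likelihood <= 5 and 1 <= r.impact <= 5):
            raise ValueError(f"likelihood/impact out of range for: {r.description}")
    priority = sorted((r for r in risks if r.score >= threshold), key=lambda r: r.score, reverse=True)
    unowned = [r for r in risks if not r.owner]
    return priority, unowned


register = [
    Risk("Agents approve conflicting refunds", 3, 4,
         "Governor reviews refunds above a limit", "Finance ops lead"),
    Risk("Watchdog misses a new failure pattern", 2, 5,
         "Periodic red-teaming of agent interactions"),
    Risk("Customer data shared between agents unnecessarily", 4, 4,
         "Role-based data access", "Security lead"),
]

priority, unowned = review_register(register)
print([r.score for r in priority])       # [16, 12]
print([r.description for r in unowned])  # ['Watchdog misses a new failure pattern']
```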

Governance is key to using multi-agent systems safely and ethically. Together, we can create a future where intelligent systems transform our world and are built on trust.
