At the Gemini at Work event in September, we showcased how generative AI is transforming the way enterprises work. Across all the customer innovation we saw at the event, one thing was clear – if last year was about gen AI exploration and experimentation, this year is about achieving real-world impact.

Gen AI has the potential to revolutionize how we work, but only if its output is reliable and relevant. Large language models (LLMs), with their knowledge frozen in time during training, often lack access to the latest information and your internal data. In addition, they are by design creative and probabilistic, and therefore prone to hallucinations. And finally, they do not offer built-in source attribution. These limitations hinder their ability to provide up-to-date, contextually relevant and dependable responses.

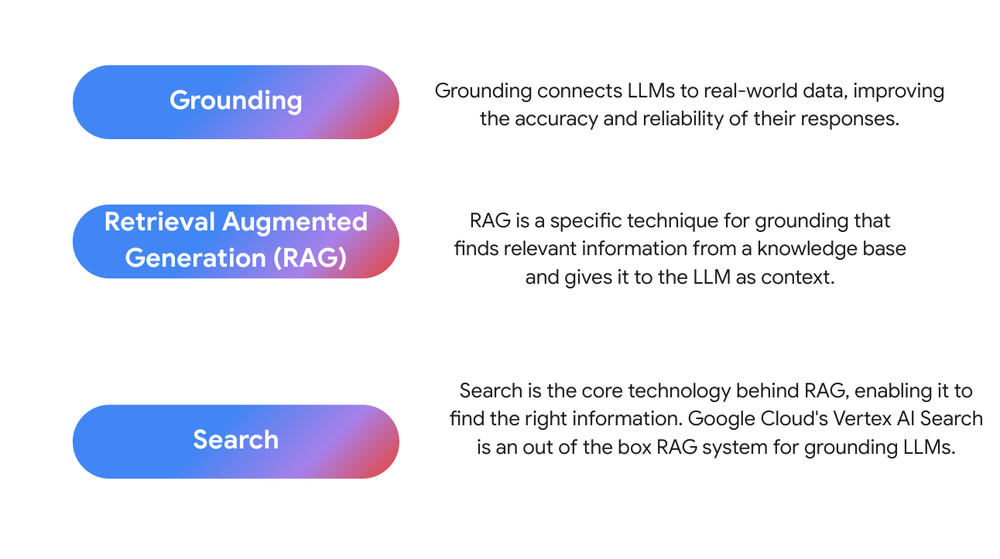

To overcome these challenges, we need to connect LLMs with sources of truth. This is where concepts like grounding, retrieval augmented generation (RAG), and search come into play. Grounding means providing an LLM with external information to root its response in reality, which reduces the chances of it hallucinating or making things up. RAG is a specific technique for grounding that finds relevant information from a knowledge base and gives it to the LLM as context. Search is the core retrieval technology behind RAG, as it’s how the system finds the right information in the knowledge base.
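To make the relationship between these three ideas concrete, here is a minimal sketch in Python. It is not how Vertex AI implements RAG: the knowledge base, the word-overlap scoring, and the function names `retrieve` and `build_grounded_prompt` are all assumptions made for illustration. Search finds the relevant passage, and grounding places it in the prompt as a numbered source the model can cite.

```python
import re

# Hypothetical in-memory knowledge base; a real system would index enterprise documents.
KNOWLEDGE_BASE = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available by chat from 8am to 6pm on weekdays.",
    "Premium plans include priority support and a dedicated account manager.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Search step: rank documents by how many query words they share (toy scoring)."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one word with the query.
    return [doc for score, doc in ranked[:top_k] if score > 0]


def build_grounded_prompt(query: str, passages: list[str]) -> str:
    """Grounding step: put the retrieved passages in the prompt as numbered sources."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"Sources:\n{sources}\n"
        f"Question: {query}"
    )


question = "How many days do refunds take?"
passages = retrieve(question, KNOWLEDGE_BASE)
prompt = build_grounded_prompt(question, passages)
print(prompt)
```

The resulting prompt would then be sent to an LLM. Production systems replace the word-overlap scoring with a proper search stack, but the flow of retrieve, then ground, then generate stays the same.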

To unlock the true potential of gen AI, businesses need to ground their LLMs in what we at Google call enterprise truth. This is trusted internal data across documents, emails and storage systems, third-party applications, and even fresh information from the internet that helps knowledge workers perform their jobs better.

By tapping into your enterprise truth, grounded LLMs can deliver more accurate, contextually relevant, and up-to-date responses, enabling you to use generative AI for real-world impact. This means enhanced customer service with more accurate and personalized support, automated tasks like generating reports and summarizing documents with greater accuracy, deeper insights derived from analyzing multiple data sources to identify trends and opportunities, and ultimately, driving innovation by developing new products and services based on a richer understanding of customer needs and market trends.

Now let’s look at how you can easily overcome these challenges with the latest enhancements from Vertex AI, Google Cloud’s AI platform.

Tap into the latest knowledge from the internet

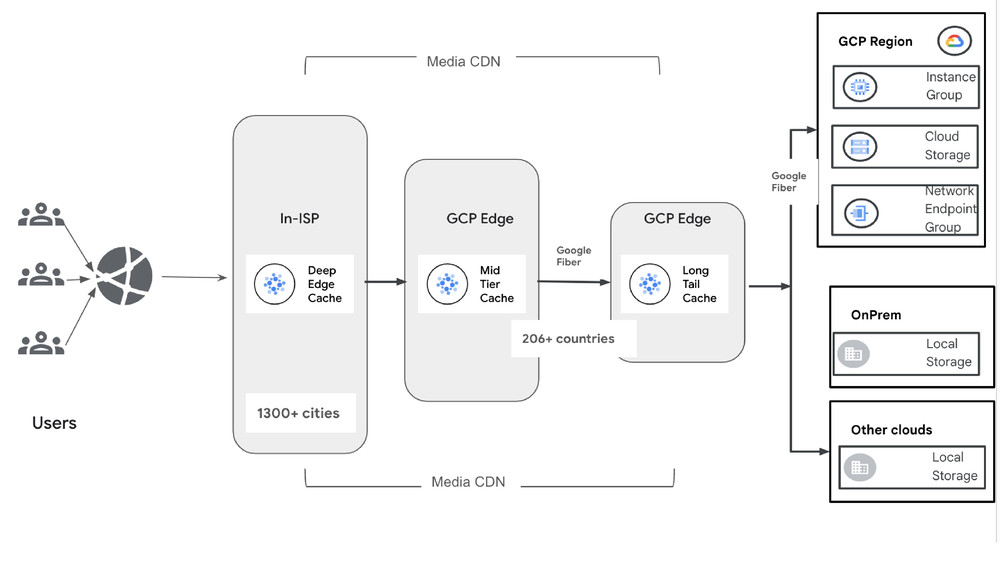

LLMs have a fundamental limitation: their knowledge is anchored to the data they were trained on, which becomes outdated over time. This affects the quality of the response to any question that needs fresh data – the latest news, company 10-K results, dates for a sports event or a concert. Grounding with Google Search allows the language model to find fresh information from the internet. It even provides source links so you can fact-check or learn more. Grounding with Google Search is offered with our Gemini models out-of-the-box. Just toggle to turn it on, and Gemini will ground the answer using Google Search.

If you’re not sure whether your next request requires grounding with Google Search, you can now use the new “dynamic retrieval” feature. Just turn it on and Gemini will interpret your query and predict whether it needs up-to-date information to answer accurately. You can set the prediction score threshold that determines when Gemini uses grounding with Google Search. This means you get the best of both worlds: high-quality results when you need them, and lower costs, because Gemini will only tap Google Search when a user’s query needs it.
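The threshold logic can be sketched in a few lines of Python. This is an illustration of the decision only, not the Gemini API: the function `decide_grounding`, the example scores, and the 0.5 threshold are all hypothetical, and the sketch assumes that a score at or above the threshold triggers grounding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoutingDecision:
    """Outcome of the grounding decision for one query."""
    use_search: bool
    prediction_score: float


def decide_grounding(prediction_score: float, threshold: float) -> RoutingDecision:
    """Ground with search only when the predicted need for fresh data meets the threshold.

    Assumption: both values lie in [0, 1] and a score equal to the threshold triggers search.
    """
    for name, value in (("prediction_score", prediction_score), ("threshold", threshold)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return RoutingDecision(
        use_search=prediction_score >= threshold,
        prediction_score=prediction_score,
    )


# Hypothetical prediction scores; in practice the model produces them per query.
EXAMPLE_SCORES = {
    "Who won last night's match?": 0.92,
    "Write a haiku about autumn.": 0.08,
}
THRESHOLD = 0.5  # illustrative value, not a documented default

for query, score in EXAMPLE_SCORES.items():
    decision = decide_grounding(score, THRESHOLD)
    route = "Google Search grounding" if decision.use_search else "model knowledge only"
    print(f"{score:.2f}  {route:<24}  {query}")
```

Lowering the threshold sends more queries to Google Search, trading cost for freshness. Raising it does the opposite.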

Connect data across all your enterprise truth

Connecting to fresh facts is just the start. The real value for any enterprise lies in grounding on its proprietary data. RAG is a technique that enhances LLMs by connecting them to non-training data sources, helping them to retrieve information from this data before generating a response. There are several options available for RAG, but many of those don’t work for enterprises because they lack quality, reliability, or scalability. The quality of grounded gen AI apps can only be as good as their ability to retrieve your data.

That’s where Vertex AI comes in. Whether you are looking for a simple solution that works out-of-the-box, want to build your own RAG system with APIs, or use highly performant vector embeddings for RAG, Vertex AI offers a comprehensive set of offerings to help meet your needs.

Here’s an easy guide to RAG for the enterprise:

First, use out-of-the-box RAG for most enterprise applications: Vertex AI Search simplifies the end-to-end information discovery process with Google-quality RAG, also known as search. With Vertex AI Search, Google Cloud manages your RAG service and all the various parts of building a RAG system: Optical Character Recognition (OCR), data understanding and annotation, smart chunking, embedding, indexing, storing, query rewriting, spell checking, and so on. Vertex AI Search connects to your data, including your documents, your websites, your databases, structured data, and also third-party apps like Jira and Slack with built-in connectors. The best part is that it can be set up in just a few minutes.

Developers can get a taste of grounding with Google Search and enterprise data in the Vertex Grounded Generation playground on GitHub, where you can compare grounded and ungrounded responses to queries side by side.

Then, build your own RAG for specific use cases: If you need to build your own RAG system, Vertex AI offers the various pieces off the shelf as individual APIs for layout parsing, ranking, grounded generation, check grounding, text embeddings and vector search. The layout parser can transform unstructured documents into structured representations and comes with multimodal understanding of charts and figures, which significantly enhances search quality across documents – like PDFs with embedded tables and images, which are challenging for many RAG systems.

Our vector search offering is particularly valuable for enterprises that need custom, highly performant, embeddings-based information retrieval. Vector search can scale to billions of vectors and find the nearest neighbors in a few milliseconds, making it suitable for the needs of large enterprises. Vector search now offers hybrid search, which combines semantic (embedding-based) and keyword (token-based) search to return the most relevant and accurate responses for your users.
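As a rough illustration of hybrid search, the Python sketch below ranks documents once by embedding similarity and once by keyword overlap, then merges the two rankings with reciprocal rank fusion (RRF). It is a toy under stated assumptions: the documents and 3-dimensional vectors are made up, brute-force cosine similarity stands in for an approximate nearest-neighbor index, and RRF is one common fusion method rather than a description of how Vertex AI vector search combines results.

```python
import math
import re

# Hypothetical documents and made-up 3-dimensional embeddings.
DOCS = {
    "doc-a": "Quarterly revenue report for the retail division",
    "doc-b": "Guide to resetting a forgotten account password",
    "doc-c": "Retail store opening hours and holiday schedule",
}
DOC_VECTORS = {
    "doc-a": [0.9, 0.1, 0.0],
    "doc-b": [0.0, 0.2, 0.9],
    "doc-c": [0.6, 0.7, 0.1],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_ranking(query_vector: list[float]) -> list[str]:
    """Rank all documents by embedding similarity (brute force, not an ANN index)."""
    return sorted(
        DOC_VECTORS,
        key=lambda doc_id: cosine_similarity(query_vector, DOC_VECTORS[doc_id]),
        reverse=True,
    )


def keyword_ranking(query: str) -> list[str]:
    """Rank documents that share at least one word with the query by overlap count."""
    query_tokens = set(re.findall(r"[a-z0-9]+", query.lower()))
    overlaps = {
        doc_id: len(query_tokens & set(re.findall(r"[a-z0-9]+", text.lower())))
        for doc_id, text in DOCS.items()
    }
    matched = [doc_id for doc_id, count in overlaps.items() if count > 0]
    return sorted(matched, key=lambda doc_id: overlaps[doc_id], reverse=True)


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge rankings: each document scores 1 / (k + rank) per list it appears in."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


query_text = "retail revenue"
query_vector = [0.8, 0.3, 0.0]  # hypothetical embedding of the query
fused = reciprocal_rank_fusion(
    [semantic_ranking(query_vector), keyword_ranking(query_text)]
)
print(fused)
```

Documents that rank well in both lists rise to the top, which is why hybrid search tends to help with queries that mix exact terms (product codes, names) with looser intent.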

No matter how you build your gen AI apps, thorough evaluation is essential to ensure they meet your specific needs. The gen AI evaluation service in Vertex AI empowers you to go beyond generic benchmarks and define your own evaluation criteria. This gives you a more accurate picture of how well a model aligns with your unique use case, whether it’s generating creative content or analyzing documents.
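To show what defining your own criteria can look like, here is a small Python sketch. It does not use the gen AI evaluation service or its API. The criteria `support_ratio` and `within_word_limit` and the `evaluate` helper are hypothetical, and the word-overlap measure is a deliberately crude stand-in for a real groundedness metric.

```python
import re
from typing import Callable

# A criterion takes (response, context) and returns a score between 0 and 1.
Criterion = Callable[[str, str], float]


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_ratio(response: str, context: str) -> float:
    """Share of distinct response words that also appear in the grounding context."""
    response_tokens = tokens(response)
    if not response_tokens:
        return 0.0
    return len(response_tokens & tokens(context)) / len(response_tokens)


def within_word_limit(limit: int) -> Criterion:
    """Build a criterion that scores 1.0 when the response has at most `limit` words."""
    def criterion(response: str, context: str) -> float:
        return 1.0 if len(response.split()) <= limit else 0.0
    return criterion


def evaluate(response: str, context: str, criteria: dict[str, Criterion]) -> dict[str, float]:
    """Apply every named criterion to one response and collect the scores."""
    return {name: criterion(response, context) for name, criterion in criteria.items()}


CRITERIA = {
    "support_ratio": support_ratio,
    "within_word_limit": within_word_limit(20),
}
context = "Refunds are issued within 14 days of a return request."

grounded_scores = evaluate("Refunds are issued within 14 days.", context, CRITERIA)
ungrounded_scores = evaluate("Refunds take 30 days.", context, CRITERIA)
print(grounded_scores)
print(ungrounded_scores)
```

The ungrounded response scores lower on `support_ratio` because "take" and "30" never appear in the context. A real evaluation would use stronger measures, but the pattern of named, use-case-specific criteria is the same.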

Moving beyond the hype for real world impact

The initial excitement surrounding gen AI has given way to a more pragmatic focus on real-world applications and tangible business value. Grounding is important for achieving this goal, ensuring that your AI models are not just generating text, but generating insights that are grounded in your unique enterprise truth.

Alaska Airlines is developing natural language search, providing travelers with a conversational experience powered by AI that’s akin to interacting with a knowledgeable travel agent. This chatbot aims to streamline travel booking, enhance customer experience, and reinforce brand identity.

Motorola Mobility’s Moto AI leverages Gemini and Imagen to help smartphone users unlock new levels of productivity, creativity, and enjoyment with features such as conversation summaries, notification digests, image creation, and natural language search — all with reliable responses grounded in Google Search.

Cintas is using Vertex AI Search to develop an internal knowledge center for customer service and sales teams to easily find key information.

Workday is using natural language processing in Vertex AI to make data insights more accessible for technical and non-technical users alike.

By embracing grounding, businesses can unlock the full potential of gen AI and lead the way in this transformative era. To learn more, check out my session from Gemini at Work where I cover our grounding offerings in more detail. Download our ebook to see how better search (including grounding) can lead to better business outcomes.

Try out Vertex AI Search today for RAG out-of-the-box to power your gen AI applications with our $1,000 free credit offer.