Routing in a Virtual Private Cloud (VPC) network in Google Cloud is distributed, scalable, and virtual; there aren’t any routing devices attached to the network. Google Cloud routes direct network traffic from a virtual machine to destinations that are internal or external to the VPC network. This approach, which is different from other cloud providers’, enhances network reliability by separating data, control, and management functions in software, simplifying network management and facilitating swift changes in networking infrastructure. This in turn helps reduce the time required for businesses to bring their products and services to market, providing a competitive advantage.

This blog explores the various routing options available from a virtual machine (VM) perspective, enabling you to seamlessly access:

- Applications (microservices or VM-based) deployed within the same VPC or a peered VPC.
- Managed services offered by Google Cloud, such as BigQuery, Cloud SQL, and Vertex AI Platform.
- SaaS solutions hosted on Google Cloud.
- Services hosted on-premises or in other cloud hyperscalers.
- Public or private services accessible through the internet.

Also, we explore how policy-based routes facilitate traffic inspection within a VPC in Google Cloud.

Route types and next hops

A Google Cloud route consists of three primary elements: a destination prefix in classless inter-domain routing (CIDR) format, a next hop, and a priority. Beyond solely relying on destination IP address, policy-based routes allow for the selection of the next hop based on additional factors, such as protocol and source IP address, which enable inserting appliances such as firewalls into a network traffic path.

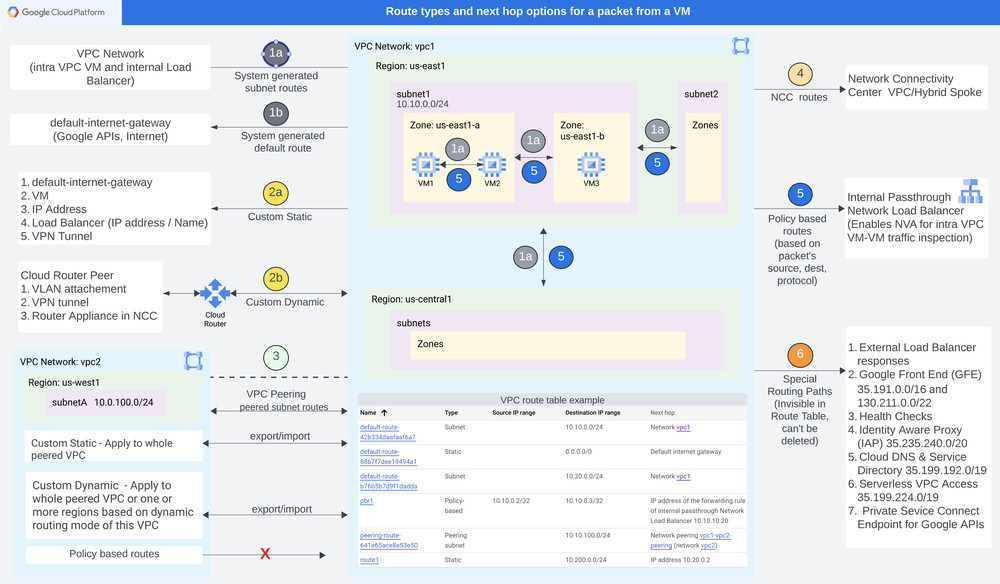

The below diagram presents potential next-hop destinations for a packet originating from a virtual machine within Google Cloud. It includes destinations such as virtual machines, IP addresses, load balancers, and VPN tunnels. It also illustrates the different types of routes available within Google Cloud.

Figure 1: Route Types and hop options for a packet from a VM (click to enlarge)

The following sections describe various types of routes and next-hops for each type of route, and their use cases.

System-generated routes

1a. Subnet routes

A subnet route specifies the path to destinations matching a configured Google Cloud subnet for resources such as VMs, Private Service Connect (PSC) endpoints, and internal load balancers within the VPC network. Its creation and deletion are tied to the lifecycle of the subnet. There are three types of subnet routes: local, peering, and Network Connectivity Center subnet routes.

Imagine you are developing an e-commerce application that uses multiple services. From a client machine that handles orders, you can reach each of the following services:

- A Product Catalog Service hosted in the same subnet as the client machine
- A load-balanced Order Validation Service hosted in a separate subnet
- A third-party Payment Processing Service located in a different VPC or organization, reached through a PSC endpoint
- A common Shipment Processing Service hosted in a VPC spoke of a Network Connectivity Center hub, accessible using Private NAT despite overlapping IP addresses between vpc1 and other VPC spokes in the hub

Google Cloud provides out-of-the-box routing for all these scenarios, eliminating the need for any additional custom route configuration.

1b. System-generated default routes

When you create a VPC network, the system generates a default route with the broadest possible destination, 0.0.0.0/0, and default-internet-gateway as the next hop. The default-internet-gateway next hop provides a path to external IP addresses, to Google APIs on their default domains, and to the private domains private.googleapis.com and restricted.googleapis.com.

You can delete this default route or replace it with a custom static route that has a more specific destination or a different next hop. For example, to access Google APIs using the private domain private.googleapis.com, create a static route for 199.36.153.8/30 with default-internet-gateway as the next hop. For more information, see the documentation on system-generated default routes.
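As a rough sketch of the private.googleapis.com example, the following command creates such a static route in vpc1. The route name `private-googleapis` is a placeholder chosen for illustration, and the priority is left at its default.

```shell
# Route traffic for private.googleapis.com (199.36.153.8/30) to the
# default internet gateway. The route name is a placeholder.
gcloud compute routes create private-googleapis \
    --network=vpc1 \
    --destination-range=199.36.153.8/30 \
    --next-hop-gateway=default-internet-gateway
```

This covers packet routing only. Resolving Google API hostnames to this range typically needs DNS configuration as well.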

Custom routes

2a. Static routes

You can create a static route for a specified destination CIDR range based on your needs. This route can direct packets to various next hops, including default-internet-gateway, a virtual machine (VM), an IP address, a VPN tunnel, or an internal passthrough load balancer. This lets you control the path of packets and optimize network traffic flow. Note that the destination CIDR range can’t conflict with local, peering, or Network Connectivity Center subnet routes. For more information, see the documentation on static routes.

```shell
# Create a custom static route
gcloud compute routes create route1 \
    --network=vpc1 \
    --destination-range=10.200.0.0/24 \
    --priority=100 \
    --next-hop-address=10.20.0.2
```

VPC1 route table:
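To inspect the route table from the command line, a listing along these lines should work. The `network:vpc1` filter is an assumption about how you want to scope the output. It is a pattern match, so it would also match other networks whose names contain `vpc1`.

```shell
# List subnet and static routes whose network field matches vpc1.
gcloud compute routes list --filter="network:vpc1"

# Show the details of the static route named route1.
gcloud compute routes describe route1
```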

2b. Dynamic routes

Dynamic routes are created, updated, and deleted by Cloud Router based on messages received through Border Gateway Protocol (BGP). The next hop can be a VLAN attachment, a Cloud VPN tunnel, or a router appliance VM instance in Network Connectivity Center. Depending on the dynamic routing mode of the VPC network (regional or global), these routes apply to resources in a single region or in all regions of the VPC network. To set up HA VPN gateways that connect two VPC networks and exchange routes between them dynamically, refer to Create HA VPN gateways to connect VPC networks.

Here is an example of a dynamic route received through a BGP session.
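Learned routes can also be inspected through the Cloud Router that owns the BGP session. In this sketch, the router name `router1` and the region are assumptions for illustration. Substitute your own values.

```shell
# Show BGP peer status and the best routes learned by the Cloud Router.
# router1 and us-east1 are placeholder values.
gcloud compute routers get-status router1 --region=us-east1

# Optionally switch the VPC network to global dynamic routing so that
# learned routes apply in all regions, not only the router's region.
gcloud compute networks update vpc1 --bgp-routing-mode=global
```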

VPC network peering routes

When a VPC network is connected with another VPC network through VPC peering, peered subnet routes define paths to reach resources in a peered network. Subnet routes are always exchanged and cannot be disabled, with the exception of IPv4 subnet routes that utilize privately used public IPv4 addresses. Refer to the documentation on route exchange options for various ways to share subnet, static, and dynamic routes with peered networks. Also note that there is a quota for the number of subnet, static, and dynamic routes per network or peering group based on the peering route type.

```shell
# VPC peering

# Create a network and a subnetwork
gcloud compute networks create vpc2 --subnet-mode=custom
gcloud compute networks subnets create subneta --network=vpc2 \
    --range=10.10.100.0/24 \
    --region=us-east1

# Create peerings between vpc1 and vpc2
gcloud compute networks peerings create vpc1-vpc2-peering --network=vpc1 --peer-network=vpc2
gcloud compute networks peerings create vpc2-vpc1-peering --network=vpc2 --peer-network=vpc1
```

VPC1 routes for us-east1 region:

VPC2 routes for us-east1 region:
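The routes exchanged over a peering can also be listed directly. This sketch reuses the peering name vpc1-vpc2-peering from the peering setup. The two directions show what vpc1 learns from vpc2 and what it advertises to vpc2.

```shell
# Routes that vpc1 receives from vpc2 in us-east1.
gcloud compute networks peerings list-routes vpc1-vpc2-peering \
    --network=vpc1 \
    --region=us-east1 \
    --direction=INCOMING

# Routes that vpc1 sends to vpc2 in us-east1.
gcloud compute networks peerings list-routes vpc1-vpc2-peering \
    --network=vpc1 \
    --region=us-east1 \
    --direction=OUTGOING
```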

Network Connectivity Center routes

Network Connectivity Center enables network connectivity among spoke resources that are connected to a central management resource called a hub. Network Connectivity Center supports two types of spokes: VPC and hybrid (VLAN attachment, VPN tunnel, or router appliance VM). With Network Connectivity Center you can achieve connectivity between VPC networks, between on-premises and VPC networks (site-to-cloud), or between external sites using Google’s network as a wide area network (site-to-site).

A Network Connectivity Center subnet route is a subnet IP address range in a VPC spoke connected to a Network Connectivity Center hub. Here is an example of the VPC route tables and the Network Connectivity Center hub route table, with next hops, for a hub (ncc-hub1) that has two VPC spokes (ncc-spoke1 backing vpc1, and ncc-spoke2 backing vpc3). Google Cloud automatically updates each VPC spoke’s VPC network route table and the Network Connectivity Center hub route table.

A VPC spoke can skip exporting any of its subnets, for example because a CIDR range overlaps with another spoke. If you’re using the gcloud command, you can do this with the --exclude-export-ranges option.

```shell
gcloud compute networks create vpc3 --subnet-mode=custom
gcloud compute networks subnets create subnetb --network=vpc3 --range=10.10.50.0/24 --region=us-east1

# NCC hub and VPC spokes
gcloud network-connectivity hubs create ncc-hub1
gcloud network-connectivity spokes linked-vpc-network create ncc-spoke1 \
    --hub=ncc-hub1 \
    --vpc-network=vpc1 \
    --global
gcloud network-connectivity spokes linked-vpc-network create ncc-spoke2 \
    --hub=ncc-hub1 \
    --vpc-network=vpc3 \
    --global
```
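If a spoke has a subnet range that overlaps with another spoke, the export exclusion could be applied when the spoke is created. The spoke name `ncc-spoke3`, the network `vpc4`, and the excluded range below are hypothetical values and not part of the setup described here.

```shell
# Create a VPC spoke that does not export 10.10.50.0/24 to the hub.
# ncc-spoke3, vpc4, and the excluded range are placeholder values.
gcloud network-connectivity spokes linked-vpc-network create ncc-spoke3 \
    --hub=ncc-hub1 \
    --vpc-network=vpc4 \
    --exclude-export-ranges=10.10.50.0/24 \
    --global
```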

VPC route table for a VPC network (vpc1)

VPC route table for a VPC network (vpc3)

Hub route table:
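The hub route table can be listed from the command line as well. This sketch assumes the hub's route table is named `default`. The exact flag spelling (`--route_table`) is worth confirming with `--help` for your gcloud version.

```shell
# List the routes in the default route table of ncc-hub1.
gcloud network-connectivity hubs route-tables routes list \
    --hub=ncc-hub1 \
    --route_table=default
```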

Policy-based routes (PBR)

So far you have seen how a next hop is determined based on the packet’s destination IP address. Policy-based routes go further: they let you specify a next hop based on the packet’s protocol and source IP address in addition to the destination IP address. Because policy-based routes are evaluated before other routes, they can redirect traffic to an internal passthrough Network Load Balancer, which lets you insert appliances such as firewalls into the path of network traffic. Note that other than policy-based routes, no other route type is allowed to have a destination that matches or fits within the destination of a local, peering, or Network Connectivity Center subnet route.

In the following example, the local subnet route sends traffic from VM1 to VM2 by default. When you add a policy-based route, VM1-to-VM2 traffic is instead redirected to an L4 load balancer, which forwards it to a network virtual appliance (NVA) implemented as a managed instance group. The NVA inspects the traffic before allowing it to reach its ultimate destination, VM2.

```shell
# Create a policy-based route (PBR) from VM1 to VM2
gcloud network-connectivity policy-based-routes create pbr1 \
    --source-range=10.10.0.2/32 \
    --destination-range=10.10.0.3/32 \
    --ip-protocol=ALL \
    --protocol-version=IPv4 \
    --network="projects/PROJECT_ID/global/networks/vpc1" \
    --next-hop-ilb-ip=10.10.10.20 \
    --description='intra-vpc traffic inspection route' \
    --priority=500 \
    --tags=client
```

VPC1 route table:

This policy-based route can be expanded to inspect any egress traffic from VMs, with some exceptions. For example, Google Cloud does not support using policy-based routes to route traffic to Google APIs and services.

Policy-based routes can be applied to all VMs or certain VMs selected by network tag, or to traffic entering the VPC network through Cloud Interconnect VLAN attachments. Policy-based routes are never exchanged through VPC Network Peering.
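Policy-based routes are managed through the network-connectivity command group, not through compute routes. A minimal sketch for reviewing pbr1, the route from the VM1-to-VM2 example:

```shell
# List all policy-based routes in the current project.
gcloud network-connectivity policy-based-routes list

# Show the full definition of pbr1, including its filter and next hop.
gcloud network-connectivity policy-based-routes describe pbr1
```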

Special route paths

VPC networks have special routes for certain services. These routes neither appear in your VPC network route table nor can be removed. Examples include the paths for responses to an external passthrough Network Load Balancer and to health checks. However, you can still allow or deny this traffic by using VPC firewall rules or Cloud NGFW firewall policies.
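For instance, health check probes follow a special path, but they still need an ingress allow rule to reach backends. The sketch below assumes backends in vpc1 serving on TCP port 80 and uses commonly documented health check source ranges. The rule name and port are placeholders, and the ranges should be checked against the documentation for your load balancer type.

```shell
# Allow health check probes to reach backends in vpc1 on TCP port 80.
# The rule name, port, and source ranges are assumptions to verify.
gcloud compute firewall-rules create fw-allow-health-checks \
    --network=vpc1 \
    --direction=INGRESS \
    --action=ALLOW \
    --rules=tcp:80 \
    --source-ranges=35.191.0.0/16,130.211.0.0/22
```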

Decision table

The table below summarizes possible scenarios for a virtual machine (VM) to access services hosted in Google Cloud, on-premises, or another cloud, along with potential routing options for each scenario.

| Scenario | Route option |
| --- | --- |
| Paths for an external load balancer, Google front end, Identity-Aware Proxy (IAP), Cloud DNS, Service Directory, health check, Serverless VPC Access, PSC endpoint for global Google APIs | Special route path |
| Service hosted in the same VPC | System-generated subnet route |
| Service hosted in another VPC | VPC peered subnet route; custom dynamic route through VPN; PSC endpoint; NCC hub subnet route |
| Google APIs | System-generated default or a custom static route to default-internet-gateway; a PSC endpoint |
| Internet | System-generated default or a custom static route to default-internet-gateway |
| Service hosted in other cloud or on-prem | Custom dynamic route |
| Intra-VPC traffic inspection | Policy-based route |

Conclusion and next steps

In this blog post, you’ve seen the various route types available in Google Cloud for a packet from a VM, helping you develop networking topologies based on your workload needs. For more information, refer to the public documentation on routing in Google Cloud. You might also want to try a policy-based route for traffic inspection, and get hands-on experience with some of the Network Connectivity Center features in Lab: NCC Site to Cloud with SD-WAN Appliance.