Generative AI diffusion models such as Stable Diffusion and Flux produce stunning visuals, empowering creators across various verticals with impressive image generation capabilities. However, generating high-quality images through sophisticated pipelines can be computationally demanding, even with powerful hardware like GPUs and TPUs, impacting both costs and time-to-result.

The key challenge lies in optimizing the entire pipeline to minimize cost and latency without compromising on image quality. This delicate balance is crucial for unlocking the full potential of image generation in real-world applications. For example, before reducing the model size to cut image generation costs, prioritize optimizing the underlying infrastructure and software to ensure peak model performance.

At Google Cloud Consulting, we’ve been assisting customers in navigating these complexities. We understand the importance of optimized image generation pipelines, and in this post, we’ll share three proven strategies to help you achieve both efficiency and cost-effectiveness, to deliver exceptional user experiences.

A comprehensive approach to optimization

We recommend having a comprehensive optimization strategy that addresses all aspects of the pipeline, from hardware to code to overall architecture. One way that we address this at Google Cloud is with AI Hypercomputer, a composable supercomputing architecture that brings together hardware like TPUs & GPUs, along with software and frameworks like Pytorch. Here’s a breakdown of the key areas we focus on:

1. Hardware optimization: Maximizing resource utilization

Image generation pipelines often require GPUs or TPUs for deployment, and optimizing hardware utilization can significantly reduce costs. Since GPUs cannot be allocated fractionally, underutilization is common, especially when scaling workloads, leading to inefficiency and increased cost of operation. To address this, Google Kubernetes Engine (GKE) offers several GPU sharing strategies to improve resource efficiency. Additionally, A3 High VMs with NVIDIA H100 80GB GPUs come in smaller sizes, helping you scale efficiently and control costs.

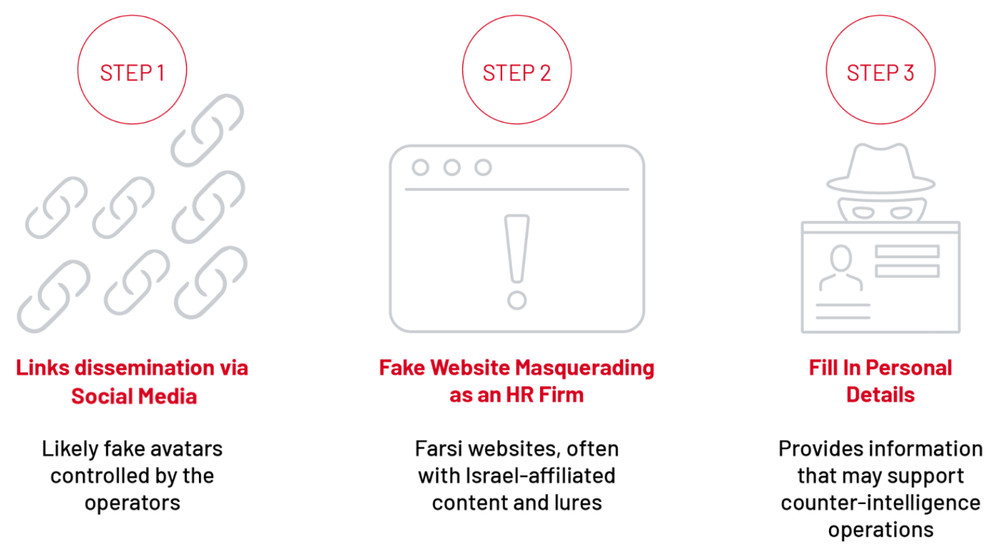

Some key GPU sharing strategies in GKE include:

- Multi-instance GPUs: In this strategy, GKE divides a single GPU in up to 7 slices, providing hardware isolation between the workloads. Each GPU slice has its own resources (compute, memory, and bandwidth) and can be independently assigned to a single container. You can leverage this strategy for inference workloads where resiliency and predictable performance is required. Please review the documented limitations of this approach before implementing and note that currently supported GPU types for multi-instance GPUs on GKE are NVIDIA A100 GPUs (40GB and 80GB), and NVIDIA H100 GPUs (80GB).

- GPU time-sharing: GPU time-sharing lets multiple containers access full GPU capacity using rapid context switching between processes; this is made possible by instruction-level preemption in NVIDIA GPUs. This approach is more suitable for bursty and interactive workloads, or for testing and prototyping purposes where full isolation is not required. With GPU time-sharing, you can optimize GPU cost and utilization by reducing GPU idling time. However, context switching may introduce some latency overhead for individual workloads.

- NVIDIA Multi-Process Service (MPS): NVIDIA MPS is a version of the CUDA API that lets multiple processes/containers run at the same time on the same physical GPU without interference. In this approach, you can run multiple small-to-medium-scale batch-processing workloads on a single GPU and maximize the throughput and hardware utilization. While implementing MPS, you must ensure that workloads using MPS can tolerate memory protection and error containment limitations.

Example illustration of GPU sharing strategies

2. Inference code optimization: Fine-tuning for efficacy

When you have an existing pipeline written natively in PyTorch, you have several options to optimize and reduce the pipeline execution time.

One way is to use PyTorch’s compile method, which enables Just-in-time (JIT) compiling PyTorch code into optimized kernels for faster execution, especially for the forward pass of the decoder step. You can do this through various backend computers such as NVIDIA TensorRT, OpenVINO or IPEX depending on the underlying hardware. You can also use certain compiler backends at training time. A similar JIT compilation capability is also available for other frameworks such as JAX.

Another way to improve code latency is to enable Flash Attention. By enabling the torch.backends.cuda.enable_flash_sdp attribute, PyTorch code natively runs Flash Attention where it is helpful in speeding up a given computation, while automatically selecting another attention mechanism if Flash isn’t optimal based on the inputs.

Additionally, to reduce latency, you also need to minimize data transfers between the GPU and CPU. Operations such as tensor loading, or comparing tensors and Python floats, incur significant overhead due to the data movement. Each time a tensor is compared with a floating-point value, it must be transferred to the CPU, incurring latency. Ideally, you should only load and offload a tensor on and off the GPU once in the entire image generation pipeline; this is especially important for image generation pipelines that utilize several models, where latency cascades with each model that is run. Tools such as PyTorch Profiler help us observe the time and memory utilization of a model.

- aside_block

- <ListValue: [StructValue([(‘title’, ‘$300 in free credit to try Google Cloud AI and ML’), (‘body’, <wagtail.rich_text.RichText object at 0x3eaa8d893bb0>), (‘btn_text’, ‘Start building for free’), (‘href’, ‘http://console.cloud.google.com/freetrial?redirectPath=/vertex-ai/’), (‘image’, None)])]>

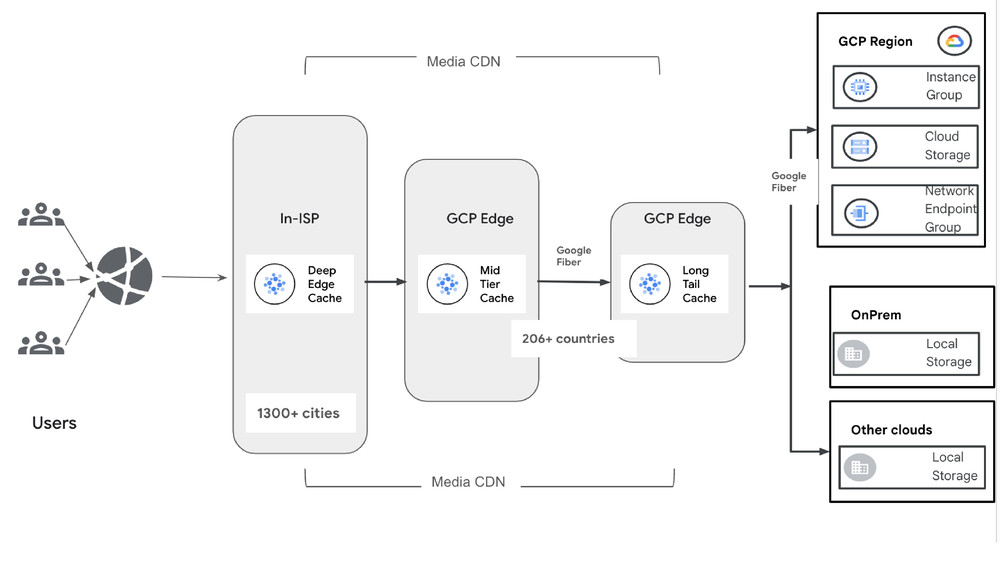

3. Inference pipeline optimization: Streamlining the workflow

While optimizing code can help speed up individual modules within a pipeline, you need to take a look at the big picture. Many multi-step image-generation pipelines cascade multiple models (e.g., samplers, decoders, and image and/or text embedding models) one after the other to generate the final image, often on a single container that has a single GPU attached.

For Diffusion-based pipelines, models such as decoders can have significantly higher computational complexity and hence take longer to execute, especially as compared with embedding models, which are generally faster. That means that certain models can cause a bottleneck in the generation pipeline. To optimize GPU utilization and mitigate this bottleneck, you may consider employing a multi-threaded queue-based approach for efficient task scheduling and execution. This approach enables parallel execution of different pipeline stages on the same GPU, allowing for concurrent processing of several requests. Efficiently distributing tasks among worker threads minimizes GPU idle time and maximizes resource utilization, ultimately leading to higher throughput.

Furthermore, by maintaining tensors on the same GPU throughout the process, you can reduce the overhead of CPU-to-GPU (and vice-versa) data transfers, further enhancing efficiency and reducing costs.

Processing time comparison between a stacked pipeline and a multithreaded pipeline for 2 concurrent requests

Final thoughts

Optimizing image-generation pipelines is a multifaceted process, but the rewards are significant. By adopting a comprehensive approach that includes hardware optimization for maximized resource utilization, code optimization for faster execution, and pipeline optimization for increased throughput, you can achieve substantial performance gains, reduce costs, and deliver exceptional user experiences. In our work with customers, we’ve consistently seen that implementing these optimization strategies can lead to significant cost savings without compromising image quality.

Ready to get started?

At Google Cloud Consulting, we’re dedicated to helping our customers build and deploy high-performing AI solutions. If you’re looking to optimize your image generation pipelines, connect with Google Cloud Consulting today, and we’ll work to help you unlock the full potential of your AI initiatives.

We extend our sincere gratitude to Akhil Sakarwal, Ashish Tendulkar, Abhijat Gupta, and Suraj Kanojia for their invaluable support and guidance throughout the crucial experimentation phase.