Introduction

In the final quarter of 2025, Google Threat Intelligence Group (GTIG) observed threat actors increasingly integrating artificial intelligence (AI) to accelerate the attack lifecycle, achieving productivity gains in reconnaissance, social engineering, and malware development. This report serves as an update to our November 2025 findings regarding the advances in threat actor usage of AI tools.

By identifying these early indicators and offensive proofs of concept, GTIG aims to arm defenders with the intelligence necessary to anticipate the next phase of AI-enabled threats, proactively thwart malicious activity, and continually strengthen both our classifiers and model.

Executive Summary

Google DeepMind and GTIG have identified an increase in model extraction attempts or “distillation attacks,” a method of intellectual property theft that violates Google’s terms of service. Throughout this report we’ve noted steps we’ve taken to thwart malicious activity, including Google detecting, disrupting, and mitigating model extraction activity. While we have not observed direct attacks on frontier models or generative AI products from advanced persistent threat (APT) actors, we observed and mitigated frequent model extraction attacks from private sector entities all over the world and researchers seeking to clone proprietary logic.

For government-backed threat actors, large language models (LLMs) have become essential tools for technical research, targeting, and the rapid generation of nuanced phishing lures. This quarterly report highlights how threat actors from the Democratic People’s Republic of Korea (DPRK), Iran, the People’s Republic of China (PRC), and Russia operationalized AI in late 2025 and improves our understanding of how adversarial misuse of generative AI shows up in campaigns we disrupt in the wild. GTIG has not yet observed APT or information operations (IO) actors achieving breakthrough capabilities that fundamentally alter the threat landscape.

This report specifically examines:

- Model Extraction Attacks: “Distillation attacks” are on the rise as a method for intellectual property theft over the last year.

- AI-Augmented Operations: Real-world case studies demonstrate how groups are streamlining reconnaissance and rapport-building phishing.

- Agentic AI: Threat actors are beginning to show interest in building agentic AI capabilities to support malware and tooling development.

- AI-Integrated Malware: There are new malware families, such as HONESTCUE, that experiment with using Gemini’s application programming interface (API) to generate code that enables download and execution of second-stage malware.

- Underground “Jailbreak” Ecosystem: Malicious services like Xanthorox are emerging in the underground, claiming to be independent models while actually relying on jailbroken commercial APIs and open-source Model Context Protocol (MCP) servers.

At Google, we are committed to developing AI boldly and responsibly, which means taking proactive steps to disrupt malicious activity by disabling the projects and accounts associated with bad actors, while continuously improving our models to make them less susceptible to misuse. We also proactively share industry best practices to arm defenders and enable stronger protections across the ecosystem. Throughout this report, we note steps we’ve taken to thwart malicious activity, including disabling assets and applying intelligence to strengthen both our classifiers and model so it’s protected from misuse moving forward. Additional details on how we’re protecting and defending Gemini can be found in the white paper “Advancing Gemini’s Security Safeguards.”

Direct Model Risks: Disrupting Model Extraction Attacks

As organizations increasingly integrate LLMs into their core operations, the proprietary logic and specialized training of these models have emerged as high-value targets. Historically, adversaries seeking to steal high-tech capabilities used conventional computer-enabled intrusion operations to compromise organizations and steal data containing trade secrets. For many AI technologies where LLMs are offered as services, this approach is no longer required; actors can use legitimate API access to attempt to “clone” select AI model capabilities.

During 2025, we did not observe any direct attacks on frontier models from tracked APT or information operations (IO) actors. However, we did observe model extraction attacks, also known as distillation attacks, on our AI models, to gain insights into a model’s underlying reasoning and chain-of-thought processes.

What Are Model Extraction Attacks?

Model extraction attacks (MEA) occur when an adversary uses legitimate access to systematically probe a mature machine learning model to extract information used to train a new model. Adversaries engaging in MEA use a technique called knowledge distillation (KD) to take information gleaned from one model and transfer the knowledge to another. For this reason, MEA are frequently referred to as “distillation attacks.”

Model extraction and subsequent knowledge distillation enable an attacker to accelerate AI model development quickly and at a significantly lower cost. This activity effectively represents a form of intellectual property (IP) theft.

Knowledge distillation (KD) is a common machine learning technique used to train “student” models from pre-existing “teacher” models. This often involves querying the teacher model for problems in a particular domain, and then performing supervised fine tuning (SFT) on the result or utilizing the result in other model training procedures to produce the student model. There are legitimate uses for distillation, and Google Cloud has existing offerings to perform distillation. However, distillation from Google’s Gemini models without permission is a violation of our Terms of Service, and Google continues to develop techniques to detect and mitigate these attempts.

Figure 1: Illustration of model extraction attacks

Google DeepMind and GTIG identified and disrupted model extraction attacks, specifically attempts at model stealing and capability extraction emanating from researchers and private sector companies globally.

Case Study: Reasoning Trace Coercion

A common target for attackers is Gemini’s exceptional reasoning capability. While internal reasoning traces are typically summarized before being delivered to users, attackers have attempted to coerce the model into outputting full reasoning processes.

One identified attack instructed Gemini that the “… language used in the thinking content must be strictly consistent with the main language of the user input.“

Analysis of this campaign revealed:

|

Scale: Over 100,000 prompts identified. |

Intent: The breadth of questions suggests an attempt to replicate Gemini’s reasoning ability in non-English target languages across a wide variety of tasks. |

Outcome: Google systems recognized this attack in real time and lowered the risk of this particular attack, protecting internal reasoning traces. |

Model Extraction and Distillation Attack Risks

Model extraction and distillation attacks do not typically represent a risk to average users, as they do not threaten the confidentiality, availability, or integrity of AI services. Instead, the risk is concentrated among model developers and service providers.

Organizations that provide AI models as a service should monitor API access for extraction or distillation patterns. For example, a custom model tuned for financial data analysis could be targeted by a commercial competitor seeking to create a derivative product, or a coding model could be targeted by an adversary wishing to replicate capabilities in an environment without guardrails.

|

Mitigations |

|

Model extraction attacks violate Google’s Terms of Service and may be subject to takedowns and legal action. Google continuously detects, disrupts, and mitigates model extraction activity to protect proprietary logic and specialized training data, including with real-time proactive defenses that can degrade student model performance. We are sharing a broad view of this activity to help raise awareness of the issue for organizations that build or operate their own custom models. |

Highlights of AI-Augmented Adversary Activity

A consistent finding over the past year is that government-backed attackers misuse Gemini for coding and scripting tasks, gathering information about potential targets, researching publicly known vulnerabilities, and enabling post-compromise activities. In Q4 2025, GTIG’s understanding of how these efforts translate into real-world operations improved as we saw direct and indirect links between threat actor misuse of Gemini and activity in the wild.

Figure 2: Threat actors are leveraging AI across all stages of the attack lifecycle

Supporting Reconnaissance and Target Development

APT actors used Gemini to support several phases of the attack lifecycle, including a focus on reconnaissance and target development to facilitate initial compromise. This activity underscores a shift toward AI-augmented phishing enablement, where the speed and accuracy of LLMs can bypass the manual labor traditionally required for victim profiling. Beyond generating content for phishing lures, LLMs can serve as a strategic force multiplier during the reconnaissance phase of an attack, allowing threat actors to rapidly synthesize open-source intelligence (OSINT) to profile high-value targets, identify key decision-makers within defense sectors, and map organizational hierarchies. By integrating these tools into their workflow, threat actors can move from initial reconnaissance to active targeting at a faster pace and broader scale.

-

UNC6418, an unattributed threat actor, misused Gemini to conduct targeted intelligence gathering, specifically seeking out sensitive account credentials and email addresses. Shortly after, GTIG observed the threat actor target all these accounts in a phishing campaign focused on Ukraine and the defense sector. Google has taken action against this actor by disabling the assets associated with this activity.

-

Temp.HEX, a PRC-based threat actor, misused Gemini and other AI tools to compile detailed information on specific individuals, including targets in Pakistan, and to collect operational and structural data on separatist organizations in various countries. While we did not see direct targeting as a result of this research, shortly after the threat actor included similar targets in Pakistan in their campaign. Google has taken action against this actor by disabling the assets associated with this activity.

Phishing Augmentation

Defenders and targets have long relied on indicators such as poor grammar, awkward syntax, or lack of cultural context to help identify phishing attempts. Increasingly, threat actors now leverage LLMs to generate hyper-personalized, culturally nuanced lures that can mirror the professional tone of a target organization or local language.

This capability extends beyond simple email generation into “rapport-building phishing,” where models are used to maintain multi-turn, believable conversations with victims to build trust before a malicious payload is ever delivered. By lowering the barrier to entry for non-native speakers and automating the creation of high-quality content, adversaries can largely erase those “tells” and improve the effectiveness of their social engineering efforts.

-

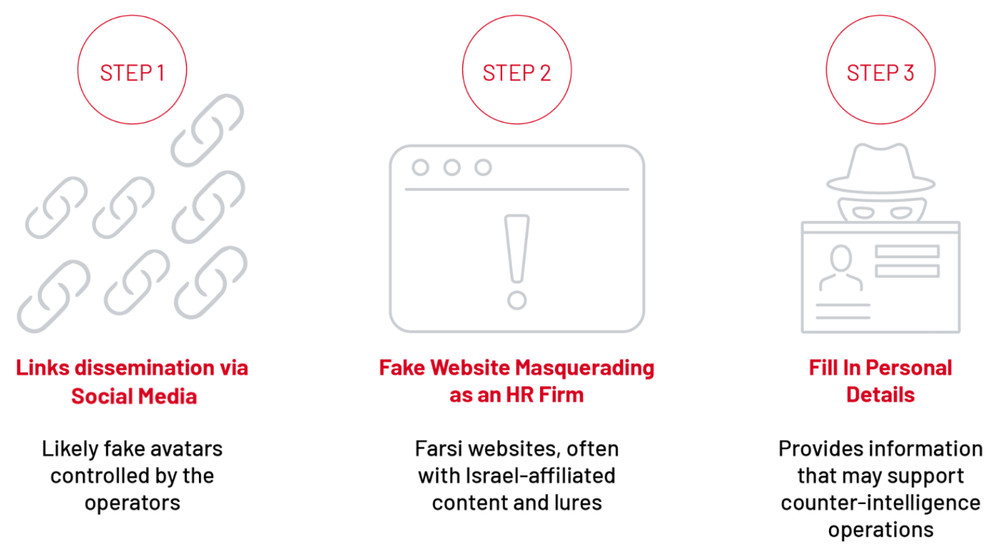

The Iranian government-backed actor APT42 leveraged generative AI models, including Gemini, to significantly augment reconnaissance and targeted social engineering. APT42 misuses Gemini to search for official emails for specific entities and conduct reconnaissance on potential business partners to establish a credible pretext for an approach. This includes attempts to enumerate the official email addresses for specific entities and to conduct research to establish a credible pretext for an approach. By providing Gemini with the biography of a target, APT42 misused Gemini to craft a good persona or scenario to get engagement from the target. As with many threat actors tracked by GTIG, APT42 uses Gemini to translate into and out of local languages, as well as to better understand non-native-language phrases and references. Google has taken action against this actor by disabling the assets associated with this activity.

-

The North Korean government-backed actor UNC2970 has consistently focused on defense targeting and impersonating corporate recruiters in their campaigns. The group used Gemini to synthesize OSINT and profile high-value targets to support campaign planning and reconnaissance. This actor’s target profiling included searching for information on major cybersecurity and defense companies and mapping specific technical job roles and salary information. This activity blurs the distinction between routine professional research and malicious reconnaissance, as the actor gathers the necessary components to create tailored, high-fidelity phishing personas and identify potential soft targets for initial compromise. Google has taken action against this actor by disabling the assets associated with this activity.

Threat Actors Continue to Use AI to Support Coding and Tooling Development

State-sponsored actors continue to misuse Gemini to enhance all stages of their operations, from reconnaissance and phishing lure creation to command-and-control (C2 or C&C) development and data exfiltration. We have also observed activity demonstrating an interest in using agentic AI capabilities to support campaigns, such as prompting Gemini with an expert cybersecurity persona, or attempting to create an AI-integrated code auditing capability.

|

Agentic AI refers to artificial intelligence systems engineered to operate with a high degree of autonomy, capable of reasoning through complex tasks, making independent decisions, and executing multi-step actions without constant human oversight. Cyber criminals, nation-state actors, and hacktivist groups are showing a growing interest in leveraging agentic AI for malicious purposes, including automating spear-phishing attacks, developing sophisticated malware, and conducting disruptive campaigns. While we have detected a tool, AutoGPT, advertising the alleged generation and maintenance of autonomous agents, we have not yet seen evidence of these capabilities being used in the wild. However, we do anticipate that more tools and services claiming to contain agentic AI capabilities will likely enter the underground market. |

APT31 employed a highly structured approach by prompting Gemini with an expert cybersecurity persona to automate the analysis of vulnerabilities and generate targeted testing plans. The PRC-based threat actor fabricated a scenario, in one case trialing Hexstrike MCP tooling, and directing the model to analyze remote code execution (RCE), web application firewall (WAF) bypass techniques, and SQL injection test results against specific US-based targets. This automated intelligence gathering to identify technological vulnerabilities and organizational defense weaknesses. This activity explicitly blurs the line between a routine security assessment query and a targeted malicious reconnaissance operation. Google has taken action against this actor by disabling the assets associated with this activity.

|

” |

|

Threat actors fabricated scenarios, potentially in order to generate penetration test prompts. |

Figure 3: Sample of APT31 prompting

Figure 4: APT31’s misuse of Gemini mapped across the attack lifecycle

UNC795, a PRC-based actor, relied heavily on Gemini throughout their entire attack lifecycle. GTIG observed the group consistently engaging with Gemini multiple days a week to troubleshoot their code, conduct research, and generate technical capabilities for their intrusion activity. The threat actor’s activity triggered safety systems, and Gemini did not comply with the actor’s attempts to create policy-violating capabilities.

The group also employed Gemini to create an AI-integrated code auditing capability, likely demonstrating an interest in agentic AI utilities to support their intrusion activity. Google has taken action against this actor by disabling the assets associated with this activity.

Figure 5: UNC795’s misuse of Gemini mapped across the attack lifecycle

We observed activity likely associated with the PRC-based threat actor APT41, which leveraged Gemini to accelerate the development and deployment of malicious tooling, including for knowledge synthesis, real-time troubleshooting, and code translation. In particular, multiple times the actor gave Gemini open-source tool README pages and asked for explanations and use case examples for specific tools. Google has taken action against this actor by disabling the assets associated with this activity.

Figure 6: APT41’s misuse of Gemini mapped across the attack lifecycle

In addition to leveraging Gemini for the aforementioned social engineering campaigns, the Iranian threat actor APT42 uses Gemini as an engineering platform to accelerate the development of specialized malicious tools. The threat actor is actively engaged in developing new malware and offensive tooling, leveraging Gemini for debugging, code generation, and researching exploitation techniques. Google has taken action against this actor by disabling the assets associated with this activity.

Figure 7: APT42’s misuse of Gemini mapped across the attack lifecycle

|

Mitigations |

|

These activities triggered Gemini’s safety responses, and Google took additional, broader action to disrupt the threat actors’ campaigns based on their operational security failures. Additionally, we’ve taken action against these actors by disabling the assets associated with this activity and making updates to prevent further misuse. Google DeepMind has used these insights to strengthen both classifiers and the model itself, enabling it to refuse to assist with these types of attacks moving forward. |

Using Gemini to Support Information Operations

GTIG continues to observe IO actors use Gemini for productivity gains (research, content creation, localization, etc.), which aligns with their previous use of Gemini. We have identified Gemini activity that indicates threat actors are soliciting the tool to help create articles, generate assets, and aid them in coding. However, we have not identified this generated content in the wild. None of these attempts have created breakthrough capabilities for IO campaigns. Threat actors from China, Iran, Russia, and Saudi Arabia are producing political satire and propaganda to advance specific ideas across both digital platforms and physical media, such as printed posters.

|

Mitigations |

|

For observed IO campaigns, we did not see evidence of successful automation or any breakthrough capabilities. These activities are similar to our findings from January 2025 that detailed how bad actors are leveraging Gemini for productivity gains, rather than novel capabilities. We took action against IO actors by disabling the assets associated with these actors’ activity, and Google DeepMind used these insights to further strengthen our protections against such misuse. Observations have been used to strengthen both classifiers and the model itself, enabling it to refuse to assist with this type of misuse moving forward. |

Continuing Experimentation with AI-Enabled Malware

GTIG continued to observe threat actors experiment with AI to implement novel capabilities in malware families in late 2025. While we have not encountered experimental AI-enabled techniques resulting in revolutionary paradigm shifts in the threat landscape, these proof-of-concept malware families are early indicators of how threat actors can implement AI techniques as part of future operations. We expect this exploratory testing will increase in the future.

In addition to continued experimentation with novel capabilities, throughout late 2025 GTIG observed threat actors integrating conventional AI-generated capabilities into their intrusion operations such as the COINBAIT phishing kit. We expect threat actors will continue to incorporate AI throughout the attack lifecycle including: supporting malware creation, improving pre-existing malware, researching vulnerabilities, conducting reconnaissance, and/or generating lure content.

Outsourcing Functionality: HONESTCUE

In September 2025, GTIG observed malware samples, which we track as HONESTCUE, leveraging Gemini’s API to outsource functionality generation. Our examination of HONESTCUE malware samples indicates the adversary’s incorporation of AI is likely designed to support a multi-layered approach to obfuscation by undermining traditional network-based detection and static analysis.

HONESTCUE is a downloader and launcher framework that sends a prompt via Google Gemini’s API and receives C# source code as the response. Notably, HONESTCUE shares capabilities similar to PROMPTFLUX’s “just-in-time” (JIT) technique that we previously observed; however, rather than leveraging an LLM to update itself, HONESTCUE calls the Gemini API to generate code that operates the “stage two” functionality, which downloads and executes another piece of malware. Additionally, the fileless secondary stage of HONESTCUE takes the C# source code received from the Gemini API and uses the legitimate .NET CSharpCodeProvider framework to compile and execute the payload directly in memory. This approach leaves no payload artifacts on the disk. We have also observed the threat actor use content delivery networks (CDNs) like Discord CDN to host the final payloads.

Figure 8: HONESTCUE malware

We have not associated this malware with any existing clusters of threat activity; however, we suspect this malware is being developed by developers who possess a modicum of technical expertise. Specifically, the small iterative changes across many samples as well as the single VirusTotal submitter, potentially testing antivirus capabilities, suggests a singular actor or small group. Additionally, the use of Discord to test payload delivery and the submission of Discord Bots indicates an actor with limited technical sophistication. The consistency and clarity of the architecture coupled with the iterative progression of the examined malware samples strongly suggest this is a single actor or small group likely in the proof-of-concept stage of implementation.

HONESTCUE’s use of a hard-coded prompt is not malicious in its own right, and, devoid of any context related to malware, it is unlikely that the prompt would be considered “malicious.” Outsourcing a facet of malware functionality and leveraging an LLM to develop seemingly innocuous code that fits into a bigger, malicious construct demonstrates how threat actors will likely embrace AI applications to augment their campaigns while bypassing security guardrails.

|

|

Figure 9: Example of a hard-coded prompt

|

|

|

|

Figure 11: Example of a hard-coded prompt

AI-Generated Phishing Kit: COINBAIT

In November 2025, GTIG identified COINBAIT, a phishing kit, whose construction was likely accelerated by AI code generation tools, masquerading as a major cryptocurrency exchange for credential harvesting. Based on direct infrastructure overlaps and the use of attributed domains, we assess with high confidence that a portion of this activity overlaps with UNC5356, a financially motivated threat cluster that makes use of SMS- and phone-based phishing campaigns to target clients of financial organizations, cryptocurrency-related companies, and various other popular businesses and services.

An examination of the malware samples indicates the kit was built using the AI-powered platform Lovable AI based on the use of the lovableSupabase client and lovable.app for image hosting.

-

By hosting content on a legitimate, trusted service, the actor increases the likelihood of bypassing network security filters that would otherwise block the suspicious primary domain.

-

The phishing kit was wrapped in a full React Single-Page Application (SPA) with complex state management and routing. This complexity is indicative of code generated from high-level prompts (e.g., “Create a Coinbase-style UI for wallet recovery”) using a framework like Lovable AI.

-

Another key indicator of LLM use is the presence of verbose, developer-oriented logging messages directly within the malware’s source code. These messages—consistently prefixed with “? Analytics:”—provide a real-time trace of the kit’s malicious tracking and data exfiltration activities and serve as a unique fingerprint for this code family.

|

Phase |

Log Message Examples |

|

Initialization |

? Analytics: Initializing… |

|

? Analytics: Session created in database: |

|

|

Credential Capture |

? Analytics: Tracking password attempt: |

|

? Analytics: Password attempt tracked to database: |

|

|

Admin Panel Fetching |

? RecoveryPhrasesCard: Fetching recovery phrases directly from database… |

|

Routing/Access Control |

? RouteGuard: Admin redirected session, allowing free access to |

|

? RouteGuard: Session approved by admin, allowing free access to |

|

|

Error Handling |

? Analytics: Database error for password attempt: |

Table 2: Example console.log messages extracted from COINBAIT source code

We also observed the group employ infrastructure and evasion tactics for their operations, including proxying phishing domains through Cloudflare to obscure the attacker IP addresses and hotlinking image assets in phishing pages directly from Lovable AI.

The introduction of the COINBAIT phishing kit would represent an evolution in UNC5356’s tooling, demonstrating a shift toward modern web frameworks and legitimate cloud services to enhance the sophistication and scalability of their social engineering campaigns. However, there is at least some evidence to suggest that COINBAIT may be a service provided to multiple disparate threat actors.

|

Mitigations |

|

Organizations should strongly consider implementing network detection rules to alert on traffic to backend-as-a-service (BaaS) platforms like Supabase that originate from uncategorized or newly registered domains. Additionally, organizations should consider enhancing security awareness training to warn users against entering sensitive data into website forms. This includes passwords, multifactor authentication (MFA) backup codes, and account recovery keys. |

Cyber Crime Use of AI Tooling

In addition to misusing existing AI-enabled tools and services across the industry, there is a growing interest and marketplace for AI tools and services purpose-built to enable illicit activities. Tools and services offered via underground forums can enable low-level actors to augment the frequency, scope, efficacy, and complexity of their intrusions despite their limited technical acumen and financial resources. While financially motivated threat actors continue experimenting, they have not yet made breakthroughs in developing AI tooling.

Threat Actors Leveraging AI Services for Social Engineering in ‘ClickFix’ Campaigns

While not a new malware technique, GTIG observed instances in which threat actors abused the public’s trust in generative AI services to attempt to deliver malware. GTIG identified a novel campaign where threat actors are leveraging the public sharing feature of generative AI services, including Gemini, to host deceptive social engineering content. This activity, first observed in early December 2025, attempts to trick users into installing malware via the well-established “ClickFix” technique. This ClickFix technique is used to socially engineer users to copy and paste a malicious command into the command terminal.

The threat actors were able to bypass safety guardrails to stage malicious instructions on how to perform a variety of tasks on macOS, ultimately distributing variants of ATOMIC, an information stealer that targets the macOS environment and has the ability to collect browser data, cryptocurrency wallets, system information, and files in the Desktop and Documents folders. The threat actors behind this campaign have used a wide range of AI chat platforms to host their malicious instructions, including ChatGPT, CoPilot, DeepSeek, Gemini, and Grok.

The campaign’s objective is to lure users, primarily those on Windows and macOS systems, into manually executing malicious commands. The attack chain operates as follows:

-

A threat actor first crafts a malicious command line that, if copied and pasted by a victim, would infect them with malware.

-

Next, the threat actor manipulates the AI to create realistic-looking instructions to fix a common computer issue (e.g., clearing disk space or installing software), but gives the malicious command line to the AI as the solution.

-

Gemini and other AI tools allow a user to create a shareable link to specific chat transcripts so a specific AI response can be shared with others. The attacker now has a link to a malicious ClickFix landing page hosted on the AI service’s infrastructure.

-

The attacker purchases malicious advertisements or otherwise directs unsuspecting victims to the publicly shared chat transcript.

-

The victim is fooled by the AI chat transcript and follows the instructions to copy a seemingly legitimate command-line script and paste it directly into their system’s terminal. This command will download and install malware. Since the action is user initiated and uses built-in system commands, it may be harder for security software to detect and block.

Figure 12: ClickFix attack chain

There were different lures generated for Windows and MacOS, and the use of malicious advertising techniques for payload distribution suggests the targeting is likely fairly broad and opportunistic.

This approach allows threat actors to leverage trusted domains to host their initial stage of instruction, relying on social engineering to carry out the final, highly destructive step of execution. While a widely used approach, this marks the first time GTIG observed the public sharing feature of AI services being abused as trusted domains.

|

Mitigations |

|

In partnership with Ads and Safe Browsing, GTIG is taking actions to both block the malicious content and restrict the ability to promote these types of AI-generated responses. |

Observations from the Underground Marketplace: Threat Actors Abusing AI API Keys

While legitimate AI services remain popular tools for threat actors, there is an enduring market for AI services specifically designed to support malicious activity. Current observations of English- and Russian-language underground forums indicates there is a persistent appetite for AI-enabled tools and services, which aligns with our previous assessment of these platforms.

However, threat actors struggle to develop custom models and instead rely on mature models such as Gemini. For example, “Xanthorox” is an underground toolkit that advertises itself as a custom AI for cyber offensive purposes, such as autonomous code generation of malware and development of phishing campaigns. The model was advertised as a “bespoke, privacy preserving self-hosted AI” designed to autonomously generate malware, ransomware, and phishing content. However, our investigation revealed that Xanthorox is not a custom AI but actually powered by several third-party and commercial AI products, including Gemini.

This setup leverages a key abuse vector: the integration of multiple open-source AI products—specifically Crush, Hexstrike AI, LibreChat-AI, and Open WebUI—opportunistically leveraged via Model Context Protocol (MCP) servers to build an agentic AI service upon commercial models.

In order to misuse LLMs services for malicious operations in a scalable way, threat actors need API keys and resources that enable LLM integrations. This creates a hijacking risk for organizations with substantial cloud resources and AI resources.

In addition, vulnerable open-source AI tools are commonly exploited to steal AI API keys from users, thus facilitating a thriving black market for unauthorized API resale and key hijacking, enabling widespread abuse, and incurring costs for the affected users. For example, the One API and New API platform, popular with users facing country-level censorship, are regularly harvested for API keys by attackers, exploiting publicly known vulnerabilities such as default credentials, insecure authentication, lack of rate limiting, XSS flaws, and API key exposure via insecure API endpoints.

|

Mitigations |

|

The activity was identified and successfully mitigated. Google Trust & Safety took action to disable and mitigate all identified accounts and AI Studio projects associated with Xanthorox. These observations also underscore a broader security risk where vulnerable open-source AI tools are actively exploited to steal users’ AI API keys, thus facilitating a black market for unauthorized API resale and key hijacking, enabling widespread abuse, and incurring costs for the affected users. |

Building AI Safely and Responsibly

We believe our approach to AI must be both bold and responsible. That means developing AI in a way that maximizes the positive benefits to society while addressing the challenges. Guided by our AI Principles, Google designs AI systems with robust security measures and strong safety guardrails, and we continuously test the security and safety of our models to improve them.

Our policy guidelines and prohibited use policies prioritize safety and responsible use of Google’s generative AI tools. Google’s policy development process includes identifying emerging trends, thinking end-to-end, and designing for safety. We continuously enhance safeguards in our products to offer scaled protections to users across the globe.

At Google, we leverage threat intelligence to disrupt adversary operations. We investigate abuse of our products, services, users, and platforms, including malicious cyber activities by government-backed threat actors, and work with law enforcement when appropriate. Moreover, our learnings from countering malicious activities are fed back into our product development to improve safety and security for our AI models. These changes, which can be made to both our classifiers and at the model level, are essential to maintaining agility in our defenses and preventing further misuse.

Google DeepMind also develops threat models for generative AI to identify potential vulnerabilities and creates new evaluation and training techniques to address misuse. In conjunction with this research, Google DeepMind has shared how they’re actively deploying defenses in AI systems, along with measurement and monitoring tools, including a robust evaluation framework that can automatically red team an AI vulnerability to indirect prompt injection attacks.

Our AI development and Trust & Safety teams also work closely with our threat intelligence, security, and modelling teams to stem misuse.

The potential of AI, especially generative AI, is immense. As innovation moves forward, the industry needs security standards for building and deploying AI responsibly. That’s why we introduced the Secure AI Framework (SAIF), a conceptual framework to secure AI systems. We’ve shared a comprehensive toolkit for developers with resources and guidance for designing, building, and evaluating AI models responsibly. We’ve also shared best practices for implementing safeguards, evaluating model safety, red teaming to test and secure AI systems, and our comprehensive prompt injection approach.

Working closely with industry partners is crucial to building stronger protections for all of our users. To that end, we’re fortunate to have strong collaborative partnerships with numerous researchers, and we appreciate the work of these researchers and others in the community to help us red team and refine our defenses.

Google also continuously invests in AI research, helping to ensure AI is built responsibly, and that we’re leveraging its potential to automatically find risks. Last year, we introduced Big Sleep, an AI agent developed by Google DeepMind and Google Project Zero, that actively searches and finds unknown security vulnerabilities in software. Big Sleep has since found its first real-world security vulnerability and assisted in finding a vulnerability that was imminently going to be used by threat actors, which GTIG was able to cut off beforehand. We’re also experimenting with AI to not only find vulnerabilities, but also patch them. We recently introduced CodeMender, an experimental AI-powered agent using the advanced reasoning capabilities of our Gemini models to automatically fix critical code vulnerabilities.

Indicators of Compromise (IOCs)

To assist the wider community in hunting and identifying activity outlined in this blog post, we have included IOCs in a free GTI Collection for registered users.

About the Authors

Google Threat Intelligence Group focuses on identifying, analyzing, mitigating, and eliminating entire classes of cyber threats against Alphabet, our users, and our customers. Our work includes countering threats from government-backed actors, targeted zero-day exploits, coordinated information operations (IO), and serious cyber crime networks. We apply our intelligence to improve Google’s defenses and protect our users and customers.