As developers gear up for re:Invent 2024, they again face the unique challenges of physical racing. What are the obstacles? Let’s have a look.

In this blog post, I will look at what makes physical AWS DeepRacer racing (a real car on a real track) different from racing in the virtual world (a model in a simulated 3D environment). I will cover the basics, the differences between virtual and physical racing, and the steps I have taken to get a deeper understanding of the challenge.

The AWS DeepRacer League is wrapping up. In two days, 32 racers will face off in Las Vegas for one last time. This year, the qualification has been all-virtual, so the transition from virtual to physical racing will be a challenge.

The basics

AWS DeepRacer relies on the racer training a model within the simulator, a 3D environment built around ROS and Gazebo, originally built on AWS RoboMaker.

The trained model is subsequently used for either virtual or physical races. The model comprises a convolutional neural network (CNN) and an action space translating class labels into steering and throttle movement. In the basic scenario involving a single camera, a 160 x 120 pixel, 8-bit grayscale image (similar to the following figure) is captured 15 times per second and passed through the neural network, and the action with the highest weight (probability) is executed.

The small piece of AI magic is that during model evaluation (racing) there’s no context; each image is processed independently of the image before it, and without knowledge of the state of the car itself. If you process the images in reverse order the results remain the same!
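The per-frame selection described above can be sketched in a few lines of Python. This is a minimal illustration, not the AWS DeepRacer inference code: the action list, the probabilities, and the `select_action` helper are all made up for the example.

```python
# Hypothetical discrete action space: list index = class label from the network.
ACTION_SPACE = [
    {"steering_angle": -30.0, "speed": 1.0},
    {"steering_angle": 0.0, "speed": 2.0},
    {"steering_angle": 30.0, "speed": 1.0},
]


def select_action(probabilities, action_space=ACTION_SPACE):
    """Return the action whose class has the highest probability."""
    if len(probabilities) != len(action_space):
        raise ValueError("one probability per action is required")
    best_index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return action_space[best_index]


# Made-up network outputs for two consecutive frames.
frames = [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]]

# Each frame is handled on its own, so the order of processing does not matter.
forward = [select_action(frame) for frame in frames]
backward = [select_action(frame) for frame in reversed(frames)]
```

Because `select_action` keeps no state between calls, reversing `backward` gives exactly the same list as `forward`.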

Virtual compared to physical

The virtual worlds are 3D worlds created in Gazebo, and the software is written in Python and C++ using ROS as the framework. As shown in the following image, the 3D simulation is fairly flat, with basic textures and surfaces. There are few or no reflections or shine, and the environment is as visually clean as you make it. Input images are captured 15 times per second.

Within this world a small car is simulated. Compared to a real car, the model is very basic and lacks quite a few of the things that make a real car work: There is no suspension, the tires are rigid cylinders, there is no Ackermann steering, and there are no differentials. It’s almost surprising that this car can drive at all. On the positive side the camera is perfect; irrespective of lighting conditions you get crisp clear pictures with no motion blur.

A typical virtual car drives at speeds between 0.5 and 4.0 meters per second, depending on the shape of the track. If you go too fast, it will often oversteer and spin out of the turn because of the relatively low grip.

In contrast, the real world is less perfect. Simulation-to-real gap #1 is visual noise created by light, reflections (if the track is printed on reflective material), and background noise (for example, if the barriers around the track are too low and the car sees people and objects in the background). Input images are captured 30 times per second.

The car itself—based on the readily available WLToys A979—has all the things the model car doesn’t: proper tires, suspension, and differential. One problem is that the car is heavy—around 1.5 kg—and the placement of some components causes the center of gravity to be very high. This causes simulation-to-real gap #2: Roll and pitch during corners at high speeds cause the camera to rotate, confusing the neural network as the horizon moves.

Gap #3 comes from motion blur when the light is too dim; the blur can cause the dashed centerline to look like a solid line, making it hard to distinguish the centerline from the solid inner and outer lines, as shown in the following figure.
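A toy one-dimensional example shows why blur makes a dashed line look solid. It is a sketch under simple assumptions: the dash length, the blur width, and the `box_blur` helper are invented for illustration and are not measured from the real camera.

```python
def box_blur(row, width):
    """Average each pixel with its neighbours along the direction of travel."""
    half = width // 2
    blurred = []
    for i in range(len(row)):
        window = row[max(0, i - half): i + half + 1]
        blurred.append(sum(window) / len(window))
    return blurred


# One strip of pixels along a dashed line: 4 bright pixels, 4 dark pixels, repeated.
dashed = ([1.0] * 4 + [0.0] * 4) * 4

# Without blur, the dashes have full contrast against the gaps.
sharp_contrast = max(dashed) - min(dashed)

# With a blur about as long as one dash-plus-gap period, the contrast collapses.
blurred = box_blur(dashed, width=9)
interior = blurred[8:-8]  # ignore the edges, where the window is cut short
blurred_contrast = max(interior) - min(interior)
```

When the blur length approaches the dash period, `blurred_contrast` falls far below `sharp_contrast`, and the strip reads as one uniformly gray line.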

The steering geometry, the differentials, the lack of engineering precision of the A979, and the corresponding difficulty in calibrating it cause gap #4. Even if the model wants to go straight, the car still pulls left or right, needing constant correction to stay on track. This is most noticeable when the car is unable to drive down the straights in a straight line.

The original AWS DeepRacer, without modifications, has a smaller speed range of about 2 meters per second. It has better grip but suffers from the previously mentioned roll movements. If you go too fast, it will understeer and potentially roll over. Since 2023, the AWS pit crews have operated their fleets of AWS DeepRacers with shock spacers to stiffen the suspension, reduce the roll, and increase the maximum effective speed.

Four questions

Looking at the sim-to-real gaps there are four questions that we want to explore:

How can we train the model to better handle the real world? This includes altering the simulator to close some of the gaps, combined with adapting the reward function, action space, and training methodology to make better use of this simulator.

How can we better evaluate what the car does, and why? In the virtual world, we can perform log analysis to investigate; in the real world this has not yet been possible.

How can we evaluate our newly trained models? A standard AWS DeepRacer track, with its size of 8 meters x 6 meters, is prohibitively large. Is it possible to downscale the track to fit in a home?

Will a modified car perform better? Upgrade my AWS DeepRacer with better shocks? Add ball bearings and shims to improve steering precision? Or build a new lighter car based on a Raspberry Pi?

Solutions

To answer these questions, some solutions are required to support the experiments. The following assumes that you’re using Deepracer-for-Cloud to run the training locally or in an Amazon Elastic Compute Cloud (Amazon EC2) instance. We won’t go into the details but provide references that will enable you to try things out on your own.

Customized simulator

The first thing to look at is how you can alter the simulator. The simulator code is available, and modifying it doesn’t require too many skills. You can alter the car and the physics of the world or adjust the visual environment.

Change the environment

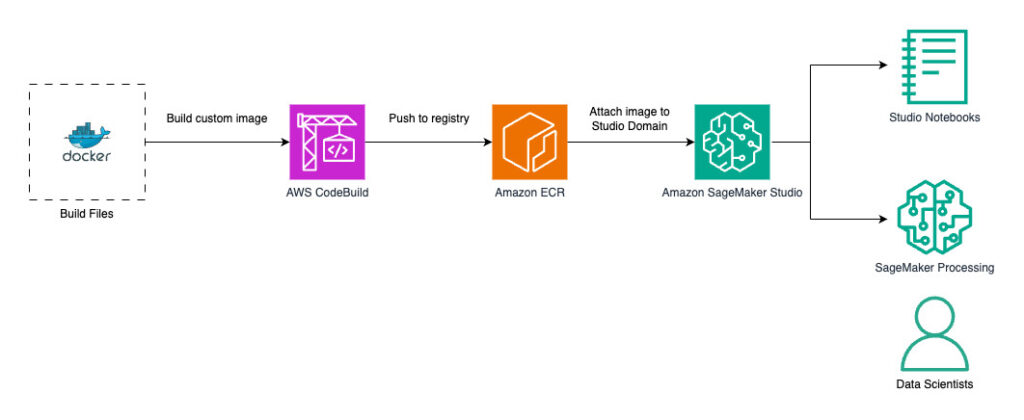

Changing the environment means altering the 3D world. This can be done by altering the features in a pre-existing track by adding or removing track parts (such as lines), changing lighting, adding background features (such as walls or buildings), swapping out textures, and so on. Making changes to the world will require building a new Docker image, which can take quite some time, but there are ways to speed that up. Going a step further, it’s also possible to make the world programmatically (command line or code) alterable during run-time.

The starting point is the track COLLADA (.dae) files found in the meshes folder. You can import one into Blender (shown in the following figure), make your changes, and export the file again. Note that lights and camera positions from Blender aren’t considered by Gazebo. To alter the lighting conditions, you will have to alter the .world file in worlds; these are XML files in SDFormat.
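Because the world files are plain XML, lighting changes can be scripted. The following is a minimal sketch that assumes the lights are declared as SDFormat `<light>` elements with a `<diffuse>` child; the sample world and the `dim_lights` helper are made up for illustration and are not taken from the simulator repository.

```python
import xml.etree.ElementTree as ET

# Made-up minimal world; real .world files contain much more.
SAMPLE_WORLD = """<sdf version="1.6">
  <world name="default">
    <light name="sun" type="directional">
      <diffuse>0.8 0.8 0.8 1</diffuse>
    </light>
  </world>
</sdf>"""


def dim_lights(world_xml, factor):
    """Scale the RGB part of every light's diffuse color, leaving alpha alone."""
    root = ET.fromstring(world_xml)
    for light in root.iter("light"):
        diffuse = light.find("diffuse")
        if diffuse is None or not diffuse.text:
            continue
        values = [float(v) for v in diffuse.text.split()]
        rgb = [min(1.0, channel * factor) for channel in values[:3]]
        diffuse.text = " ".join(f"{v:.3f}" for v in rgb + values[3:])
    return ET.tostring(root, encoding="unicode")


dimmed_world = dim_lights(SAMPLE_WORLD, 0.5)
```

Writing `dimmed_world` back to a copy of the file, rather than over the original, keeps an unmodified world to compare against.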

See Custom Tracks for some examples of tuned tracks.

Car and physics

The competition cars owned by AWS can’t be altered, so the objective of tuning the car in the simulator is to make it behave in ways more similar to the real one. Trained neural networks have an embedded expectation of what will happen next: the simulated car learned that by taking a specific action, it would get a turn of a given radius. If the simulator car steers more or less than the physical one in a given situation, the outcome becomes unpredictable.

The simulated car lacks Ackermann steering and differentials, but its wheels can deflect up to 30 degrees, whereas real wheels only go to a bit more than 20 degrees outwards and less than that inwards. My experience is that the real car, surprisingly enough, still has a shorter turning radius than the virtual one.

The car models are found in the urdf folder. There are three different cars, relating to the different versions of physics, which you configure in your action space (model_metadata.json). Today, only the deepracer (v3 and v4 physics) and deepracer_kinematics (v5 physics) models are relevant. There are variant models for single camera and for stereo camera, both with and without the LIDAR.
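As a rough sketch, an action space file can be generated with a short script. The key names used here (`action_space`, `sensor`, `version`) follow the layout commonly seen in model_metadata.json files, but treat them as assumptions and check them against a file from your own training setup; `build_metadata` is a hypothetical helper.

```python
import json


def build_metadata(steering_angles, speeds, physics_version):
    """Build a discrete action space: one action per steering/speed pair."""
    actions = [
        {"steering_angle": angle, "speed": speed}
        for angle in steering_angles
        for speed in speeds
    ]
    return {
        "action_space": actions,
        "sensor": ["FRONT_FACING_CAMERA"],  # assumed single-camera setup
        "version": str(physics_version),    # assumed key selecting the physics version
    }


metadata = build_metadata([-30, -15, 0, 15, 30], [1.0, 2.0], physics_version=4)
metadata_json = json.dumps(metadata, indent=2)
```

Generating the file this way makes it easy to train otherwise identical models against different physics versions and compare how they behave on the physical car.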

Each physics version is different; the big question is what impact, if any, each version has on the behavior of the physical car.

Version 3: Steering and throttle are managed through a PID controller, making speed and steering changes smooth (and slow). The simulation environment runs at all times, including during image processing and inference, leading to a higher latency between image capture and the action taking effect.

Version 4: Steering and throttle are managed through a PID controller, but the world is put on hold during inference, reducing the latency.

Version 5: Steering and throttle are managed through a position and velocity controller, and the world is put on hold during inference, almost eliminating latency. (This is very unnatural; the car can take alternating 30-degree left and right turns and will go almost straight ahead.)

The PID controller for v3 and v4 can be changed in the racecar control file. By changing the P, I, and D values, you can tune how fast or how slow the car accelerates and steers.
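To get a feel for what the gains do, here is a generic textbook PID loop driving a toy speed model. It is not the simulator's controller: the `PID` class, the `steps_to_reach` helper, the gain values, and the assumption that the controller output acts as an acceleration are all illustrative.

```python
class PID:
    """Textbook PID controller with proportional, integral, and derivative terms."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.previous_error = None

    def update(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def steps_to_reach(kp, target=2.0, tolerance=0.05, dt=1 / 15, max_steps=500):
    """Count control steps until the toy car is within tolerance of the target speed."""
    pid = PID(kp=kp, ki=0.0, kd=0.0)  # P-only, to keep the comparison simple
    speed = 0.0
    for step in range(1, max_steps + 1):
        # Assumption: the controller output is applied as an acceleration.
        speed += pid.update(target, speed, dt) * dt
        if abs(target - speed) <= tolerance:
            return step
    return max_steps


slow_response = steps_to_reach(kp=1.0)
fast_response = steps_to_reach(kp=5.0)
```

A larger P gain reaches the target speed in fewer steps, which is the "how fast or how slow" trade-off described above. In a real system, too large a gain leads to overshoot and oscillation.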

You can also tune the friction. In our simulator, friction is defined for the wheels, not the surfaces that the car drives on. The values (called mu and mu2) are found in racecar.gazebo; increasing them (once per tire!) will allow the car to drive faster without spinning.
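The link between friction and speed can be estimated with the textbook point-mass model for a flat turn, where the maximum speed is sqrt(mu * g * r). This is a simplification and not how Gazebo computes tire forces; the friction values and radius below are made up.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def max_corner_speed(mu, radius_m):
    """Highest speed at which friction alone can hold a point mass on a flat turn."""
    return math.sqrt(mu * G * radius_m)


# Illustrative values only: same 1 m turn, two different friction coefficients.
low_grip_speed = max_corner_speed(mu=0.5, radius_m=1.0)
high_grip_speed = max_corner_speed(mu=1.0, radius_m=1.0)
```

In this model, doubling the friction coefficient raises the cornering speed limit by a factor of sqrt(2), not 2, so large changes to mu give more modest speed gains.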

Finally, I implemented an experimental version of the Ackermann steering geometry including differentials. Why? When turning, a car’s wheels follow two circles with the same center point, the inner one having a smaller radius than the outer one. In short, the inner wheels have to steer more (larger curvature) but rotate more slowly (smaller circumference) than the outer wheels.
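The geometry can be written down directly. The sketch below uses the standard Ackermann relations and is not the experimental simulator implementation; the `ackermann` helper and the wheelbase, track width, and turn radius values are invented for the example.

```python
import math


def ackermann(wheelbase, track_width, turn_radius, speed):
    """Steering angles (degrees) for the front wheels and speeds for the rear wheels.

    turn_radius is measured from the turn center to the middle of the rear axle.
    """
    inner_radius = turn_radius - track_width / 2
    outer_radius = turn_radius + track_width / 2
    if inner_radius <= 0:
        raise ValueError("turn radius must exceed half the track width")
    return {
        # The inner wheel follows the tighter circle, so it steers more.
        "inner_angle": math.degrees(math.atan(wheelbase / inner_radius)),
        "outer_angle": math.degrees(math.atan(wheelbase / outer_radius)),
        # Differential: wheel speed is proportional to the radius of its circle.
        "inner_speed": speed * inner_radius / turn_radius,
        "outer_speed": speed * outer_radius / turn_radius,
    }


# Made-up dimensions in meters and a speed in meters per second.
turn = ackermann(wheelbase=0.165, track_width=0.16, turn_radius=0.5, speed=1.0)
```

The result shows both effects at once: `inner_angle` is larger than `outer_angle`, while `inner_speed` is lower than `outer_speed`.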

Customized car software

The initial work to create an altered software stack for the original AWS DeepRacer started in 2022. The first experiments included operating the AWS DeepRacer with an R/C controller and capturing the camera images and IMU data to create an in-car video. There was a lot to learn about ROS2, including creating a custom node for publishing IMU sensor data and capturing and creating videos on the fly. During the Berlin Summit in 2022, I also got to give my modified car a spin on the track!

In the context of physical racing, the motivation for customizing the car software is to obtain more information—what does the car do, and why. Watching the following video, you can clearly see the rolling movement in the turns, and the blurring of certain parts of the image discussed earlier.

The work triggered a need to alter several of the open source AWS DeepRacer packages, and included work such as optimizing the performance from camera to inference through compressing images and enabling GPU and compute stick acceleration of the inference. This turned into several scripts comprising all the changes to the different nodes and creating an upgraded software package that could be installed on an original AWS DeepRacer car.

The work evolved, and a logging mechanism using ROS Bag allowed us to analyze not only pictures, but also the actions that the car took. Using the deepracer-viz library of Jochem Lugtenburg, a fellow AWS DeepRacer community leader, I added a GradCam overlay on the video feed (shown in the following video), which gives a better understanding of what’s going on.

The outcome of this has evolved into the community AWS DeepRacer Custom Car repository, which allows anyone to upgrade their AWS DeepRacer with improved software with two commands and without having to compile the modules themselves!

Benefits are:

Performance improvement by using compressed image transport for the main processing pipeline.

Inference using OpenVINO with Intel GPU (original AWS DeepRacer), OpenVINO with Myriad Neural Compute Stick (NCS2), or TensorFlow Lite.

Model Optimizer caching, speeding up switching of models.

Capture in-car camera and inference results to a ROS Bag for logfile analysis.

UI tweaks and fixes.

Support for Raspberry Pi 4, enabling us to create the DeepRacer Pi!

Testing on a custom track

Capturing data is great, but you need a way to test it all—bringing models trained in a customized environment onto a track to see what works and what doesn’t.

The question turned out to be: How hard is it to make a track that has the same design as the official tracks, but that takes up less space than the 8m x 6m of the re:Invent 2018 track? After re:Invent 2023, I started to investigate. The goal was to create a custom track that would fit in my garage with a theoretical maximum size of 5.5m x 4.5m. The track should be printable on vinyl in addition to being available in the simulator for virtual testing.

After some trial and error, it proved to be quite straightforward, even if it requires multiple steps, starting in a Jupyter Notebook, moving into a vector drawing program (Inkscape), and finalizing in Blender (to create the simulator meshes).

The trapezoid track shown in the following two figures (center line and final sketch) is a good example of how to create a brand new track. The notebook starts with eight points in an array and builds out the track step by step, adding the outer line, center line, and color.
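The idea of growing a track out of a handful of center line points can be sketched as follows. This is not the notebook's code: the eight coordinates, the half width, and the `offset_closed_path` helper are made up, and the simple neighbour-based normal is only one of several ways to offset a path.

```python
import math


def offset_closed_path(points, distance):
    """Shift every point of a closed path sideways by `distance`.

    The normal at each point comes from the direction between its two
    neighbours (assumed distinct). Positive distances shift to the left
    of the travel direction.
    """
    shifted = []
    count = len(points)
    for i, (x, y) in enumerate(points):
        prev_x, prev_y = points[i - 1]
        next_x, next_y = points[(i + 1) % count]
        dx, dy = next_x - prev_x, next_y - prev_y
        length = math.hypot(dx, dy)
        normal_x, normal_y = -dy / length, dx / length  # left-hand normal
        shifted.append((x + normal_x * distance, y + normal_y * distance))
    return shifted


# Eight made-up center line points in meters, listed counter-clockwise.
center_line = [
    (0.0, 0.0), (2.0, 0.0), (4.0, 0.0), (3.5, 1.0),
    (3.0, 2.0), (2.0, 2.0), (1.0, 2.0), (0.5, 1.0),
]

HALF_WIDTH = 0.3  # hypothetical half track width in meters

# For a counter-clockwise path, "left" points towards the inside of the track.
inner_line = offset_closed_path(center_line, HALF_WIDTH)
outer_line = offset_closed_path(center_line, -HALF_WIDTH)
```

With only eight points the corners are coarse; interpolating more points along the center line before offsetting gives smoother inner and outer lines.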

In the end I chose to print a narrower version of Trapezoid (Trapezoid Narrow, shown in the following figure) to fit behind my garage, with dimensions of 5.20m x 2.85m including the green borders around the track. I printed it on PVC with a weight of 500 grams per square meter. The comparatively heavy material was a good choice. It prevents folds and wrinkles and generally ensures that the track stays in place even when you walk on it.

Around the track, I added a boundary of mesh PVC mounted on some 20 x 20 centimeter aluminum poles. Not entirely a success, because the light shone through and I needed to add a lining of black fleece. The following image shows the completed track before the addition of black fleece.

Experiments and conclusions

re:Invent is just days away. Experiments are still running, and because I need to fight my way through the Wildcard race, this is not the time to include all the details. Let’s just say that things aren’t always as straightforward as expected.

As a preview of what’s going on, I’ll end this post with the latest iteration of the in-car video, showing an AWS DeepRacer Pi doing laps in the garage. Check back after re:Invent for the big reveal!

About the author

Lars Lorentz Ludvigsen is a technology enthusiast who was introduced to AWS DeepRacer in late 2019 and was instantly hooked. Lars works as a Managing Director at Accenture where he helps clients to build the next generation of smart connected products. In addition to his role at Accenture, he’s an AWS Community Builder who focuses on developing and maintaining the AWS DeepRacer community’s software solutions.