Google’s Agent Development Kit (ADK) gives you the building blocks to create powerful agentic systems. These multi-step agents can plan, loop, collaborate, and call tools dynamically to solve problems on their own. However, this flexibility also makes them unpredictable, leading to potential issues like incomplete outputs, unexpected costs, and security risks. To help you manage this complexity, Datadog LLM Observability now provides automatic instrumentation for systems built with ADK. This integration gives you the visibility to monitor agent behavior, track costs and errors, and optimize agents for response quality and safety through offline experimentation and online evaluation without extensive manual setup.

This matters because agentic systems are complex: interactions between agents and the nondeterministic nature of LLMs make responses difficult to predict.

Common risks when running these agents include:

-

Pace of change: New foundation models drop weekly and “best-practice” prompting patterns change just as fast. Teams must constantly evaluate new combinations.

-

Multi-agent handoffs: If one agent produces low-quality output, it can cascade downstream and cause other agents to make poor decisions.

-

Loops and retries: Planners can get stuck calling the same tool repeatedly, such as retrying a search query indefinitely, which causes latency spikes.

-

Hidden costs: A single misrouted planner step can multiply token usage or API calls, pushing costs over budget.

-

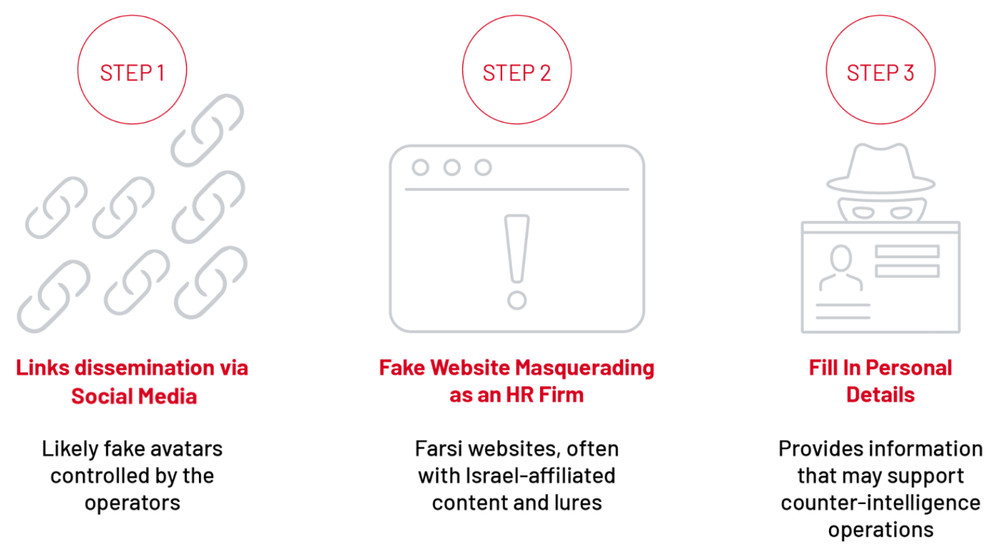

Safety and accuracy: LLM responses may contain hallucinations, sensitive data, or prompt injection attempts, risking security incidents and reduced customer trust.

Finally, ADK is just one of many agentic frameworks on the market, and instrumenting each one manually adds another learning curve to an already tedious and error-prone process.

Trace agent decisions and unexpected behaviors

Datadog LLM Observability addresses these challenges by automatically instrumenting and tracing your ADK agents, so you can start evaluating your agents offline and monitoring them in production in minutes, without code changes. You can visualize every step and planner choice, from agent orchestration to tool calls, on a single trace timeline.

For example, if an agent selects an incorrect tool to respond to a user query, it can yield unexpected errors or inaccurate responses. You can use Datadog’s visualizations to pinpoint the exact step where the incorrect tool was selected, making troubleshooting easier and helping you reproduce the issue.
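Datadog surfaces this directly on the trace timeline. As a rough illustration of the underlying idea, the sketch below walks a trace's spans in start-time order and returns the first tool call that falls outside the set of tools expected for the query. The `Span` class, its fields, and the tool names are hypothetical simplifications, not Datadog's span schema or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """A simplified, hypothetical trace span (not Datadog's actual schema)."""
    name: str          # e.g. "planner" or "tool:search_flights"
    kind: str          # "agent", "llm", or "tool"
    start_ms: int
    duration_ms: int
    error: bool = False


def first_unexpected_tool(spans: list[Span], allowed_tools: set[str]) -> Optional[Span]:
    """Return the earliest tool span whose tool is not in allowed_tools, or None."""
    for span in sorted(spans, key=lambda s: s.start_ms):
        if span.kind == "tool" and span.name.removeprefix("tool:") not in allowed_tools:
            return span
    return None


trace = [
    Span("orchestrator", "agent", 0, 2400),
    Span("planner", "llm", 10, 600),
    Span("tool:search_hotels", "tool", 620, 900),
    Span("responder", "llm", 1530, 850, error=True),
]

culprit = first_unexpected_tool(trace, allowed_tools={"search_flights"})
print(culprit.name, culprit.start_ms)  # tool:search_hotels 620
```

Sorting by start time matters because the wrong tool choice is usually the root cause, while later spans (like the failing `responder` above) are its symptoms.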

Monitor token usage and latency

Sudden increases in latency or cost are often a sign of trouble in agentic applications. Datadog lets you view token usage and latency per tool, branch, and workflow to identify where errors happened and how they affected downstream steps.
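To make the per-tool breakdown concrete, here is a minimal sketch that rolls up call counts, latency, and tokens by tool from a list of span records. The record fields (`tool`, `latency_ms`, `input_tokens`, `output_tokens`) are assumptions for illustration; they are not the format Datadog exports.

```python
from collections import defaultdict

# Hypothetical span records from one workflow; field names are illustrative only.
spans = [
    {"tool": "search", "latency_ms": 420, "input_tokens": 0, "output_tokens": 0},
    {"tool": "summarize", "latency_ms": 1800, "input_tokens": 3200, "output_tokens": 400},
    {"tool": "summarize", "latency_ms": 1750, "input_tokens": 3100, "output_tokens": 380},
    {"tool": "search", "latency_ms": 390, "input_tokens": 0, "output_tokens": 0},
]


def per_tool_totals(spans):
    """Aggregate call count, total latency, and total tokens for each tool."""
    totals = defaultdict(lambda: {"calls": 0, "latency_ms": 0, "tokens": 0})
    for s in spans:
        t = totals[s["tool"]]
        t["calls"] += 1
        t["latency_ms"] += s["latency_ms"]
        t["tokens"] += s["input_tokens"] + s["output_tokens"]
    return dict(totals)


totals = per_tool_totals(spans)
# Sort by total latency so the slowest tool is listed first.
for tool, t in sorted(totals.items(), key=lambda kv: kv[1]["latency_ms"], reverse=True):
    print(f"{tool}: {t['calls']} calls, {t['latency_ms']} ms, {t['tokens']} tokens")
# summarize: 2 calls, 3550 ms, 7080 tokens
# search: 2 calls, 810 ms, 0 tokens
```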

For example, if a planner agent retries a summarization tool five times, it can significantly increase latency. Datadog highlights these loops, showing you exactly how long they took and the associated cost impact.
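One simple way to express a loop check is to group consecutive calls to the same tool and flag any run longer than a threshold, summing its duration and tokens. This is a hypothetical sketch, not how Datadog detects loops: the call tuples, the `min_repeats` threshold, and the use of token counts as a cost proxy are all assumptions.

```python
from itertools import groupby

# Hypothetical ordered tool calls from one trace: (tool_name, duration_ms, tokens).
calls = [
    ("plan", 300, 500),
    ("summarize", 1200, 3600),
    ("summarize", 1150, 3580),
    ("summarize", 1180, 3610),
    ("summarize", 1220, 3590),
    ("summarize", 1250, 3620),
    ("respond", 400, 900),
]


def find_loops(calls, min_repeats=3):
    """Flag runs where the same tool is called back to back at least min_repeats times."""
    loops = []
    # groupby groups only *adjacent* items that share a key, i.e. consecutive repeats.
    for tool, group in groupby(calls, key=lambda call: call[0]):
        run = list(group)
        if len(run) >= min_repeats:
            loops.append({
                "tool": tool,
                "repeats": len(run),
                "duration_ms": sum(call[1] for call in run),
                "tokens": sum(call[2] for call in run),
            })
    return loops


print(find_loops(calls))
# [{'tool': 'summarize', 'repeats': 5, 'duration_ms': 6000, 'tokens': 18000}]
```

This only catches back-to-back repeats; a planner that alternates between two tools would need a check over a sliding window instead.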

Evaluate agent response quality and security

Operational performance metrics like latency are critical monitoring signals, but for a holistic view of how agentic applications are performing, teams also need to evaluate the semantic quality of the LLM and agentic responses. Datadog provides built-in evaluations to detect hallucinations, PII leaks, prompt injections, and unsafe responses.
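Datadog's built-in evaluations run without any code on your side. Purely to show the shape of a PII check, the sketch below scans a response with two regular expressions. The patterns and the `find_pii` helper are hypothetical and far narrower than a production detector; this is not how Datadog implements its PII evaluation.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return every pattern label that matched, mapped to the matched substrings."""
    hits = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[label] = matches
    return hits


response = "Sure! I emailed jane.doe@example.com and her SSN is 123-45-6789 ."
print(find_pii(response))
# {'email': ['jane.doe@example.com'], 'us_ssn': ['123-45-6789']}
```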

You can also add custom evaluators, including LLM-as-a-judge evaluators, for domain-specific checks. For instance, if a retrieval agent fetches irrelevant documents that lead to off-topic answers, a custom evaluator can flag that trace as having low retrieval relevance.
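The sketch below shows the basic contract of such a check: it takes the query and the retrieved documents and returns a score and a label. It swaps the LLM-as-a-judge approach for a much cruder keyword-overlap heuristic so the example runs without a model. The function names, the stopword list, and the 0.5 threshold are hypothetical choices, not Datadog's custom evaluator API.

```python
import re

# Small illustrative stopword list so filler words don't count as matches.
STOPWORDS = {"a", "an", "the", "is", "for", "of", "to", "and", "what", "how", "my"}


def terms(text: str) -> set[str]:
    """Lowercase alphanumeric terms, minus stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieval_relevance(query: str, documents: list[str]) -> float:
    """Fraction of query terms found in at least one retrieved document."""
    query_terms = terms(query)
    if not query_terms:
        return 0.0
    doc_terms = set().union(*(terms(doc) for doc in documents))
    return len(query_terms & doc_terms) / len(query_terms)


def evaluate(query: str, documents: list[str], threshold: float = 0.5) -> dict:
    """Label a retrieval step as low relevance when the score falls below threshold."""
    score = retrieval_relevance(query, documents)
    return {"score": round(score, 2), "label": "low_relevance" if score < threshold else "ok"}


query = "What is the refund policy for damaged items?"
off_topic = ["Our shipping rates depend on the destination.", "Track your package from the orders page."]
on_topic = ["Damaged items can be returned for a full refund.", "See the refund policy for details."]

print(evaluate(query, off_topic))  # {'score': 0.0, 'label': 'low_relevance'}
print(evaluate(query, on_topic))   # {'score': 1.0, 'label': 'ok'}
```

An LLM-as-a-judge evaluator would keep the same inputs and outputs but replace `retrieval_relevance` with a model call that grades the documents against the query.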

Iterate quickly and confidently with experiments

When you roll out a new system prompt, you might notice latency spikes or drift in output consistency. Datadog lets you replay production LLM calls in its Playground to test different models, prompts, or parameters and find the configurations that bring your agent closer to its intended behavior.

From there, you can run structured experiments that compare versions side by side, using datasets built from real traffic, to optimize operational and functional performance. Because every agent step is logged through ADK instrumentation, you have the full context you need to reproduce regressions and validate fixes before you deploy.
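As a rough, self-contained sketch of what a side-by-side experiment computes, the code below runs two agent versions over the same small dataset and reports accuracy and mean latency for each. The dataset, the `agent_v1` and `agent_v2` stand-ins (lookup tables in place of real ADK agents), and the exact-match metric are all hypothetical. Datadog's experiments feature has its own SDK and UI, which this does not reproduce.

```python
import time
from statistics import mean
from typing import Callable

# Hypothetical dataset built from production traffic: (input, expected output) pairs.
DATASET = [
    ("What is 2 + 2?", "4"),
    ("Capital of France?", "Paris"),
    ("Opposite of hot?", "cold"),
]


# Stand-ins for two agent versions, e.g. before and after a system prompt change.
def agent_v1(question: str) -> str:
    return {"What is 2 + 2?": "4", "Capital of France?": "Lyon"}.get(question, "unknown")


def agent_v2(question: str) -> str:
    answers = {"What is 2 + 2?": "4", "Capital of France?": "Paris", "Opposite of hot?": "cold"}
    return answers.get(question, "unknown")


def run_experiment(agent: Callable[[str], str]) -> dict:
    """Score one agent version on every dataset row: exact-match accuracy and latency."""
    correct, latencies = 0, []
    for question, expected in DATASET:
        start = time.perf_counter()
        answer = agent(question)
        latencies.append((time.perf_counter() - start) * 1000)
        correct += answer.strip().lower() == expected.lower()
    return {"accuracy": correct / len(DATASET), "mean_latency_ms": mean(latencies)}


results = {name: run_experiment(fn) for name, fn in [("v1", agent_v1), ("v2", agent_v2)]}
for name, r in results.items():
    print(f"{name}: accuracy={r['accuracy']:.2f}, mean latency={r['mean_latency_ms']:.3f} ms")
```

Because both versions see identical inputs, differences in the metrics can be attributed to the change under test rather than to shifts in traffic.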

Get started with Datadog LLM Observability

Datadog LLM Observability simplifies monitoring and debugging for Google ADK systems, helping users debug agent operations, evaluate responses, iterate quickly, and validate changes before deploying them to production.

You can get started today with the latest version of the LLM Observability SDK, or start a free trial if you are new to Datadog.

For more information on how to debug agent operations and evaluate responses, view Datadog’s LLM Observability documentation.