Today, we are excited to announce that Pixtral 12B (pixtral-12b-2409), a state-of-the-art vision language model (VLM) from Mistral AI that excels in both text-only and multimodal tasks, is available for customers through Amazon SageMaker JumpStart. You can try this model with SageMaker JumpStart, a machine learning (ML) hub that provides access to algorithms and models that can be deployed with one click for running inference.

In this post, we walk through how to discover, deploy, and use the Pixtral 12B model for a variety of real-world vision use cases.

Pixtral 12B overview

Pixtral 12B represents Mistral’s first VLM and demonstrates strong performance across various benchmarks, outperforming other open models and matching larger models, according to Mistral. Pixtral is trained to understand both images and documents, and shows strong abilities in vision tasks such as chart and figure understanding, document question answering, multimodal reasoning, and instruction following, some of which we demonstrate later in this post with examples. Pixtral 12B is able to ingest images at their natural resolution and aspect ratio. Unlike other open source models, Pixtral doesn’t compromise on text benchmark performance, such as instruction following, coding, and math, to excel in multimodal tasks.

Mistral designed a novel architecture for Pixtral 12B to optimize for both speed and performance. The model has two components: a 400-million-parameter vision encoder, which tokenizes images, and a 12-billion-parameter multimodal transformer decoder, which predicts the next text token given a sequence of text and images. The vision encoder was newly trained to natively support variable image sizes, which allows Pixtral to accurately understand complex diagrams, charts, and documents in high resolution, and provides fast inference on small images such as icons, clipart, and equations. This architecture allows Pixtral to process any number of images of arbitrary size within its large context window of 128,000 tokens.

License agreements are a critical decision factor when using open-weights models. Similar to other Mistral models, such as Mistral 7B, Mixtral 8x7B, Mixtral 8x22B, and Mistral Nemo 12B, Pixtral 12B is released under the commercially permissive Apache 2.0 license, providing enterprise and startup customers with a high-performing VLM option to build complex multimodal applications.

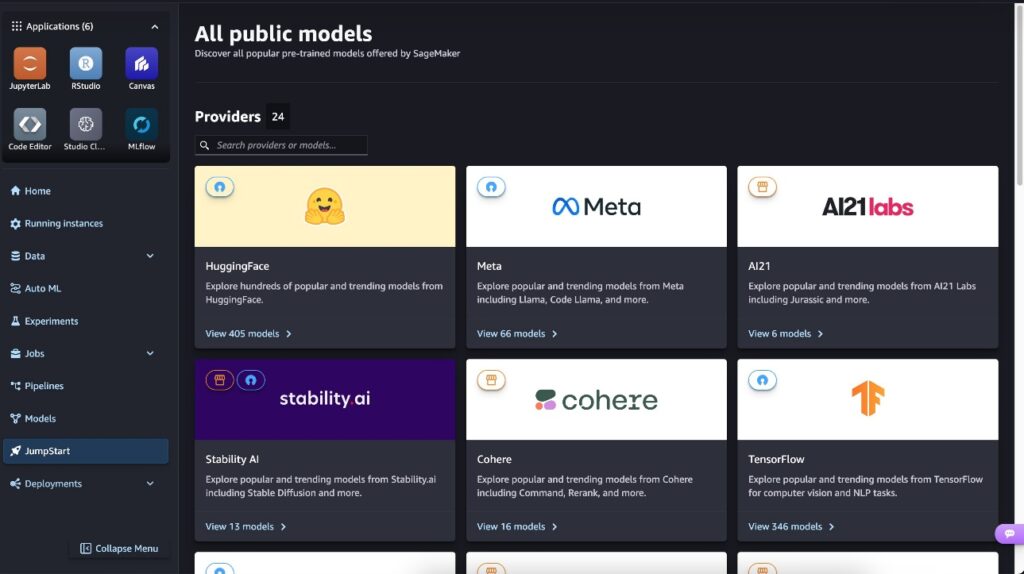

SageMaker JumpStart overview

SageMaker JumpStart offers access to a broad selection of publicly available foundation models (FMs). These pre-trained models serve as powerful starting points that can be deeply customized to address specific use cases. You can now use state-of-the-art model architectures, such as language models, computer vision models, and more, without having to build them from scratch.

With SageMaker JumpStart, you can deploy models in a secure environment. The models can be provisioned on dedicated SageMaker Inference instances, including AWS Trainium and AWS Inferentia powered instances, and are isolated within your virtual private cloud (VPC). This enforces data security and compliance, because the models operate under your own VPC controls rather than in a shared public environment. After deploying an FM, you can further customize and fine-tune it with SageMaker capabilities, including SageMaker Inference for deploying models and container logs for improved observability. With SageMaker, you can streamline the entire model deployment process. Note that fine-tuning of Pixtral 12B is not yet available on SageMaker JumpStart at the time of writing.

Prerequisites

To try out Pixtral 12B in SageMaker JumpStart, you need the following prerequisites:

An AWS account that will contain all your AWS resources.

An AWS Identity and Access Management (IAM) role to access SageMaker. To learn more about how IAM works with SageMaker, refer to Identity and Access Management for Amazon SageMaker.

Access to Amazon SageMaker Studio, a SageMaker notebook instance, or an interactive development environment (IDE) such as PyCharm or Visual Studio Code. We recommend using SageMaker Studio for straightforward deployment and inference.

Access to accelerated instances (GPUs) for hosting the model.

Discover Pixtral 12B in SageMaker JumpStart

You can access Pixtral 12B through SageMaker JumpStart in the SageMaker Studio UI and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

SageMaker Studio is an IDE that provides a single web-based visual interface where you can access purpose-built tools to perform ML development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, refer to Amazon SageMaker Studio Classic.

In SageMaker Studio, access SageMaker JumpStart by choosing JumpStart in the navigation pane.

Choose HuggingFace to access the Pixtral 12B model.

Search for the Pixtral 12B model.

You can choose the model card to view details about the model such as license, data used to train, and how to use the model.

Choose Deploy to deploy the model and create an endpoint.
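The same discovery step can be approximated from the SageMaker Python SDK. The following is a minimal sketch, assuming the SDK is installed and AWS credentials and a Region are configured. `list_jumpstart_models` comes from `sagemaker.jumpstart.notebook_utils`; the helpers `filter_model_ids` and `find_pixtral_models` and the `pixtral` keyword are illustrative names, not part of the SDK.

```python
from typing import Iterable, List


def filter_model_ids(model_ids: Iterable[str], keyword: str) -> List[str]:
    """Return the model IDs that contain the keyword (case-insensitive), sorted."""
    needle = keyword.lower()
    return sorted(m for m in model_ids if needle in m.lower())


def find_pixtral_models(keyword: str = "pixtral") -> List[str]:
    """List JumpStart model IDs and keep those that match the keyword.

    Needs the sagemaker package and AWS credentials, so the import is done
    lazily; filter_model_ids above works without either.
    """
    from sagemaker.jumpstart.notebook_utils import list_jumpstart_models

    return filter_model_ids(list_jumpstart_models(), keyword)
```

Calling `find_pixtral_models()` in a configured environment should return the matching model IDs, which you can then pass to the deployment step.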

Deploy the model in SageMaker JumpStart

Deployment starts when you choose Deploy. When deployment is complete, an endpoint is created. You can test the endpoint by passing a sample inference request payload or by choosing the option to test with the SDK. If you choose the SDK option, you see example code that you can run in the notebook editor of your choice in SageMaker Studio.

To deploy using the SDK, we start by selecting the Pixtral 12B model, specified by the model_id with the value huggingface-vlm-mistral-pixtral-12b-2409. You can deploy any of the selected models on SageMaker with the following code:
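A minimal sketch of this deployment, assuming the SageMaker Python SDK is installed and an execution role and Region are configured. `JumpStartModel` and its `deploy` method are part of the SDK; the wrapper function `deploy_pixtral` and its arguments are illustrative, and the explicit `accept_eula` check is a design choice of this sketch so that the agreement is never accepted silently.

```python
from typing import Optional

# Model ID given in this post for Pixtral 12B.
MODEL_ID = "huggingface-vlm-mistral-pixtral-12b-2409"


def deploy_pixtral(
    model_id: str = MODEL_ID,
    instance_type: Optional[str] = None,
    accept_eula: bool = False,
):
    """Deploy a JumpStart model and return its predictor.

    instance_type=None keeps the JumpStart default instance type.
    The caller must pass accept_eula=True explicitly.
    """
    if not accept_eula:
        raise ValueError("Pass accept_eula=True to accept the model's EULA.")

    # Imported lazily so the module loads without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    kwargs = {"model_id": model_id}
    if instance_type is not None:
        kwargs["instance_type"] = instance_type  # non-default configuration

    model = JumpStartModel(**kwargs)
    return model.deploy(accept_eula=True)
```

For example, `predictor = deploy_pixtral(accept_eula=True)` creates a billable endpoint with the default configuration.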

This deploys the model on SageMaker with default configurations, including the default instance type and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel. To accept the end-user license agreement (EULA), you must explicitly set the EULA value to True. Also, make sure that your account-level service quota allows one or more ml.p4d.24xlarge or ml.p4de.24xlarge instances for endpoint usage. To request a service quota increase, refer to AWS service quotas. After you deploy the model, you can run inference against the deployed endpoint through the SageMaker predictor.

Pixtral 12B use cases

In this section, we provide examples of inference on Pixtral 12B with example prompts.
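Each example pairs a text prompt with an image. The following sketch shows one way such a request could be assembled. The chat-style `messages` schema with an `image_url` data URL is an assumption about what the endpoint's serving container accepts and is not confirmed by this post; the helper name `build_vision_payload` and the default `max_tokens` value are also illustrative.

```python
import base64
from typing import Any, Dict


def build_vision_payload(
    prompt: str,
    image_bytes: bytes,
    mime_type: str = "image/png",
    max_tokens: int = 2000,
) -> Dict[str, Any]:
    """Build a request body with one text part and one base64-encoded image.

    ASSUMPTION: the endpoint accepts an OpenAI-style chat payload; adjust
    the structure if your container expects a different schema.
    """
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
        "max_tokens": max_tokens,
    }
```

With a deployed predictor, a call such as `predictor.predict(build_vision_payload(prompt, image_bytes))` would send the request. The response structure depends on the serving container, so inspect it before parsing.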

OCR

We use the following image as input for OCR.

We use the following prompt:

Chart understanding and analysis

For chart understanding and analysis, we use the following image as input.

We use the following prompt:

We get the following output:

Image to code

For an image-to-code example, we use the following image as input.

We use the following prompt:

Clean up

After you are done, delete the SageMaker endpoints using the following code to avoid incurring unnecessary costs:
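A minimal cleanup sketch, assuming `predictor` is the object returned when the model was deployed with the SageMaker Python SDK. `delete_model` and `delete_endpoint` are methods of the SDK's predictor class; the wrapper name `delete_endpoint_resources` is illustrative.

```python
def delete_endpoint_resources(predictor) -> None:
    """Delete the model, then the endpoint, behind a SageMaker predictor."""
    # Remove the SageMaker model first, while the endpoint still references it.
    predictor.delete_model()
    # Remove the endpoint; by default the SDK also deletes its endpoint config.
    predictor.delete_endpoint()
```

After `delete_endpoint_resources(predictor)` returns, confirm in the SageMaker console that no endpoint remains in service.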

Conclusion

In this post, we showed you how to get started with Mistral’s newest multimodal model, Pixtral 12B, in SageMaker JumpStart and deploy the model for inference. We also explored how SageMaker JumpStart helps data scientists and ML engineers discover, access, and deploy a wide range of pre-trained FMs for inference, including other Mistral AI models, such as Mistral 7B and Mixtral 8x22B.

For more information about SageMaker JumpStart, refer to Train, deploy, and evaluate pretrained models with SageMaker JumpStart and Getting started with Amazon SageMaker JumpStart.

For more Mistral assets, check out the Mistral-on-AWS repo.

About the Authors

Preston Tuggle is a Sr. Specialist Solutions Architect working on generative AI.

Niithiyn Vijeaswaran is a GenAI Specialist Solutions Architect at AWS. His area of focus is generative AI and AWS AI Accelerators. He holds a Bachelor’s degree in Computer Science and Bioinformatics. Niithiyn works closely with the Generative AI GTM team to enable AWS customers on multiple fronts and accelerate their adoption of generative AI. He’s an avid fan of the Dallas Mavericks and enjoys collecting sneakers.

Shane Rai is a Principal GenAI Specialist with the AWS World Wide Specialist Organization (WWSO). He works with customers across industries to solve their most pressing and innovative business needs using the breadth of cloud-based AI/ML AWS services, including model offerings from top-tier foundation model providers.